Fairness and Explainability with SageMaker Clarify using AWS SDK for Python (Boto3)

Runtime

This notebook takes approximately 30 minutes to run.

Overview

Amazon SageMaker Clarify helps improve your machine learning models by detecting potential bias and helping explain how these models make predictions. The fairness and explainability functionality provided by SageMaker Clarify takes a step towards enabling AWS customers to build trustworthy and understandable machine learning models. The product comes with the tools to help you with the following tasks.

Measure biases that can occur during each stage of the ML lifecycle (data collection, model training and tuning, and monitoring of ML models deployed for inference).

Generate model governance reports targeting risk and compliance teams and external regulators.

Provide explanations of the data, models, and monitoring used to assess predictions.

To demonstrate this, the notebook first trains a SageMaker XGBoost model on the training dataset, then uses the AWS SDK for Python (Boto3) to launch SageMaker Clarify jobs that analyze an example dataset in CSV format. Peer examples are also available that use the SageMaker Python SDK to launch SageMaker Clarify jobs on data in CSV and JSON Lines formats.

Prerequisites and Data

Import libraries

[1]:

import pandas as pd

import numpy as np

import os

import boto3

import time

from datetime import datetime

from sagemaker import get_execution_role, session

Set configurations

[2]:

# Initialize sagemaker session

sagemaker_session = session.Session()

region = sagemaker_session.boto_region_name

print(f"Region: {region}")

role = get_execution_role()

print(f"Role: {role}")

bucket = sagemaker_session.default_bucket()

prefix = "sagemaker/DEMO-sagemaker-clarify-boto3"

Region: ap-south-1

Role: arn:aws:iam::000000000000:role/service-role/SMClarifySageMaker-ExecutionRole

Download data

Data Source: https://archive.ics.uci.edu/ml/machine-learning-databases/adult/

Let’s download the data from the UCI repository\(^{[2]}\) and save it in the local folder as adult.data and adult.test.

\(^{[2]}\)Dua Dheeru, and Efi Karra Taniskidou. “UCI Machine Learning Repository”. Irvine, CA: University of California, School of Information and Computer Science (2017).

[3]:

from sagemaker.s3 import S3Downloader

adult_columns = [

"Age",

"Workclass",

"fnlwgt",

"Education",

"Education-Num",

"Marital Status",

"Occupation",

"Relationship",

"Ethnic group",

"Sex",

"Capital Gain",

"Capital Loss",

"Hours per week",

"Country",

"Target",

]

if not os.path.isfile("adult.data"):
    S3Downloader.download(
        s3_uri="s3://{}/{}".format(
            f"sagemaker-example-files-prod-{region}", "datasets/tabular/uci_adult/adult.data"
        ),
        local_path="./",
        sagemaker_session=sagemaker_session,
    )
    print("adult.data saved!")
else:
    print("adult.data already on disk.")

if not os.path.isfile("adult.test"):
    S3Downloader.download(
        s3_uri="s3://{}/{}".format(
            f"sagemaker-example-files-prod-{region}", "datasets/tabular/uci_adult/adult.test"
        ),
        local_path="./",
        sagemaker_session=sagemaker_session,
    )
    print("adult.test saved!")
else:
    print("adult.test already on disk.")

adult.data saved!

adult.test saved!

Loading the data: Adult Dataset

From the UCI repository of machine learning datasets, this database contains 14 features concerning demographic characteristics of 45,222 rows (32,561 for training and 12,661 for testing). The task is to predict whether a person has a yearly income that is more or less than $50,000.

Here are the features and their possible values:

Age: continuous.

Workclass: Private, Self-emp-not-inc, Self-emp-inc, Federal-gov, Local-gov, State-gov, Without-pay, Never-worked.

Fnlwgt: continuous (the number of people the census takers believe that observation represents).

Education: Bachelors, Some-college, 11th, HS-grad, Prof-school, Assoc-acdm, Assoc-voc, 9th, 7th-8th, 12th, Masters, 1st-4th, 10th, Doctorate, 5th-6th, Preschool.

Education-num: continuous.

Marital-status: Married-civ-spouse, Divorced, Never-married, Separated, Widowed, Married-spouse-absent, Married-AF-spouse.

Occupation: Tech-support, Craft-repair, Other-service, Sales, Exec-managerial, Prof-specialty, Handlers-cleaners, Machine-op-inspct, Adm-clerical, Farming-fishing, Transport-moving, Priv-house-serv, Protective-serv, Armed-Forces.

Relationship: Wife, Own-child, Husband, Not-in-family, Other-relative, Unmarried.

Ethnic group: White, Asian-Pac-Islander, Amer-Indian-Eskimo, Other, Black.

Sex: Female, Male.

Note: this data is extracted from the 1994 Census and enforces a binary option on Sex

Capital-gain: continuous.

Capital-loss: continuous.

Hours-per-week: continuous.

Native-country: United-States, Cambodia, England, Puerto-Rico, Canada, Germany, Outlying-US(Guam-USVI-etc), India, Japan, Greece, South, China, Cuba, Iran, Honduras, Philippines, Italy, Poland, Jamaica, Vietnam, Mexico, Portugal, Ireland, France, Dominican-Republic, Laos, Ecuador, Taiwan, Haiti, Columbia, Hungary, Guatemala, Nicaragua, Scotland, Thailand, Yugoslavia, El-Salvador, Trinadad&Tobago, Peru, Hong, Holand-Netherlands.

Next, we specify our binary prediction task:

Target: <=$50,000, >$50,000.

[4]:

training_data = pd.read_csv(

"adult.data", names=adult_columns, sep=r"\s*,\s*", engine="python", na_values="?"

).dropna()

testing_data = pd.read_csv(

"adult.test", names=adult_columns, sep=r"\s*,\s*", engine="python", na_values="?", skiprows=1

).dropna()

training_data.head()

[4]:

| | Age | Workclass | fnlwgt | Education | Education-Num | Marital Status | Occupation | Relationship | Ethnic group | Sex | Capital Gain | Capital Loss | Hours per week | Country | Target |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 39 | State-gov | 77516 | Bachelors | 13 | Never-married | Adm-clerical | Not-in-family | White | Male | 2174 | 0 | 40 | United-States | <=50K |

| 1 | 50 | Self-emp-not-inc | 83311 | Bachelors | 13 | Married-civ-spouse | Exec-managerial | Husband | White | Male | 0 | 0 | 13 | United-States | <=50K |

| 2 | 38 | Private | 215646 | HS-grad | 9 | Divorced | Handlers-cleaners | Not-in-family | White | Male | 0 | 0 | 40 | United-States | <=50K |

| 3 | 53 | Private | 234721 | 11th | 7 | Married-civ-spouse | Handlers-cleaners | Husband | Black | Male | 0 | 0 | 40 | United-States | <=50K |

| 4 | 28 | Private | 338409 | Bachelors | 13 | Married-civ-spouse | Prof-specialty | Wife | Black | Female | 0 | 0 | 40 | Cuba | <=50K |

Data inspection

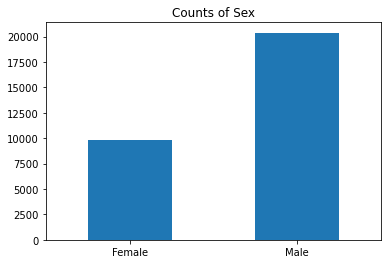

[5]:

%matplotlib inline

training_data["Sex"].value_counts().sort_values().plot(kind="bar", title="Counts of Sex", rot=0)

[5]:

<AxesSubplot:title={'center':'Counts of Sex'}>

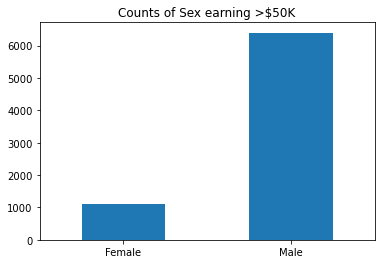

[6]:

training_data["Sex"].where(training_data["Target"] == ">50K").value_counts().sort_values().plot(

kind="bar", title="Counts of Sex earning >$50K", rot=0

)

[6]:

<AxesSubplot:title={'center':'Counts of Sex earning >$50K'}>

Encode and Upload the Dataset

Here we encode the training and test data. Encoding input data is not necessary for SageMaker Clarify, but is necessary for the model.

[7]:

from sklearn import preprocessing

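def number_encode_features(df):
    # Label-encode every string (object-dtype) column; numeric columns pass through unchanged.
    result = df.copy()
    encoders = {}
    for column in result.columns:
        # np.object was removed in NumPy 1.24; the built-in object is the equivalent dtype check.
        if result.dtypes[column] == object:
            encoders[column] = preprocessing.LabelEncoder()
            result[column] = encoders[column].fit_transform(result[column].fillna("None"))
    return result, encoders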

training_data = pd.concat([training_data["Target"], training_data.drop(["Target"], axis=1)], axis=1)

training_data, _ = number_encode_features(training_data)

training_data.to_csv("train_data.csv", index=False, header=False)

testing_data, _ = number_encode_features(testing_data)

test_features = testing_data.drop(["Target"], axis=1)

test_target = testing_data["Target"]

test_features.to_csv("test_features.csv", index=False, header=False)

A quick note about our encoding: the “Female” Sex value has been encoded as 0 and “Male” as 1.
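The mapping noted above follows from how a label encoder assigns codes: it sorts the distinct values and numbers them from 0. The following is a minimal pure-Python sketch of that behaviour. The helper name `label_codes` is hypothetical and only mimics the encoder for illustration; it is not part of scikit-learn.

```python
def label_codes(values):
    """Mimic a label encoder: sort the distinct values, then number them from 0."""
    classes = sorted(set(values))
    return {value: code for code, value in enumerate(classes)}


# Small hand-written samples (not read from the dataset).
sex_codes = label_codes(["Male", "Female", "Male", "Female"])
target_codes = label_codes(["<=50K", ">50K", "<=50K"])

print(sex_codes)     # {'Female': 0, 'Male': 1}
print(target_codes)  # {'<=50K': 0, '>50K': 1}
```

The same ordering explains why the bias configuration later in this notebook uses `1` for the positive outcome (`>50K`) and `0` for the `Female` facet value.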

[8]:

training_data.head()

[8]:

| | Target | Age | Workclass | fnlwgt | Education | Education-Num | Marital Status | Occupation | Relationship | Ethnic group | Sex | Capital Gain | Capital Loss | Hours per week | Country |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 39 | 5 | 77516 | 9 | 13 | 4 | 0 | 1 | 4 | 1 | 2174 | 0 | 40 | 38 |

| 1 | 0 | 50 | 4 | 83311 | 9 | 13 | 2 | 3 | 0 | 4 | 1 | 0 | 0 | 13 | 38 |

| 2 | 0 | 38 | 2 | 215646 | 11 | 9 | 0 | 5 | 1 | 4 | 1 | 0 | 0 | 40 | 38 |

| 3 | 0 | 53 | 2 | 234721 | 1 | 7 | 2 | 5 | 0 | 2 | 1 | 0 | 0 | 40 | 38 |

| 4 | 0 | 28 | 2 | 338409 | 9 | 13 | 2 | 9 | 5 | 2 | 0 | 0 | 0 | 40 | 4 |

Lastly, let’s upload the data to S3.

[9]:

from sagemaker.s3 import S3Uploader

from sagemaker.inputs import TrainingInput

train_uri = S3Uploader.upload(

local_path="train_data.csv",

desired_s3_uri="s3://{}/{}".format(bucket, prefix),

sagemaker_session=sagemaker_session,

)

train_input = TrainingInput(train_uri, content_type="csv")

test_uri = S3Uploader.upload(

local_path="test_features.csv",

desired_s3_uri="s3://{}/{}".format(bucket, prefix),

sagemaker_session=sagemaker_session,

)

Train XGBoost Model

Train Model

Since our focus is on understanding how to use SageMaker Clarify, we keep it simple by using a standard XGBoost model. For this section we use the Amazon SageMaker Python SDK for simplicity.

It takes about 5 minutes for the model to be trained.

[10]:

from sagemaker.image_uris import retrieve

from sagemaker.estimator import Estimator

# This references the AWS managed XGBoost container

xgboost_image_uri = retrieve(region=region, framework="xgboost", version="1.5-1")

xgb = Estimator(

xgboost_image_uri,

role,

instance_count=1,

instance_type="ml.m5.xlarge",

disable_profiler=True,

sagemaker_session=sagemaker_session,

)

xgb.set_hyperparameters(

max_depth=5,

eta=0.2,

gamma=4,

min_child_weight=6,

subsample=0.8,

objective="binary:logistic",

num_round=800,

)

xgb.fit({"train": train_input}, logs=False)

INFO:sagemaker:Creating training-job with name: sagemaker-xgboost-2023-01-19-01-01-28-728

2023-01-19 01:01:28 Starting - Starting the training job...

2023-01-19 01:01:46 Starting - Preparing the instances for training......

2023-01-19 01:02:23 Downloading - Downloading input data....

2023-01-19 01:02:48 Training - Downloading the training image...

2023-01-19 01:03:08 Training - Training image download completed. Training in progress.......

2023-01-19 01:03:44 Uploading - Uploading generated training model.

2023-01-19 01:03:55 Completed - Training job completed

Create Model

Here we create the SageMaker model.

[11]:

model_name = "DEMO-clarify-xgboost-model"

model = xgb.create_model(name=model_name)

container_def = model.prepare_container_def()

sagemaker_session.create_model(model_name, role, container_def)

INFO:sagemaker:Creating model with name: DEMO-clarify-xgboost-model

[11]:

'DEMO-clarify-xgboost-model'

Amazon SageMaker Clarify

With your model set up, it’s time to explore SageMaker Clarify. For a general overview of how SageMaker Clarify processing jobs work, refer to the SageMaker Clarify documentation. This section demonstrates how to use the AWS SDK for Python (Boto3) to launch SageMaker Clarify processing jobs.

Set Configurations

[12]:

# Initialise SageMaker boto3 client

sagemaker_client = boto3.Session().client("sagemaker")

# Note: For convenience, this demo reuses the role fetched in the first section so that the notebook

# can be easily executed in SageMaker Studio or on a SageMaker Notebook Instance.

# You can use your own execution role for your project.

print(f"Role: {role}")

print(f"Region: {region}")

INFO:botocore.credentials:Found credentials from IAM Role: BaseNotebookInstanceEc2InstanceRole

Role: arn:aws:iam::000000000000:role/service-role/SMClarifySageMaker-ExecutionRole

Region: ap-south-1

Get Started with a SageMaker Clarify Container

Amazon SageMaker provides prebuilt SageMaker Clarify container images that include the libraries and other dependencies needed to compute bias metrics and feature attributions for explainability. These images are enabled to run SageMaker Clarify processing jobs in your account.

The following code uses the SageMaker Python SDK to retrieve the image URI. If you are unable to use the SageMaker Python SDK, you can find the image URI on the regional image URI page.

[13]:

clarify_image_uri = retrieve(region=region, framework="clarify", version="1.0")

print(f"Clarify Image URI: {clarify_image_uri}")

INFO:sagemaker.image_uris:Ignoring unnecessary instance type: None.

Clarify Image URI: 452307495513.dkr.ecr.ap-south-1.amazonaws.com/sagemaker-clarify-processing:1.0

Detecting Bias

SageMaker Clarify helps you detect possible pre-training and post-training biases using a variety of metrics.
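To give a feel for what such metrics measure, here is a minimal sketch of two pre-training metrics, Class Imbalance (CI) and Difference in Proportions of Labels (DPL), computed by hand on made-up data. It assumes the usual definitions, CI = (n_a - n_d) / (n_a + n_d) and DPL = q_a - q_d, where d is the sensitive facet and a is everyone else. The function names and the toy values are illustrative assumptions, not SageMaker Clarify code.

```python
def class_imbalance(facet_values, sensitive_value):
    """CI = (n_a - n_d) / (n_a + n_d); d is the sensitive facet, a is everyone else."""
    n_d = sum(1 for v in facet_values if v == sensitive_value)
    n_a = len(facet_values) - n_d
    return (n_a - n_d) / (n_a + n_d)


def difference_in_label_proportions(facet_values, labels, sensitive_value, positive_label=1):
    """DPL = q_a - q_d, where q is the share of positive labels within each facet."""
    d_labels = [y for v, y in zip(facet_values, labels) if v == sensitive_value]
    a_labels = [y for v, y in zip(facet_values, labels) if v != sensitive_value]
    q_d = sum(1 for y in d_labels if y == positive_label) / len(d_labels)
    q_a = sum(1 for y in a_labels if y == positive_label) / len(a_labels)
    return q_a - q_d


# Toy data (made up for illustration): Sex encoded as 0/1, Target encoded as 0/1.
sex = [0, 0, 1, 1, 1, 1, 1, 1]
target = [0, 1, 1, 1, 0, 1, 0, 1]

ci = class_imbalance(sex, sensitive_value=0)
dpl = difference_in_label_proportions(sex, target, sensitive_value=0)
print(f"CI = {ci:.3f}, DPL = {dpl:.3f}")
```

A positive CI means the sensitive facet is under-represented in the data, and a positive DPL means it receives the positive label less often than the other facet.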

Configure a SageMaker Clarify Processing Job Container’s Input and Output Parameters

The processing job requires two inputs: the dataset file or files, given as an Amazon S3 object or prefix with the input name “dataset”, and an analysis configuration file, given as an Amazon S3 object with the input name “analysis_config”. The job also requires an output parameter: the output location as an Amazon S3 prefix.

[14]:

bias_analysis_config_path = "s3://{}/{}/bias_analysis_config.json".format(bucket, prefix)

bias_analysis_result_path = "s3://{}/{}/bias_analysis_output".format(bucket, prefix)

Configure Analysis Config

The inputs for the analysis are configured by the parameters of the ProcessingInput API. The input whose input_name is “analysis_config” specifies the JSON file that contains the configuration values, and the path to that file is provided in the source parameter of ProcessingInput. In the Boto3 request used later in this notebook, these correspond to InputName and S3Input.S3Uri. For more details on the analysis configuration, refer to the SageMaker Clarify documentation.

For our example use case we will be using the following analysis config:

[15]:

!echo

!cat analysis_config/bias_analysis_config.json

{

"dataset_type": "text/csv",

"headers": ["Target", "Age", "Workclass", "fnlwgt", "Education", "Education-Num", "Marital Status", "Occupation", "Relationship", "Ethnic group", "Sex", "Capital Gain", "Capital Loss", "Hours per week", "Country"],

"label": "Target",

"label_values_or_threshold": [1],

"facet": [{

"name_or_index": "Sex",

"value_or_threshold": [0]

}],

"group_variable": "Age",

"probability_threshold": 0.8,

"methods": {

"pre_training_bias": {

"methods": "all"

},

"post_training_bias": {

"methods": "all"

},

"report": {

"name": "report",

"title": "Analysis Report"

}

},

"predictor": {

"model_name": "DEMO-clarify-xgboost-model",

"instance_type": "ml.m5.xlarge",

"initial_instance_count": 1,

"accept_type": "text/csv",

"content_type": "text/csv"

}

}

bias_analysis_config.json contains the configuration values for detecting bias with a SageMaker Clarify container:

dataset_type specifies the format of your dataset. Because this example uses a CSV dataset, the value is text/csv.

headers is the list of column names in the dataset.

label specifies the ground truth label, also known as the observed label or target attribute. It is used for many bias metrics. In this example, the “Target” column holds the ground truth label.

facet: SageMaker Clarify also needs to know which columns are sensitive (facets), which values of those columns are sensitive (facet: value_or_threshold), and which outcomes are desirable (label_values_or_threshold). SageMaker Clarify can handle both categorical and continuous data for facet: value_or_threshold and for label_values_or_threshold. In this case we are using categorical data. This example selects the sensitive attribute Sex to analyze. The results will show whether the model has a preference for records of one sex over the other.

group_variable: This example selects the “Age” column, which is used to form subgroups for measuring the bias metrics Conditional Demographic Disparity (CDD) and Conditional Demographic Disparity in Predicted Labels (CDDPL).

probability_threshold is the threshold used to select the binary label in the case of binary classification. The XGBoost model outputs probabilities, so SageMaker Clarify invokes the endpoint and then uses probability_threshold to convert each probability to a binary label for bias analysis. A prediction above the threshold is interpreted as label value 1, and a prediction below or equal to it as label value 0.

methods is the list of methods and their parameters for the analyses and reports. If any section is omitted, it is not computed.

pre_training_bias (under methods): Pre-training bias metrics to be computed. A detailed description of the metrics can be found in Measure Pre-training Bias. This example sets methods to “all” to compute all the pre-training bias metrics.

post_training_bias (under methods): Post-training bias metrics to be computed. A detailed description of the metrics can be found in Measure Post-training Bias. This example sets methods to “all” to compute all the post-training bias metrics.

predictor includes the model configuration. This section is required if the analysis needs predictions from the model.

model_name (under predictor): the name of the model to analyze, here the XGBoost model created earlier, DEMO-clarify-xgboost-model.

instance_type and initial_instance_count (under predictor) specify the instance type and instance count used to run your model during SageMaker Clarify’s processing. The example dataset is small, so a single standard instance is good enough to run this example.

accept_type and content_type (under predictor): accept_type denotes the endpoint response payload format, and content_type denotes the payload format of the request to the endpoint. For the example model created above, both are text/csv.
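The notebook ships this configuration as a ready-made JSON file. As an alternative, the same structure could be assembled in Python and serialized, which makes it easy to sanity-check that the label and facet columns actually appear in the headers. The sketch below mirrors the file shown above; the helper `to_binary_label` is a hypothetical illustration of the thresholding rule, not a SageMaker Clarify function.

```python
import json

headers = [
    "Target", "Age", "Workclass", "fnlwgt", "Education", "Education-Num",
    "Marital Status", "Occupation", "Relationship", "Ethnic group", "Sex",
    "Capital Gain", "Capital Loss", "Hours per week", "Country",
]

bias_config = {
    "dataset_type": "text/csv",
    "headers": headers,
    "label": "Target",
    "label_values_or_threshold": [1],  # 1 encodes the ">50K" outcome
    "facet": [{"name_or_index": "Sex", "value_or_threshold": [0]}],  # 0 encodes "Female"
    "group_variable": "Age",
    "probability_threshold": 0.8,
    "methods": {
        "pre_training_bias": {"methods": "all"},
        "post_training_bias": {"methods": "all"},
        "report": {"name": "report", "title": "Analysis Report"},
    },
    "predictor": {
        "model_name": "DEMO-clarify-xgboost-model",
        "instance_type": "ml.m5.xlarge",
        "initial_instance_count": 1,
        "accept_type": "text/csv",
        "content_type": "text/csv",
    },
}

# Sanity checks: every column the config refers to must exist in the headers.
referenced = [bias_config["label"], bias_config["group_variable"]]
referenced += [facet["name_or_index"] for facet in bias_config["facet"]]
missing = [name for name in referenced if name not in headers]
if missing:
    raise ValueError(f"Columns not found in headers: {missing}")

config_json = json.dumps(bias_config, indent=4)


def to_binary_label(probability, threshold=bias_config["probability_threshold"]):
    """Strictly above the threshold means label 1; equal or below means label 0."""
    return 1 if probability > threshold else 0


print(to_binary_label(0.80), to_binary_label(0.81))  # 0 1
```

The resulting `config_json` string could be written to a local file and uploaded in the same way as the bundled configuration.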

[16]:

# Upload the analysis_config to the concerned S3 path.

S3Uploader.upload("analysis_config/bias_analysis_config.json", "s3://{}/{}".format(bucket, prefix))

INFO:botocore.credentials:Found credentials from IAM Role: BaseNotebookInstanceEc2InstanceRole

[16]:

's3://sagemaker-ap-south-1-000000000000/sagemaker/DEMO-sagemaker-clarify-boto3/bias_analysis_config.json'

Run SageMaker Clarify Processing Job

Refer to the SageMaker Clarify documentation to configure a processing job for your use case.

[17]:

def create_processing_job(analysis_config_path, analysis_result_path):
    processing_job_name = "DEMO-clarify-job-{}".format(datetime.now().strftime("%d-%m-%Y-%H-%M-%S"))
    response = sagemaker_client.create_processing_job(
        ProcessingJobName=processing_job_name,
        AppSpecification={"ImageUri": clarify_image_uri},
        ProcessingInputs=[
            {
                "InputName": "analysis_config",
                "S3Input": {
                    "S3DataType": "S3Prefix",
                    "S3InputMode": "File",
                    "S3Uri": analysis_config_path,
                    "LocalPath": "/opt/ml/processing/input/config",
                },
            },
            {
                "InputName": "dataset",
                "S3Input": {
                    "S3DataType": "S3Prefix",
                    "S3InputMode": "File",
                    "S3Uri": train_uri,
                    "LocalPath": "/opt/ml/processing/input/data",
                },
            },
        ],
        ProcessingOutputConfig={
            "Outputs": [
                {
                    "OutputName": "analysis_result",
                    "S3Output": {
                        "S3Uri": analysis_result_path,
                        "LocalPath": "/opt/ml/processing/output",
                        "S3UploadMode": "EndOfJob",
                    },
                }
            ]
        },
        ProcessingResources={
            "ClusterConfig": {
                "InstanceCount": 1,
                "InstanceType": "ml.m5.xlarge",
                "VolumeSizeInGB": 30,
            }
        },
        StoppingCondition={
            "MaxRuntimeInSeconds": 3600,
        },
        RoleArn=role,
    )
    return processing_job_name

Here is a brief explanation of the inputs used above. For detailed documentation, see the CreateProcessingJob API reference:

AppSpecification: Here we provide the region-specific SageMaker Clarify image URI we fetched earlier.

ProcessingInputs: A SageMaker Clarify job requires two ProcessingInput parameters.

InputName: analysis_config: The analysis configuration JSON file for a SageMaker Clarify job must be specified as an Amazon S3 object with the InputName “analysis_config”. We use the example analysis configuration files provided with this notebook.

InputName: dataset: The dataset, provided as an Amazon S3 object. We use the training dataset uploaded earlier (train_uri).

ProcessingOutputConfig: The job also requires an output parameter, the output location as an Amazon S3 prefix with the OutputName “analysis_result”. The S3UploadMode should be set to “EndOfJob”, because the analysis results are generated at the end of the job. Here we provide the analysis_result_path that we configured earlier.

ProcessingResources contains the ClusterConfig, which specifies the ML compute instance type and the instance count. SageMaker Clarify SHAP analysis is CPU-intensive. To speed up the analysis, use a more powerful instance type or add more instances to enable Spark parallelization. The SageMaker Clarify job does not use GPUs.

StoppingCondition: This example uses a maximum limit of 60 minutes for the job run. You can set the MaxRuntimeInSeconds of a SageMaker Clarify job to up to 7 days (604800 seconds). If the job cannot be completed within this time limit, it is force-stopped and no analysis results are provided.
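Since the runtime limit is easy to get wrong when converting units, a small guard can help. The following sketch builds the StoppingCondition dictionary from a value in minutes and rejects anything outside the 7-day limit quoted above. The helper name `stopping_condition` and the constant name are assumptions made for this illustration.

```python
MAX_CLARIFY_RUNTIME_SECONDS = 7 * 24 * 60 * 60  # 604800 seconds, the 7-day limit quoted above


def stopping_condition(minutes):
    """Build the StoppingCondition argument, validating the requested runtime."""
    seconds = int(minutes * 60)
    if not 0 < seconds <= MAX_CLARIFY_RUNTIME_SECONDS:
        raise ValueError(
            f"MaxRuntimeInSeconds must be between 1 and {MAX_CLARIFY_RUNTIME_SECONDS}, got {seconds}"
        )
    return {"MaxRuntimeInSeconds": seconds}


print(stopping_condition(60))  # {'MaxRuntimeInSeconds': 3600}
```

The returned dictionary has the same shape as the StoppingCondition value passed to create_processing_job in the cell above.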

[18]:

# Wait for processing job to complete

def wait_for_job(job_name):
    while (
        sagemaker_client.describe_processing_job(ProcessingJobName=job_name)["ProcessingJobStatus"]
        == "InProgress"
    ):
        print(".", end="")
        time.sleep(60)
    print()
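The loop above returns as soon as the job is no longer in progress, whether it succeeded or not. A slightly more defensive variant, sketched below, inspects the final status and raises if the job did not complete. It takes the describe call as a parameter so that it can be exercised offline. The function name `wait_for_terminal_status` and the `fake_describe` stand-in are hypothetical; in the notebook one would pass `sagemaker_client.describe_processing_job` instead.

```python
import time


def wait_for_terminal_status(describe, job_name, poll_seconds=60, sleep=time.sleep):
    """Poll until the job leaves InProgress/Stopping, then return the final description."""
    while True:
        description = describe(ProcessingJobName=job_name)
        status = description["ProcessingJobStatus"]
        if status not in ("InProgress", "Stopping"):
            break
        print(".", end="")
        sleep(poll_seconds)
    print()
    if status != "Completed":
        reason = description.get("FailureReason", "no failure reason reported")
        raise RuntimeError(f"Processing job {job_name} ended as {status}: {reason}")
    return description


# Stand-in for sagemaker_client.describe_processing_job so the sketch runs without AWS access.
_responses = iter(
    [
        {"ProcessingJobStatus": "InProgress"},
        {"ProcessingJobStatus": "Completed"},
    ]
)


def fake_describe(ProcessingJobName):
    return next(_responses)


final = wait_for_terminal_status(fake_describe, "demo-job", poll_seconds=0, sleep=lambda s: None)
print(final["ProcessingJobStatus"])
```

Raising on a failed or stopped job avoids looking for analysis results that were never written.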

[19]:

# The job takes about 10 minutes to run

processing_job_name = create_processing_job(bias_analysis_config_path, bias_analysis_result_path)

wait_for_job(processing_job_name)

.......

Viewing the Bias Report

In Studio, you can view the results under the experiments tab.

Each bias metric has detailed explanations with examples that you can explore.

You could also summarize the results in a handy table!

If you’re not a Studio user yet, you can access the complete analysis report at the following S3 location.

[20]:

bias_analysis_result_path

[20]:

's3://sagemaker-ap-south-1-000000000000/sagemaker/DEMO-sagemaker-clarify-boto3/bias_analysis_output'
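To see which files the job wrote under that prefix, one option is to list the objects with an S3 client. The sketch below splits the URI into bucket and key prefix and then pages through the listing. The helper names `split_s3_uri` and `list_result_keys` are assumptions for this example, and the listing call itself is left commented out because it needs AWS credentials.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Split 's3://bucket/some/prefix' into ('bucket', 'some/prefix')."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"Not an S3 URI: {uri}")
    return parsed.netloc, parsed.path.lstrip("/")


def list_result_keys(s3_client, result_uri):
    """Return all object keys stored under the analysis output prefix."""
    bucket_name, key_prefix = split_s3_uri(result_uri)
    paginator = s3_client.get_paginator("list_objects_v2")
    keys = []
    for page in paginator.paginate(Bucket=bucket_name, Prefix=key_prefix):
        keys.extend(obj["Key"] for obj in page.get("Contents", []))
    return keys


bucket_name, key_prefix = split_s3_uri(
    "s3://sagemaker-ap-south-1-000000000000/sagemaker/DEMO-sagemaker-clarify-boto3/bias_analysis_output"
)
print(bucket_name)
print(key_prefix)

# With AWS credentials available, the listing could then be run as:
# import boto3
# print(list_result_keys(boto3.client("s3"), bias_analysis_result_path))
```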

Explaining Predictions

There are expanding business needs and legislative regulations that require explanations of why a model made the decision it did. SageMaker Clarify uses Kernel SHAP to explain the contribution that each input feature makes to the final decision.
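To make the idea of a per-feature contribution concrete, the sketch below computes exact Shapley values for a tiny made-up model by averaging each feature's marginal contribution over all feature orderings, starting from a baseline. This is not the Kernel SHAP algorithm itself, which approximates these values by sampling because exact enumeration is only feasible for a handful of features. The toy model, the inputs, and the function names are all assumptions for illustration.

```python
from itertools import permutations


def shapley_values(model, x, baseline):
    """Exact Shapley values: average each feature's marginal contribution over all orderings."""
    n = len(x)
    totals = [0.0] * n
    orderings = list(permutations(range(n)))
    for order in orderings:
        current = list(baseline)
        previous_output = model(current)
        for i in order:
            current[i] = x[i]  # switch feature i from its baseline value to its actual value
            output = model(current)
            totals[i] += output - previous_output
            previous_output = output
    return [total / len(orderings) for total in totals]


def toy_model(features):
    """Made-up scoring function with one interaction term (age x hours)."""
    age, hours, gain = features
    return 0.01 * age + 0.02 * hours + 0.001 * gain + 0.0005 * age * hours


x = [50, 60, 1000]       # the record to explain
baseline = [38, 40, 0]   # the reference point that attributions are measured against

phi = shapley_values(toy_model, x, baseline)
for name, value in zip(["age", "hours", "gain"], phi):
    print(f"{name}: {value:+.4f}")
print("sum of attributions:", round(sum(phi), 4))
print("f(x) - f(baseline): ", round(toy_model(x) - toy_model(baseline), 4))
```

The attributions add up to the difference between the model output for the record and the output for the baseline, which is why the choice of baseline matters for interpreting SHAP values.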

Configure a SageMaker Clarify Processing Job Container’s input and output parameters

[21]:

explainability_analysis_config_path = "s3://{}/{}/explainability_analysis_config.json".format(

bucket, prefix

)

explainability_analysis_result_path = "s3://{}/{}/explainability_analysis_output".format(

bucket, prefix

)

Configure analysis config

For our example use case we will be using the following analysis config:

[22]:

!echo

!cat analysis_config/explainability_analysis_config.json

{

"dataset_type": "text/csv",

"headers": ["Target", "Age", "Workclass", "fnlwgt", "Education", "Education-Num", "Marital Status", "Occupation", "Relationship", "Ethnic group", "Sex", "Capital Gain", "Capital Loss", "Hours per week", "Country"],

"label": "Target",

"methods": {

"shap": {

"baseline": [

[38, 2, 189794, 10, 10, 3, 6, 1, 4, 1, 1092, 88, 41, 36]

],

"num_samples": 15,

"agg_method": "mean_abs",

"use_logit": false,

"save_local_shap_values": true

},

"report": {

"name": "report",

"title": "Analysis Report"

}

},

"predictor": {

"model_name": "DEMO-clarify-xgboost-model",

"instance_type": "ml.m5.xlarge",

"initial_instance_count": 1,

"accept_type": "text/csv",

"content_type": "text/csv"

}

}

explainability_analysis_config.json contains the configuration values for computing feature attributions with a SageMaker Clarify job:

dataset_type specifies the format of your dataset. Because this example uses a CSV dataset, the value is text/csv.

headers is the list of column names in the dataset.

label specifies the ground truth label, in this example the “Target” column. The SageMaker Clarify job drops this column and uses the remaining feature columns for the explainability analysis.

methods is the list of methods and their parameters for the analyses and reports.

shap (under methods): This section holds the parameters for the SHAP analysis. The Kernel SHAP algorithm requires a baseline (also known as a background dataset). If none is provided, SageMaker Clarify calculates a baseline automatically using K-means or K-prototypes on the input dataset. The baseline must have the same format as dataset_type, and baseline samples must include only features. baseline should be either an S3 URI to the baseline dataset file or an in-place list of samples. In this case we chose the latter and put the mean of the training dataset in the list. For more details on baseline selection, refer to the SageMaker Clarify documentation.

predictor includes the model configuration. This section is required if the analysis needs predictions from the model.

model_name (under predictor): the name of the model to analyze, here the XGBoost model created earlier, DEMO-clarify-xgboost-model.

instance_type and initial_instance_count (under predictor) specify the instance type and instance count used to run your model during SageMaker Clarify’s processing. The example dataset is small, so a single standard instance is good enough to run this example.

accept_type and content_type (under predictor): accept_type denotes the endpoint response payload format, and content_type denotes the payload format of the request to the endpoint. For the example model created above, both are text/csv.
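A mean baseline like the one in the configuration can be derived from the encoded training rows. The sketch below drops the label column and takes the rounded mean of each remaining column. It uses three hand-copied rows with only a few columns, so the numbers are not the notebook's actual baseline. The helper name `mean_baseline` is hypothetical, and rounding to integers is an assumption based on the integer values shown in the configuration.

```python
from statistics import mean


def mean_baseline(rows, label_index=0):
    """Return a single-sample baseline: the rounded mean of every feature column."""
    feature_rows = [[v for i, v in enumerate(row) if i != label_index] for row in rows]
    return [[round(mean(column)) for column in zip(*feature_rows)]]


# Columns here: Target, Age, Workclass, fnlwgt (a truncated view of three encoded rows).
rows = [
    [0, 39, 5, 77516],
    [0, 50, 4, 83311],
    [0, 38, 2, 215646],
]

baseline = mean_baseline(rows)
print(baseline)  # [[42, 4, 125491]]

# With the notebook's DataFrame, a comparable one-liner might be:
# [training_data.drop(columns="Target").mean().round().astype(int).tolist()]
```

The nested list matches the shape the baseline field expects: a list of samples, each of which is a list of feature values without the label.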

[23]:

# Upload the analysis_config to the concerned S3 path.

S3Uploader.upload(

"analysis_config/explainability_analysis_config.json", "s3://{}/{}".format(bucket, prefix)

)

INFO:botocore.credentials:Found credentials from IAM Role: BaseNotebookInstanceEc2InstanceRole

[23]:

's3://sagemaker-ap-south-1-000000000000/sagemaker/DEMO-sagemaker-clarify-boto3/explainability_analysis_config.json'

Run SageMaker Clarify Processing job

[24]:

# The job takes about 10 minutes to run

processing_job_name = create_processing_job(

explainability_analysis_config_path, explainability_analysis_result_path

)

wait_for_job(processing_job_name)

.............

Viewing the Explainability Report

As with the bias report, you can view the explainability report in Studio under the experiments tab.

The Model Insights tab contains direct links to the report and model insights.

If you’re not a Studio user yet, you can access the complete analysis report at the following S3 location.

[25]:

explainability_analysis_result_path

[25]:

's3://sagemaker-ap-south-1-000000000000/sagemaker/DEMO-sagemaker-clarify-boto3/explainability_analysis_output'

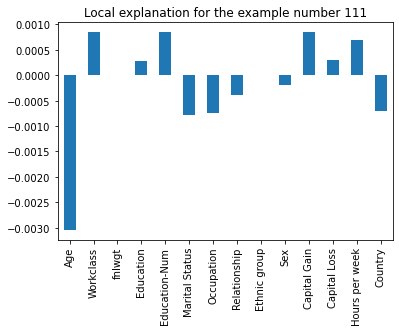

Analysis of local explanations

It is possible to visualize the local explanations for single examples in your dataset, using the results that the Kernel SHAP run for global explanations has already produced.

You can simply load the local explanations stored in your output path, and visualize the explanation (i.e., the impact that the single features have on the prediction of your model) for any single example.
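Besides plotting, it can be useful to rank the features of one record by the size of their contribution. The sketch below sorts feature attributions by absolute value. The attribution numbers are made up for illustration, and the helper name `rank_by_impact` is an assumption; real values come from the out.csv file loaded in the next cell.

```python
def rank_by_impact(attributions, top_n=3):
    """Sort (feature, SHAP value) pairs by absolute value, largest first."""
    ranked = sorted(attributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return ranked[:top_n]


# Made-up attribution values for one record.
example = {"Age": -0.08, "Education-Num": 0.02, "Capital Gain": -0.03, "Sex": -0.01}

top = rank_by_impact(example, top_n=2)
print(top)  # [('Age', -0.08), ('Capital Gain', -0.03)]

# With the DataFrame from the next cell, a row could be passed as:
# rank_by_impact(local_explanations_out.iloc[selected_example].to_dict())
```

The sign of each value shows whether the feature pushed the prediction up or down relative to the baseline, while the absolute value shows how strongly.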

[26]:

local_explanations_out = pd.read_csv(

explainability_analysis_result_path + "/explanations_shap/out.csv"

)

feature_names = [str.replace(c, "_label0", "") for c in local_explanations_out.columns.to_series()]

local_explanations_out.columns = feature_names

selected_example = 111

print(

"Example number:",

selected_example,

"\nwith model prediction:",

sum(local_explanations_out.iloc[selected_example]) > 0,

)

print("\nFeature values -- Label", training_data.iloc[selected_example])

local_explanations_out.iloc[selected_example].plot(

kind="bar", title="Local explanation for the example number " + str(selected_example), rot=90

)

INFO:botocore.credentials:Found credentials from IAM Role: BaseNotebookInstanceEc2InstanceRole

Example number: 111

with model prediction: False

Feature values -- Label Target 0

Age 21

Workclass 2

fnlwgt 199915

Education 15

Education-Num 10

Marital Status 4

Occupation 7

Relationship 3

Ethnic group 4

Sex 0

Capital Gain 0

Capital Loss 0

Hours per week 40

Country 38

Name: 120, dtype: int64

[26]:

<AxesSubplot:title={'center':'Local explanation for the example number 111'}>

Clean Up

Finally, don’t forget to clean up the resources we set up and used for this demo!

[27]:

sagemaker_client.delete_model(ModelName=model_name)

[27]:

{'ResponseMetadata': {'RequestId': '00748684-073e-4201-8186-f9603a8ebe3b',

'HTTPStatusCode': 200,

'HTTPHeaders': {'x-amzn-requestid': '00748684-073e-4201-8186-f9603a8ebe3b',

'content-type': 'application/x-amz-json-1.1',

'content-length': '0',

'date': 'Thu, 19 Jan 2023 01:29:06 GMT'},

'RetryAttempts': 0}}
