Hugging Face Sentiment Classification

This notebook’s CI test result for us-west-2 is as follows. CI test results in other regions can be found at the end of the notebook.

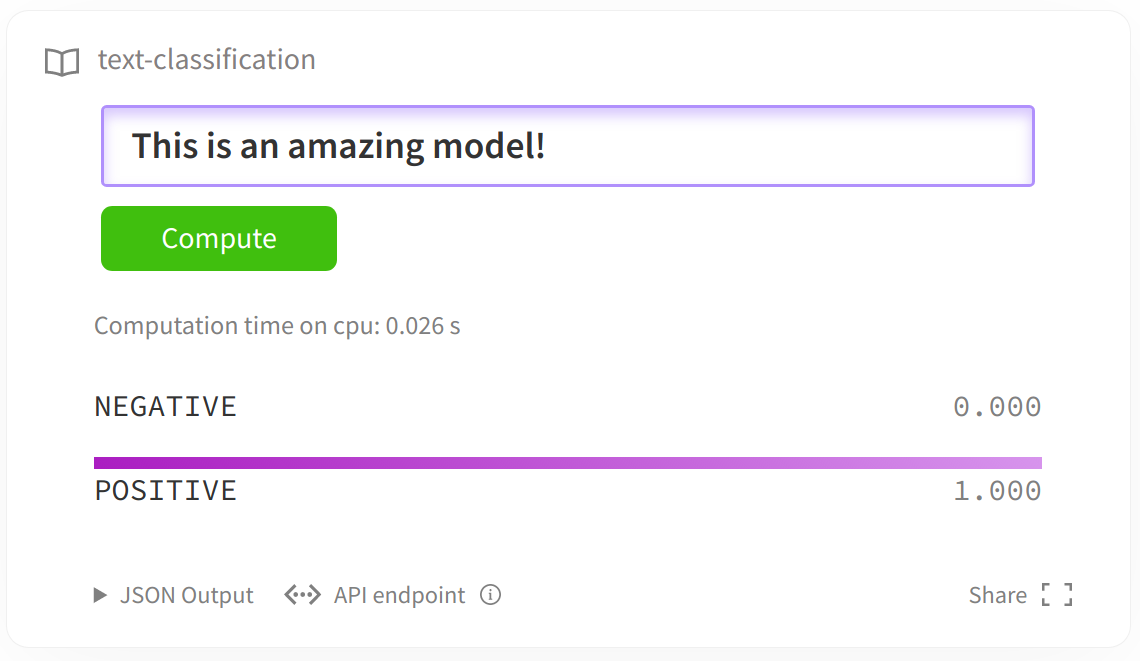

Binary Classification with Trainer and sst2 dataset

Runtime

This notebook takes approximately 45 minutes to run.


Introduction

Welcome to our end-to-end binary text classification example. This notebook uses Hugging Face’s transformers library with the Hugging Face extension of the Amazon SageMaker Python SDK to fine-tune a pre-trained transformer for binary text classification on the sst2 dataset. To get started, we set up the environment with a few prerequisite steps for permissions, configuration, and so on.

This notebook is adapted from Hugging Face’s notebook Huggingface Sagemaker-sdk - Getting Started Demo and provided here courtesy of Hugging Face.


NOTE: You can run this notebook in SageMaker Studio, a SageMaker notebook instance, or your local machine. This notebook was tested in a notebook instance using the conda_pytorch_p36 kernel.

Development environment and permissions

Installation

Note: We install the required libraries from Hugging Face and AWS. You also need PyTorch, if you haven’t installed it already.

[2]:

!pip install "sagemaker" "transformers" "datasets[s3]" "s3fs" --upgrade

Requirement already satisfied: sagemaker in /opt/conda/lib/python3.6/site-packages (2.69.1.dev0)

Collecting sagemaker

Downloading sagemaker-2.86.2.tar.gz (521 kB)

|████████████████████████████████| 521 kB 6.6 MB/s eta 0:00:01

...

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

thinc 8.0.2 requires typing-extensions<4.0.0.0,>=3.7.4.1; python_version < "3.8", but you have typing-extensions 4.1.1 which is incompatible.

spacy 3.0.5 requires typing-extensions<4.0.0.0,>=3.7.4; python_version < "3.8", but you have typing-extensions 4.1.1 which is incompatible.

awscli 1.22.7 requires botocore==1.23.7, but you have botocore 1.23.24 which is incompatible.

Successfully installed aiobotocore-2.1.2 aiohttp-3.8.1 aioitertools-0.10.0 aiosignal-1.2.0 async-timeout-4.0.2 asynctest-0.13.0 boto3-1.20.24 botocore-1.23.24 charset-normalizer-2.0.12 datasets-2.1.0 filelock-3.4.1 frozenlist-1.2.0 fsspec-2022.1.0 huggingface-hub-0.4.0 idna-ssl-1.1.0 importlib-resources-5.4.0 multidict-5.2.0 regex-2022.3.15 responses-0.17.0 s3fs-2022.1.0 sacremoses-0.0.49 sagemaker-2.86.2 tokenizers-0.12.1 tqdm-4.64.0 transformers-4.18.0 typing-extensions-4.1.1 wrapt-1.14.0 xxhash-3.0.0 yarl-1.7.2

Development environment

[3]:

import sagemaker.huggingface

Permissions

If you are going to use SageMaker in a local environment, you need access to an IAM Role with the required permissions for SageMaker. You can read more at SageMaker Roles.

[4]:

import sagemaker

sess = sagemaker.Session()

# The SageMaker session bucket is used for uploading data, models and logs

# SageMaker will automatically create this bucket if it doesn't exist

sagemaker_session_bucket = None

if sagemaker_session_bucket is None and sess is not None:
    # Set to default bucket if a bucket name is not given
    sagemaker_session_bucket = sess.default_bucket()

role = sagemaker.get_execution_role()

sess = sagemaker.Session(default_bucket=sagemaker_session_bucket)

print(f"Role arn: {role}")

print(f"Bucket: {sess.default_bucket()}")

print(f"Region: {sess.boto_region_name}")

Role arn: arn:aws:iam::000000000000:role/ProdBuildSystemStack-ReleaseBuildRoleFB326D49-QK8LUA2UI1IC

Bucket: sagemaker-us-west-2-521695447989

Region: us-west-2

Pre-processing

We use the datasets library to pre-process the sst2 dataset (Stanford Sentiment Treebank). After pre-processing, the dataset is uploaded to the sagemaker_session_bucket for use within the training job. The sst2 dataset consists of 67349 training samples and 1821 testing samples: sentences from movie reviews, each labeled with binary (positive or negative) sentiment.
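The raw files are plain text. Judging from the `str.split(" ", 1)` call in the tokenization step, each line is assumed to have the form `<label> <sentence>`, with 1 for positive and 0 for negative. The minimal pure-Python sketch below parses that format; `parse_sst2_line` is a hypothetical helper and the two sample lines are made up for illustration, not taken from the dataset.

```python
from typing import Tuple


def parse_sst2_line(line: str) -> Tuple[int, str]:
    """Split one '<label> <sentence>' line into (label, sentence) on the first space."""
    label, text = line.rstrip("\n").split(" ", 1)
    return int(label), text


# Hypothetical lines in the assumed file format
sample_lines = [
    "1 a gripping , well-acted drama .\n",
    "0 the plot never comes together .\n",
]
records = [parse_sst2_line(line) for line in sample_lines]
print(records)
# [(1, 'a gripping , well-acted drama .'), (0, 'the plot never comes together .')]
```

Splitting only on the first space keeps any further spaces inside the sentence intact, which is the same reason the pandas code passes a split limit of 1.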

Download the dataset

[5]:

from datasets import Dataset

from transformers import AutoTokenizer

import pandas as pd

# Tokenizer used in pre-processing

tokenizer_name = "distilbert-base-uncased"

# S3 key prefix for the data

s3_prefix = "DEMO-samples/datasets/sst"

# Download the SST2 data from s3

!curl https://sagemaker-sample-files.s3.amazonaws.com/datasets/text/SST2/sst2.test > ./sst2.test

!curl https://sagemaker-sample-files.s3.amazonaws.com/datasets/text/SST2/sst2.train > ./sst2.train

!curl https://sagemaker-sample-files.s3.amazonaws.com/datasets/text/SST2/sst2.val > ./sst2.val

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 189k 100 189k 0 0 58903 0 0:00:03 0:00:03 --:--:-- 58917

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 3716k 100 3716k 0 0 2194k 0 0:00:01 0:00:01 --:--:-- 2195k

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 94916 100 94916 0 0 80806 0 0:00:01 0:00:01 --:--:-- 80848

Tokenize sentences

[6]:

# Download tokenizer

tokenizer = AutoTokenizer.from_pretrained(tokenizer_name)

# Tokenizer helper function

def tokenize(batch):
    return tokenizer(batch["text"], padding="max_length", truncation=True)

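# Load dataset. The sep="delimiter" string is not expected to appear in the data,
# so each raw "<label> <sentence>" line is read whole into the "line" column.

test_df = pd.read_csv("sst2.test", sep="delimiter", header=None, engine="python", names=["line"])

train_df = pd.read_csv("sst2.train", sep="delimiter", header=None, engine="python", names=["line"])

# Split each line on the first space only (n must be a keyword argument in pandas 2.x)

test_df[["label", "text"]] = test_df["line"].str.split(" ", n=1, expand=True)

train_df[["label", "text"]] = train_df["line"].str.split(" ", n=1, expand=True)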

test_df.drop("line", axis=1, inplace=True)

train_df.drop("line", axis=1, inplace=True)

test_df["label"] = pd.to_numeric(test_df["label"], downcast="integer")

train_df["label"] = pd.to_numeric(train_df["label"], downcast="integer")

train_dataset = Dataset.from_pandas(train_df)

test_dataset = Dataset.from_pandas(test_df)

# Tokenize dataset

train_dataset = train_dataset.map(tokenize, batched=True)

test_dataset = test_dataset.map(tokenize, batched=True)

# Set format for pytorch

train_dataset = train_dataset.rename_column("label", "labels")

train_dataset.set_format("torch", columns=["input_ids", "attention_mask", "labels"])

test_dataset = test_dataset.rename_column("label", "labels")

test_dataset.set_format("torch", columns=["input_ids", "attention_mask", "labels"])
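With `padding="max_length"` and `truncation=True`, every example ends up exactly `tokenizer.model_max_length` tokens long (we assume 512 for distilbert-base-uncased), with an attention mask marking real tokens. The sketch below mimics that behavior on already-tokenized ids for a single sentence. It is an illustration, not the tokenizer's implementation: the special-token ids `[CLS]=101`, `[SEP]=102`, `[PAD]=0` are assumed from the uncased BERT vocabulary, the input ids are arbitrary, and word-piece splitting is skipped entirely.

```python
from typing import Dict, List

# Assumed special-token ids for the uncased BERT vocabulary used by distilbert-base-uncased
CLS_ID, SEP_ID, PAD_ID = 101, 102, 0


def encode_fixed_length(token_ids: List[int], max_length: int) -> Dict[str, List[int]]:
    """Mimic padding="max_length", truncation=True for one sentence."""
    # Truncate the content so that [CLS] + content + [SEP] fits in max_length
    content = token_ids[: max_length - 2]
    input_ids = [CLS_ID] + content + [SEP_ID]
    # Real tokens get attention 1; padding positions get 0
    attention_mask = [1] * len(input_ids)
    padding = max_length - len(input_ids)
    input_ids += [PAD_ID] * padding
    attention_mask += [0] * padding
    return {"input_ids": input_ids, "attention_mask": attention_mask}


short = encode_fixed_length([2023, 3185], max_length=8)
print(short["input_ids"])       # [101, 2023, 3185, 102, 0, 0, 0, 0]
print(short["attention_mask"])  # [1, 1, 1, 1, 0, 0, 0, 0]

long = encode_fixed_length(list(range(1000, 1010)), max_length=6)
print(long["input_ids"])        # [101, 1000, 1001, 1002, 1003, 102]
```

Padding every example to the maximum length keeps batches rectangular without a data collator, at the cost of extra compute on short sentences.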

Upload data to sagemaker_session_bucket

After processing the datasets, we use the FileSystem integration to upload the dataset to S3.

[7]:

import botocore

from datasets.filesystems import S3FileSystem

s3 = S3FileSystem()

# save train_dataset to s3

training_input_path = f"s3://{sess.default_bucket()}/{s3_prefix}/train"

train_dataset.save_to_disk(training_input_path, fs=s3)

# save test_dataset to s3

test_input_path = f"s3://{sess.default_bucket()}/{s3_prefix}/test"

test_dataset.save_to_disk(test_input_path, fs=s3)
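The two S3 paths built above become the input channels passed to `fit()` later on, and each channel name (`train`, `test`) later surfaces inside the container as an `SM_CHANNEL_<NAME>` variable. The sketch below builds that `{channel: S3 URI}` mapping in one place; `channel_uris` is a hypothetical helper and `my-example-bucket` is a placeholder bucket name.

```python
from typing import Dict, Iterable


def channel_uris(bucket: str, prefix: str, channels: Iterable[str]) -> Dict[str, str]:
    """Build the {channel: S3 URI} mapping that an estimator's fit() receives."""
    prefix = prefix.strip("/")  # avoid double slashes in the URI
    return {name: f"s3://{bucket}/{prefix}/{name}" for name in channels}


inputs = channel_uris("my-example-bucket", "DEMO-samples/datasets/sst", ["train", "test"])
print(inputs)
# {'train': 's3://my-example-bucket/DEMO-samples/datasets/sst/train',
#  'test': 's3://my-example-bucket/DEMO-samples/datasets/sst/test'}
```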

Fine-tune the model and start a SageMaker training job

To create a SageMaker training job, we need a HuggingFace Estimator. The Estimator handles end-to-end Amazon SageMaker training and deployment tasks. In an Estimator, we define the fine-tuning script to use as the entry_point, the instance_type to train on, the hyperparameters to pass in, and so on:

hf_estimator = HuggingFace(
    entry_point="train.py",
    source_dir="./scripts",
    base_job_name="huggingface-sdk-extension",
    instance_type="ml.p3.2xlarge",
    instance_count=1,
    transformers_version="4.12",
    pytorch_version="1.9",
    py_version="py38",
    role=role,
    hyperparameters={
        "epochs": 1,
        "train_batch_size": 32,
        "model_name": "distilbert-base-uncased",
    },
)

When we create a SageMaker training job, SageMaker takes care of starting and managing all the required EC2 instances for us with the Hugging Face container, uploads the provided fine-tuning script train.py, and downloads the data from the sagemaker_session_bucket into the container at /opt/ml/input/data. Then, it starts the training job by running:

/opt/conda/bin/python train.py --epochs 1 --model_name distilbert-base-uncased --train_batch_size 32

The hyperparameters defined in the HuggingFace estimator are passed to the script as named command-line arguments.
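To make the round trip concrete, the sketch below flattens a hyperparameter dictionary into `--name value` pairs and parses them back with `argparse`, the same way `train.py` reads them. `to_cli_args` is a hypothetical helper, not SageMaker's implementation; it sorts names only so the output is deterministic, which happens to reproduce the argument order in the command shown above.

```python
import argparse
from typing import Dict, List


def to_cli_args(hyperparameters: Dict[str, object]) -> List[str]:
    """Flatten a hyperparameter dict into --name value strings (sorted for determinism)."""
    args: List[str] = []
    for name, value in sorted(hyperparameters.items()):
        args += [f"--{name}", str(value)]
    return args


hyperparameters = {"epochs": 1, "train_batch_size": 32, "model_name": "distilbert-base-uncased"}
cli = to_cli_args(hyperparameters)
print(" ".join(cli))
# --epochs 1 --model_name distilbert-base-uncased --train_batch_size 32

# On the receiving side, argparse turns the strings back into typed values,
# mirroring the first add_argument calls in train.py.
parser = argparse.ArgumentParser()
parser.add_argument("--epochs", type=int, default=3)
parser.add_argument("--train_batch_size", type=int, default=32)
parser.add_argument("--model_name", type=str)
parsed, _ = parser.parse_known_args(cli)
print(parsed.epochs, parsed.train_batch_size, parsed.model_name)
# 1 32 distilbert-base-uncased
```

Because every value travels as a string, the `type=` argument of each `add_argument` call is what restores integers and floats on the script side.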

SageMaker provides useful properties about the training environment through various environment variables, including the following:

SM_MODEL_DIR: A string representing the path where the training job writes the model artifacts. After training, artifacts in this directory are uploaded to S3 for model hosting.

SM_NUM_GPUS: An integer representing the number of GPUs available to the host.

SM_CHANNEL_XXXX: A string representing the path to the directory that contains the input data for the specified channel. For example, if you specify two input channels named train and test in the Hugging Face estimator’s fit() call, the environment variables SM_CHANNEL_TRAIN and SM_CHANNEL_TEST are set.
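`train.py` reads these variables with `os.environ[...]`, which raises a `KeyError` when the script runs outside SageMaker. The sketch below shows one way to collect the same settings with fallbacks for quick local smoke tests. `sagemaker_paths` is a hypothetical helper, and the fallback paths are assumptions based on the `/opt/ml/model` and `/opt/ml/input/data` locations that appear in this notebook.

```python
import os
from typing import Dict, Iterable, Union


def sagemaker_paths(channels: Iterable[str] = ("train", "test")) -> Dict[str, Union[str, int]]:
    """Collect SageMaker training-environment settings.

    Falls back to assumed conventional container paths when a variable is unset,
    e.g. when the script is smoke-tested outside SageMaker.
    """
    settings: Dict[str, Union[str, int]] = {
        "model_dir": os.environ.get("SM_MODEL_DIR", "/opt/ml/model"),
        # Environment variables are strings, so convert the GPU count explicitly
        "num_gpus": int(os.environ.get("SM_NUM_GPUS", "0")),
    }
    for name in channels:
        # Channel "train" -> variable SM_CHANNEL_TRAIN -> fallback /opt/ml/input/data/train
        env_var = f"SM_CHANNEL_{name.upper()}"
        settings[name] = os.environ.get(env_var, f"/opt/ml/input/data/{name}")
    return settings


# Simulate one channel being set, as SageMaker would for fit({"train": ...})
os.environ["SM_CHANNEL_TRAIN"] = "/tmp/sst/train"
print(sagemaker_paths())
```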

To run the training job locally, set instance_type="local", or instance_type="local_gpu" to train on a local GPU.

Note: local mode is not supported in SageMaker Studio.

[8]:

!pygmentize ./scripts/train.py

from transformers import AutoModelForSequenceClassification, Trainer, TrainingArguments, AutoTokenizer
from sklearn.metrics import accuracy_score, precision_recall_fscore_support
from datasets import load_from_disk
import logging
import sys
import argparse
import os

if __name__ == "__main__":

    parser = argparse.ArgumentParser()

    # Hyperparameters sent by the client are passed as command-line arguments to the script
    parser.add_argument("--epochs", type=int, default=3)
    parser.add_argument("--train_batch_size", type=int, default=32)
    parser.add_argument("--eval_batch_size", type=int, default=64)
    parser.add_argument("--warmup_steps", type=int, default=500)
    parser.add_argument("--model_name", type=str)
    parser.add_argument("--learning_rate", type=float, default=5e-5)

    # Data, model, and output directories, read from SageMaker's environment variables
    parser.add_argument("--output_data_dir", type=str, default=os.environ["SM_OUTPUT_DATA_DIR"])
    parser.add_argument("--model_dir", type=str, default=os.environ["SM_MODEL_DIR"])
    parser.add_argument("--n_gpus", type=int, default=os.environ["SM_NUM_GPUS"])
    parser.add_argument("--training_dir", type=str, default=os.environ["SM_CHANNEL_TRAIN"])
    parser.add_argument("--test_dir", type=str, default=os.environ["SM_CHANNEL_TEST"])

    args, _ = parser.parse_known_args()

    # Set up logging
    logger = logging.getLogger(__name__)

    logging.basicConfig(
        level=logging.getLevelName("INFO"),
        handlers=[logging.StreamHandler(sys.stdout)],
        format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
    )

    # Load datasets
    train_dataset = load_from_disk(args.training_dir)
    test_dataset = load_from_disk(args.test_dir)

    logger.info(f"Loaded train_dataset length is: {len(train_dataset)}")
    logger.info(f"Loaded test_dataset length is: {len(test_dataset)}")

    # Compute metrics function for binary classification
    def compute_metrics(pred):
        labels = pred.label_ids
        preds = pred.predictions.argmax(-1)
        precision, recall, f1, _ = precision_recall_fscore_support(labels, preds, average="binary")
        acc = accuracy_score(labels, preds)
        return {"accuracy": acc, "f1": f1, "precision": precision, "recall": recall}

    # Download model and tokenizer from the Hugging Face Model Hub
    model = AutoModelForSequenceClassification.from_pretrained(args.model_name)
    tokenizer = AutoTokenizer.from_pretrained(args.model_name)

    # Define training args
    training_args = TrainingArguments(
        output_dir=args.model_dir,
        num_train_epochs=args.epochs,
        per_device_train_batch_size=args.train_batch_size,
        per_device_eval_batch_size=args.eval_batch_size,
        warmup_steps=args.warmup_steps,
        evaluation_strategy="epoch",
        logging_dir=f"{args.output_data_dir}/logs",
        learning_rate=args.learning_rate,
    )

    # Create Trainer instance
    trainer = Trainer(
        model=model,
        args=training_args,
        compute_metrics=compute_metrics,
        train_dataset=train_dataset,
        eval_dataset=test_dataset,
        tokenizer=tokenizer,
    )

    # Train model
    trainer.train()

    # Evaluate model
    eval_result = trainer.evaluate(eval_dataset=test_dataset)

    # Write eval results to a file, which can be accessed later in the S3 output
    with open(os.path.join(args.output_data_dir, "eval_results.txt"), "w") as writer:
        print("***** Eval results *****")
        for key, value in sorted(eval_result.items()):
            writer.write(f"{key} = {value}\n")

    # Save the model to SM_MODEL_DIR; SageMaker uploads its contents to S3 after training
    trainer.save_model(args.model_dir)
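The `compute_metrics` function above delegates to scikit-learn with `average="binary"`, which treats label 1 as the positive class. As a sanity check on what those four numbers mean, here is a dependency-free sketch computing them from confusion-matrix counts. `binary_metrics` is a hypothetical helper, the labels and predictions are made up, and it returns 0.0 whenever a ratio is undefined.

```python
from typing import Dict, Sequence


def binary_metrics(labels: Sequence[int], preds: Sequence[int]) -> Dict[str, float]:
    """Accuracy, precision, recall and F1 with label 1 as the positive class."""
    pairs = list(zip(labels, preds))
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)  # true positives
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)  # false positives
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)  # false negatives
    correct = sum(1 for y, p in pairs if y == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "f1": f1, "precision": precision, "recall": recall}


# Hypothetical labels and predictions for six sentences
metrics = binary_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0])
print(metrics)
```

Here there are 2 true positives, 1 false positive and 1 false negative, so precision, recall and F1 all come out to 2/3, and 4 of 6 predictions are correct.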

Create an Estimator and start a training job

[9]:

from sagemaker.huggingface import HuggingFace

# Hyperparameters which are passed into the training job

hyperparameters = {"epochs": 1, "train_batch_size": 32, "model_name": "distilbert-base-uncased"}

[10]:

hf_estimator = HuggingFace(

entry_point="train.py",

source_dir="./scripts",

instance_type="ml.p3.2xlarge",

instance_count=1,

role=role,

transformers_version="4.12",

pytorch_version="1.9",

py_version="py38",

hyperparameters=hyperparameters,

)

[11]:

# Start the training job with the uploaded dataset as input

hf_estimator.fit({"train": training_input_path, "test": test_input_path})

2022-04-18 00:28:15 Starting - Starting the training job...ProfilerReport-1650241694: InProgress

...

2022-04-18 00:28:58 Starting - Preparing the instances for training......

2022-04-18 00:30:07 Downloading - Downloading input data

2022-04-18 00:30:07 Training - Downloading the training image................................

bash: cannot set terminal process group (-1): Inappropriate ioctl for device

bash: no job control in this shell

2022-04-18 00:35:22,153 sagemaker-training-toolkit INFO Imported framework sagemaker_pytorch_container.training

2022-04-18 00:35:22,181 sagemaker_pytorch_container.training INFO Block until all host DNS lookups succeed.

2022-04-18 00:35:22,187 sagemaker_pytorch_container.training INFO Invoking user training script.

2022-04-18 00:35:22,707 sagemaker-training-toolkit INFO Invoking user script

...

***** Running Evaluation *****

***** Running Evaluation *****

Num examples = 1821

Num examples = 1821

Batch size = 64

Batch size = 64


100%|██████████| 29/29 [00:09<00:00, 3.11it/s]

***** Eval results *****

Saving model checkpoint to /opt/ml/model

Saving model checkpoint to /opt/ml/model

Configuration saved in /opt/ml/model/config.json

Configuration saved in /opt/ml/model/config.json

Model weights saved in /opt/ml/model/pytorch_model.bin

Model weights saved in /opt/ml/model/pytorch_model.bin

tokenizer config file saved in /opt/ml/model/tokenizer_config.json

tokenizer config file saved in /opt/ml/model/tokenizer_config.json

Special tokens file saved in /opt/ml/model/special_tokens_map.json

Special tokens file saved in /opt/ml/model/special_tokens_map.json

2022-04-18 00:53:15,602 sagemaker-training-toolkit INFO Reporting training SUCCESS

2022-04-18 00:53:15,602 asyncio WARNING Loop <_UnixSelectorEventLoop running=False closed=True debug=False> that handles pid 24 is closed

2022-04-18 00:53:44 Uploading - Uploading generated training model

2022-04-18 00:59:55 Completed - Training job completed

Training seconds: 1803

Billable seconds: 1803
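The figures in the log can be cross-checked with simple arithmetic. The sketch below assumes a single GPU (so `per_device_train_batch_size` is the effective batch size) and that the Trainer keeps the last partial batch, hence the ceiling division. It uses only numbers that appear in this notebook.

```python
import math

train_examples = 67349   # training samples stated in the Pre-processing section
test_examples = 1821     # "Num examples = 1821" in the evaluation log
train_batch_size = 32    # hyperparameters["train_batch_size"]
eval_batch_size = 64     # train.py default for --eval_batch_size
billable_seconds = 1803  # "Billable seconds" reported above

steps_per_epoch = math.ceil(train_examples / train_batch_size)
eval_steps = math.ceil(test_examples / eval_batch_size)

print(steps_per_epoch)            # 2105 optimizer steps for the single epoch
print(eval_steps)                 # 29, the batch count in the evaluation progress bar
print(billable_seconds / 60)      # 30.05 minutes of billed training time
```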

Deploy the endpoint

To deploy the endpoint, call deploy() on the HuggingFace estimator object, passing in the desired number of instances and instance type.

[12]:

predictor = hf_estimator.deploy(1, "ml.p3.2xlarge")

--------------!

Then use the returned predictor object to perform inference.

[13]:

sentiment_input = {"inputs": "I love using the new Inference DLC."}

predictor.predict(sentiment_input)

[13]:

[{'label': 'LABEL_1', 'score': 0.9633631706237793}]

We see that the fine-tuned model classifies the test sentence “I love using the new Inference DLC.” as having positive sentiment (LABEL_1, corresponding to label 1 in the training data) with a probability of about 96%.
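The endpoint reports the generic names `LABEL_0` and `LABEL_1` because no label names were configured during fine-tuning. The sketch below maps them to readable sentiment words on the client side, assuming the SST-2 convention used in the training files (0 = negative, 1 = positive). `readable` and `LABEL_NAMES` are hypothetical names; the response is copied from the `predict()` output above.

```python
from typing import Dict, List

# Assumed mapping from the training labels: 0 = negative, 1 = positive
LABEL_NAMES = {"LABEL_0": "negative", "LABEL_1": "positive"}


def readable(predictions: List[Dict[str, object]]) -> List[Dict[str, object]]:
    """Replace generic LABEL_i names with sentiment words, leaving unknown labels as-is."""
    return [
        {"label": LABEL_NAMES.get(p["label"], p["label"]), "score": p["score"]}
        for p in predictions
    ]


# Response shape copied from the predict() output above
response = [{"label": "LABEL_1", "score": 0.9633631706237793}]
print(readable(response))
# [{'label': 'positive', 'score': 0.9633631706237793}]
```

An alternative is to bake the names into the model itself by passing `id2label` and `label2id` to `from_pretrained` in the training script, so the endpoint returns readable labels directly.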

Finally, delete the endpoint.

[14]:

predictor.delete_endpoint()

Extras

Estimator Parameters

[15]:

print(f"Container image used for training job: \n{hf_estimator.image_uri}\n")

print(f"S3 URI where the trained model is located: \n{hf_estimator.model_data}\n")

print(f"Latest training job name for this estimator: \n{hf_estimator.latest_training_job.name}\n")

Container image used for training job:

None

S3 URI where the trained model is located:

s3://sagemaker-us-west-2-000000000000/huggingface-pytorch-training-2022-04-18-00-28-14-084/output/model.tar.gz

Latest training job name for this estimator:

huggingface-pytorch-training-2022-04-18-00-28-14-084

[16]:

hf_estimator.sagemaker_session.logs_for_job(hf_estimator.latest_training_job.name)

2022-04-18 01:00:05 Starting - Preparing the instances for training

2022-04-18 01:00:05 Downloading - Downloading input data

2022-04-18 01:00:05 Training - Training image download completed. Training in progress.

2022-04-18 01:00:05 Uploading - Uploading generated training model

2022-04-18 01:00:05 Completed - Training job completed

bash: cannot set terminal process group (-1): Inappropriate ioctl for device

bash: no job control in this shell

2022-04-18 00:35:22,153 sagemaker-training-toolkit INFO Imported framework sagemaker_pytorch_container.training

2022-04-18 00:35:22,181 sagemaker_pytorch_container.training INFO Block until all host DNS lookups succeed.

2022-04-18 00:35:22,187 sagemaker_pytorch_container.training INFO Invoking user training script.

2022-04-18 00:35:22,707 sagemaker-training-toolkit INFO Invoking user script

...

***** Running Evaluation *****

***** Running Evaluation *****

Num examples = 1821

Num examples = 1821

Batch size = 64

Batch size = 64


100%|██████████| 29/29 [00:09<00:00, 3.11it/s]

***** Eval results *****

Saving model checkpoint to /opt/ml/model

Saving model checkpoint to /opt/ml/model

Configuration saved in /opt/ml/model/config.json

Configuration saved in /opt/ml/model/config.json

Model weights saved in /opt/ml/model/pytorch_model.bin

Model weights saved in /opt/ml/model/pytorch_model.bin

tokenizer config file saved in /opt/ml/model/tokenizer_config.json

tokenizer config file saved in /opt/ml/model/tokenizer_config.json

Special tokens file saved in /opt/ml/model/special_tokens_map.json

Special tokens file saved in /opt/ml/model/special_tokens_map.json

2022-04-18 00:53:15,602 sagemaker-training-toolkit INFO Reporting training SUCCESS

2022-04-18 00:53:15,602 asyncio WARNING Loop <_UnixSelectorEventLoop running=False closed=True debug=False> that handles pid 24 is closed

Attach a previous training job to an estimator

In SageMaker, you can attach a previous training job to an estimator, for example to retrieve its results and model artifacts or to deploy the trained model without retraining.

[17]:

from sagemaker.estimator import Estimator

# Uncomment the following lines and supply your training job name

# old_training_job_name = "<your-training-job-name>"

# hf_estimator_loaded = Estimator.attach(old_training_job_name)

# hf_estimator_loaded.model_data
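If you only have a model artifact URI, the training job name needed for `attach()` can often be read from it. In this notebook the artifact lives at `s3://<bucket>/<job-name>/output/model.tar.gz`; the sketch below assumes that layout and extracts the job name. `job_name_from_model_data` is a hypothetical helper and raises `ValueError` for URIs that do not match the assumed layout.

```python
def job_name_from_model_data(model_data: str) -> str:
    """Extract the training job name from s3://<bucket>/.../<job-name>/output/model.tar.gz."""
    if not model_data.startswith("s3://"):
        raise ValueError(f"not an S3 URI: {model_data}")
    parts = model_data[len("s3://"):].split("/")
    if len(parts) < 4 or parts[-2:] != ["output", "model.tar.gz"]:
        raise ValueError(f"unexpected artifact layout: {model_data}")
    # The job name is the path component just before "output"
    return parts[-3]


model_data = (
    "s3://sagemaker-us-west-2-000000000000/"
    "huggingface-pytorch-training-2022-04-18-00-28-14-084/output/model.tar.gz"
)
job = job_name_from_model_data(model_data)
print(job)  # huggingface-pytorch-training-2022-04-18-00-28-14-084
```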

Notebook CI Test Results

This notebook was tested in multiple regions. The test results are as follows, except for us-west-2, which is shown at the top of the notebook.