[ ]:

# Install dependencies

!apt-get update

!apt-get install ffmpeg libsm6 libxext6 -y

!pip install -q smdebug

!pip install -q seaborn

!pip install -q plotly

!pip install -q opencv-python

!pip install -q shap

!pip install -q bokeh

!pip install -q imageio

Using SageMaker Neo to Compile a Tensorflow U-Net Model

This notebook’s CI test result for us-west-2 is as follows. CI test results in other regions can be found at the end of the notebook.

SageMaker Neo makes it easy to compile pre-trained TensorFlow models and build an inference optimized container without the need for any custom model serving or inference code.

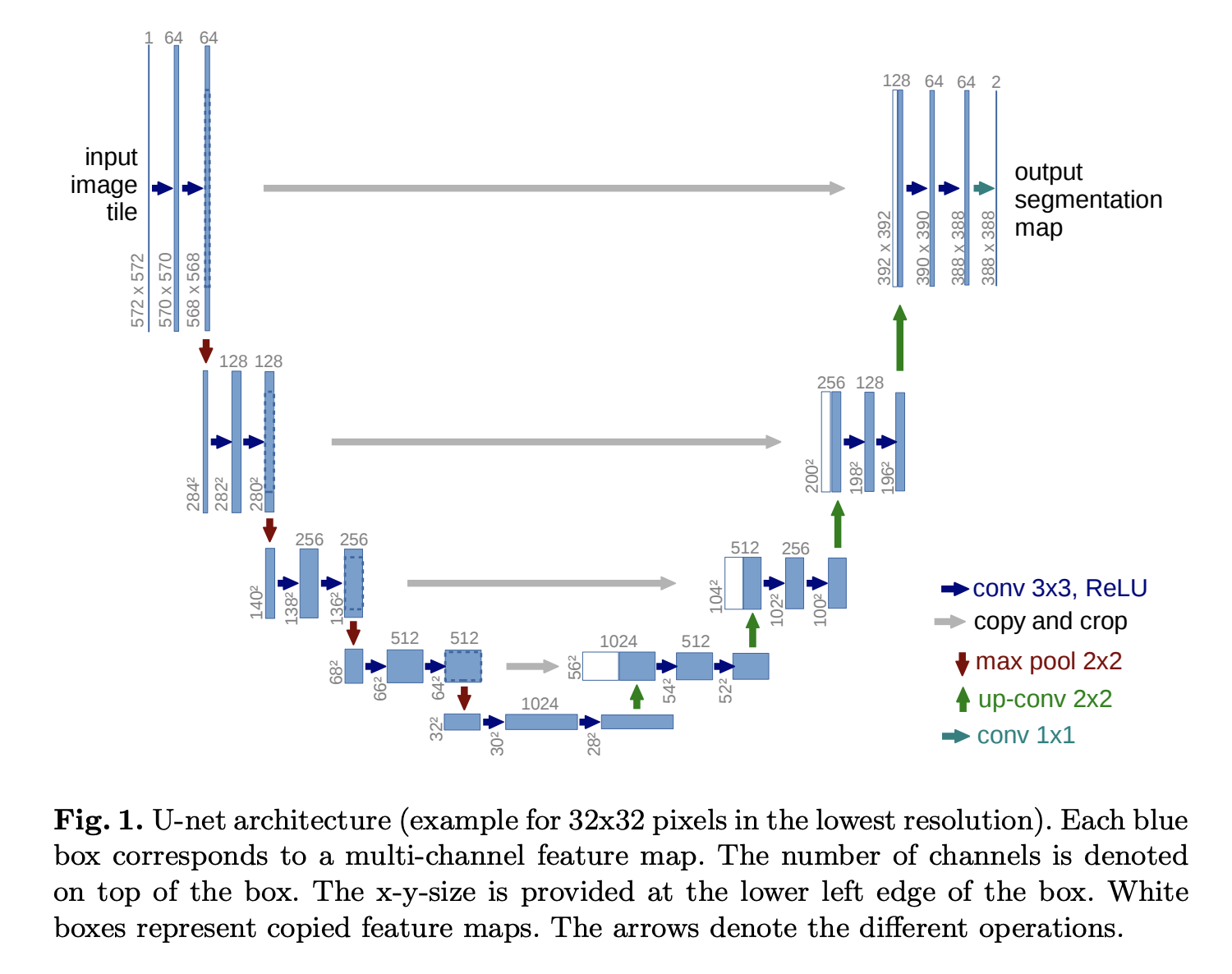

U-Net is an architecture for semantic segmentation. It’s a popular model for biological images including Ultrasound, Microscopy, CT, MRI and more.

In this example, we will show how deploy a pre-trained U-Net model to a SageMaker Endpoint with Neo compilation using the SageMaker Python SDK, and then use the models to perform inference requests. We also provide a performance comparison so you can see the benefits of model compilation.

Setup

First, we need to ensure we have SageMaker Python SDK 1.x and Tensorflow 1.15.x. Then, import necessary Python packages.

[ ]:

!pip install -U --quiet --upgrade "sagemaker"

!pip install -U --quiet "tensorflow==1.15.3"

[ ]:

import tarfile

import numpy as np

import sagemaker

import time

from sagemaker.utils import name_from_base

Next, we’ll get the IAM execution role and a few other SageMaker specific variables from our notebook environment, so that SageMaker can access resources in your AWS account later in the example.

[ ]:

from sagemaker import get_execution_role

from sagemaker.session import Session

role = get_execution_role()

sess = Session()

region = sess.boto_region_name

bucket = sess.default_bucket()

SageMaker Neo supports Tensorflow 1.15.x. Check your version of Tensorflow to prevent downstream framework errors.

[ ]:

import tensorflow as tf

print(tf.__version__) # This notebook runs on TensorFlow 1.15.x or earlier

Download U-Net Model

The SageMaker Neo TensorFlow Serving Container works with any model stored in TensorFlow’s SavedModel format. This could be the output of your own training job or a model trained elsewhere. For this example, we will use a pre-trained version of the U-Net model based on this repo.

[ ]:

model_name = "unet_medical"

export_path = "export"

model_archive_name = "unet-medical.tar.gz"

model_archive_url = "https://sagemaker-neo-artifacts.s3.us-east-2.amazonaws.com/{}".format(

model_archive_name

)

[ ]:

!wget {model_archive_url}

The pre-trained model and its artifacts are saved in a compressed tar file (.tar.gz) so unzip first with:

[ ]:

!tar -xvzf unet-medical.tar.gz

After downloading the model, we can inspect it using TensorFlow’s saved_model_cli command. In the command output, you should see

MetaGraphDef with tag-set: 'serve' contains the following SignatureDefs:

signature_def['serving_default']:

...

The command output should also show details of the model inputs and outputs.

[ ]:

import os

model_path = os.path.join(export_path, "Servo/1")

!saved_model_cli show --all --dir {model_path}

Next we need to create a model archive file containing the exported model.

Upload the model archive file to S3

We now have a suitable model archive ready in our notebook. We need to upload it to S3 before we can create a SageMaker Model that. We’ll use the SageMaker Python SDK to handle the upload.

[ ]:

model_data = Session().upload_data(path=model_archive_name, key_prefix="model")

print("model uploaded to: {}".format(model_data))

Create a SageMaker Model and Endpoint

Now that the model archive is in S3, we can create an unoptimized Model and deploy it to an Endpoint.

[ ]:

from sagemaker.tensorflow.serving import Model

instance_type = "ml.c4.xlarge"

framework = "TENSORFLOW"

framework_version = "1.15.3"

[ ]:

sm_model = Model(model_data=model_data, framework_version=framework_version, role=role)

uncompiled_predictor = sm_model.deploy(initial_instance_count=1, instance_type=instance_type)

Make predictions using the endpoint

The endpoint is now up and running, and ready to handle inference requests. The deploy call above returned a predictor object. The predict method of this object handles sending requests to the endpoint. It also automatically handles JSON serialization of our input arguments, and JSON deserialization of the prediction results.

We’ll use this sample image:

[ ]:

sample_img_fname = "cell-4.png"

sample_img_url = "https://sagemaker-neo-artifacts.s3.us-east-2.amazonaws.com/{}".format(

sample_img_fname

)

[ ]:

!wget {sample_img_url}

[ ]:

# read the image file into a tensor (numpy array)

import cv2

image = cv2.imread(sample_img_fname)

original_shape = image.shape

[ ]:

import matplotlib.pyplot as plt

plt.imshow(image, cmap="gray", interpolation="none")

plt.show()

[ ]:

image = np.resize(image, (256, 256, 3))

image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

image = np.asarray(image)

image = np.expand_dims(image, axis=0)

[ ]:

start_time = time.time()

# get a prediction from the endpoint

# the image input is automatically converted to a JSON request.

# the JSON response from the endpoint is returned as a python dict

result = uncompiled_predictor.predict(image)

print("Prediction took %.2f seconds" % (time.time() - start_time))

[ ]:

# show the predicted segmentation image

cutoff = 0.4

segmentation_img = np.squeeze(np.asarray(result["predictions"])) > cutoff

segmentation_img = segmentation_img.astype(np.uint8)

segmentation_img = np.resize(segmentation_img, (original_shape[0], original_shape[1]))

plt.imshow(segmentation_img, "gray")

plt.show()

Uncompiled Predictor Performance

[ ]:

shape_input = np.random.rand(1, 256, 256, 3)

uncompiled_results = []

for _ in range(100):

start = time.time()

uncompiled_predictor.predict(image)

uncompiled_results.append((time.time() - start) * 1000)

print("\nPredictions for un-compiled model: \n")

print("\nP95: " + str(np.percentile(uncompiled_results, 95)) + " ms\n")

print("P90: " + str(np.percentile(uncompiled_results, 90)) + " ms\n")

print("P50: " + str(np.percentile(uncompiled_results, 50)) + " ms\n")

print("Average: " + str(np.average(uncompiled_results)) + " ms\n")

Compile model using SageMaker Neo

[ ]:

# Replace the value of data_shape below and

# specify the name & shape of the expected inputs for your trained model in JSON

# Note that -1 is replaced with 1 for the batch size placeholder

data_shape = {"inputs": [1, 224, 224, 3]}

instance_family = "ml_c4"

compilation_job_name = name_from_base("medical-tf-Neo")

# output path for compiled model artifact

compiled_model_path = "s3://{}/{}/output".format(bucket, compilation_job_name)

[ ]:

optimized_estimator = sm_model.compile(

target_instance_family=instance_family,

input_shape=data_shape,

job_name=compilation_job_name,

role=role,

framework=framework.lower(),

framework_version=framework_version,

output_path=compiled_model_path,

)

Create Optimized Endpoint

[ ]:

optimized_predictor = optimized_estimator.deploy(

initial_instance_count=1, instance_type=instance_type

)

[ ]:

start_time = time.time()

# get a prediction from the endpoint

# the image input is automatically converted to a JSON request.

# the JSON response from the endpoint is returned as a python dict

result = optimized_predictor.predict(image)

print("Prediction took %.2f seconds" % (time.time() - start_time))

Compiled Predictor Performance

[ ]:

compiled_results = []

test_input = {"instances": np.asarray(shape_input).tolist()}

# Warmup inference.

optimized_predictor.predict(image)

# Inferencing 100 times.

for _ in range(100):

start = time.time()

optimized_predictor.predict(image)

compiled_results.append((time.time() - start) * 1000)

print("\nPredictions for compiled model: \n")

print("\nP95: " + str(np.percentile(compiled_results, 95)) + " ms\n")

print("P90: " + str(np.percentile(compiled_results, 90)) + " ms\n")

print("P50: " + str(np.percentile(compiled_results, 50)) + " ms\n")

print("Average: " + str(np.average(compiled_results)) + " ms\n")

Performance Comparison

Here we compare inference speed up provided by SageMaker Neo. P90 is 90th percentile latency. We add this because it represents the tail of the latency distribution (worst case). More information on latency percentiles here.

[ ]:

p90 = np.percentile(uncompiled_results, 90) / np.percentile(compiled_results, 90)

p50 = np.percentile(uncompiled_results, 50) / np.percentile(compiled_results, 50)

avg = np.average(uncompiled_results) / np.average(compiled_results)

print("P90 Speedup: %.2f" % p90)

print("P50 Speedup: %.2f" % p50)

print("Average Speedup: %.2f" % avg)

Additional Information

Cleaning up

To avoid incurring charges to your AWS account for the resources used in this tutorial, you need to delete the SageMaker Endpoint.

[ ]:

uncompiled_predictor.delete_endpoint()

[ ]:

optimized_predictor.delete_endpoint()

Notebook CI Test Results

This notebook was tested in multiple regions. The test results are as follows, except for us-west-2 which is shown at the top of the notebook.