Amazon Augmented AI (A2I) Integrated with AWS Marketplace ML Models


For some payloads, a machine learning (ML) model’s predictions are not confident enough, and you want a human to review them. Training your own model can also be complicated, time-consuming, and expensive. This is where AWS Marketplace and Amazon Augmented AI (Amazon A2I) come in. By combining a pretrained ML model from AWS Marketplace with Amazon A2I, you can quickly reap the benefits of a pretrained model while validating and augmenting its predictions with human intelligence.

AWS Marketplace contains over 400 pretrained ML models. Some models are general purpose. For example, the GluonCV SSD Object Detector can detect objects in an image and place bounding boxes around them. AWS Marketplace also offers many purpose-built models, such as Background Noise Classifier, Hard Hat Detector for Worker Safety, and Person in Water.

Amazon A2I provides a human-in-loop workflow to review ML predictions. Its configurable human-review workflow solution and customizable user-review console enable you to focus on ML tasks and increase the accuracy of the predictions with human input.

Overview

In this notebook, you will use a pretrained AWS Marketplace machine learning model with Amazon A2I to detect objects in an image and to trigger a human-in-loop workflow in which a reviewer can review, update, and add labeled objects for that image. You can also specify configurable threshold criteria for triggering the human-in-loop workflow in Amazon A2I. For example, you can trigger a human-in-loop workflow if no objects are detected with a confidence score of 90% or greater.
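The threshold criterion described above can be sketched as a small predicate. This is a minimal illustration rather than part of the notebook: the function name needs_human_review and the sample predictions are hypothetical, and it assumes each prediction is a dict with a score between 0 and 1.

```python
def needs_human_review(predictions, threshold=0.90):
    """Return True when no prediction reaches the confidence threshold."""
    return not any(p["score"] >= threshold for p in predictions)


# Hypothetical model outputs, for illustration only
confident = [{"id": "person", "score": 0.97}, {"id": "dog", "score": 0.42}]
uncertain = [{"id": "bicycle", "score": 0.61}]

print(needs_human_review(confident))  # False
print(needs_human_review(uncertain))  # True
print(needs_human_review([]))         # True: nothing was detected
```

A check like this could decide whether to start a human loop at all, instead of sending every image for review.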

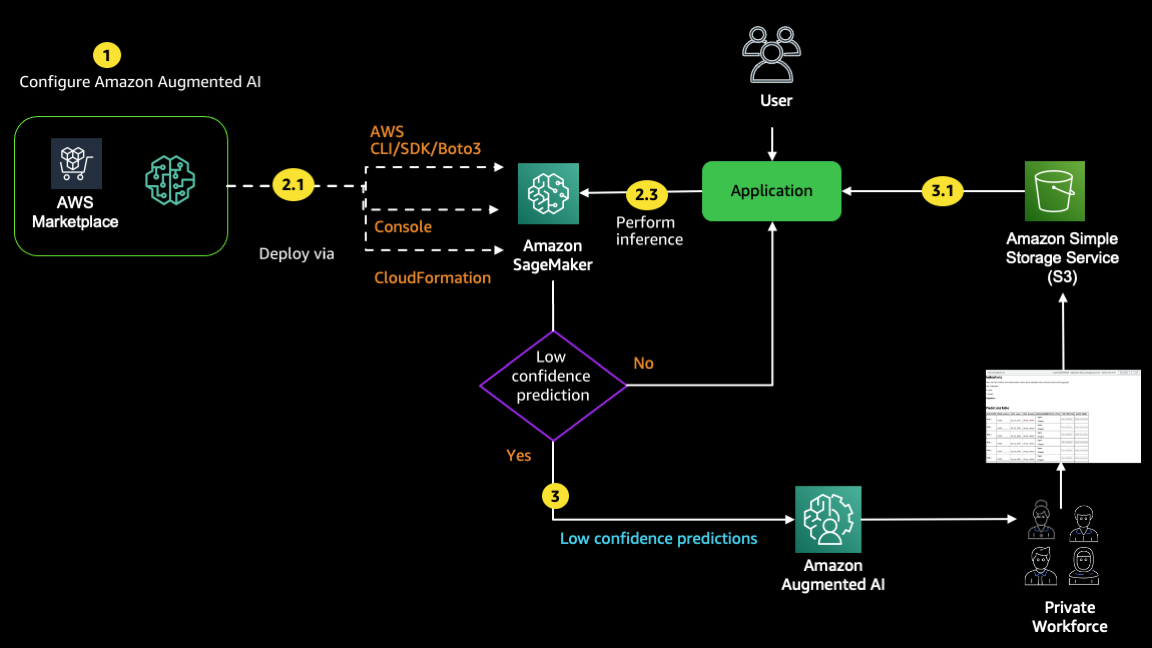

The diagram above shows the AWS services that are used in this notebook and the steps that you will perform. Here are the high-level steps:

1. Configure the human-in-loop review using Amazon A2I.
2. Select, deploy, and invoke an AWS Marketplace ML model.
3. Trigger the human review workflow in Amazon A2I.
4. The private workforce that was created in Amazon SageMaker Ground Truth reviews and edits the objects detected in the image.


Usage instructions

You can run this notebook one cell at a time by pressing Shift+Enter.

Prerequisites

This sample notebook requires a subscription to GluonCV SSD Object Detector, a pretrained machine learning model package from AWS Marketplace. If your AWS account is not subscribed to this listing, follow these steps:

1. Open the listing from AWS Marketplace.
2. Read the Highlights section and then the Product Overview section of the listing.
3. View the usage information and then the additional resources.
4. Note the supported instance types.
5. Choose Continue to subscribe.
6. Review the end-user license agreement (EULA), support terms, and pricing information.
7. Choose Accept Offer if your organization agrees with the EULA, pricing information, and support terms.

If the Continue to configuration button is active, your account already has a subscription to this listing. After you choose Continue to configuration and then choose a region, a Product ARN appears. This is the model package ARN that you need to specify in the following cell.

[ ]:

model_package_arn = "arn:aws:sagemaker:us-east-1:865070037744:model-package/gluoncv-ssd-resnet501547760463-0f9e6796d2438a1d64bb9b15aac57bc0" # Update as needed
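Because the Product ARN is shown per region, the ARN above has to match the region in which the notebook runs. As a rough sanity check, the following sketch splits an ARN into its parts. The helper name parse_model_package_arn is hypothetical, and the parsing assumes the standard arn:partition:service:region:account-id:resource layout.

```python
def parse_model_package_arn(arn):
    """Split a SageMaker model package ARN into its components."""
    parts = arn.split(":", 5)
    if len(parts) != 6 or parts[0] != "arn" or parts[2] != "sagemaker":
        raise ValueError(f"Not a SageMaker ARN: {arn!r}")
    resource_type, _, name = parts[5].partition("/")
    if resource_type != "model-package" or not name:
        raise ValueError(f"Not a model package ARN: {arn!r}")
    return {"region": parts[3], "account": parts[4], "name": name}


example_arn = (
    "arn:aws:sagemaker:us-east-1:865070037744:model-package/"
    "gluoncv-ssd-resnet501547760463-0f9e6796d2438a1d64bb9b15aac57bc0"
)
info = parse_model_package_arn(example_arn)
print(info["region"])  # us-east-1
```

Comparing info["region"] with the region of the SageMaker session before deploying can catch a mismatched ARN early.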

This notebook requires the IAM role associated with this notebook to have the AmazonSageMakerFullAccess managed policy attached.

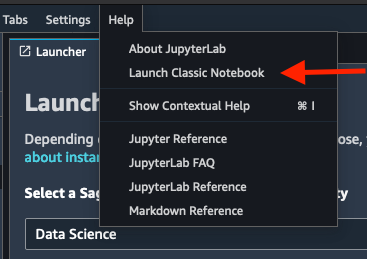

Note: To run this notebook in Amazon SageMaker Studio, use Classic Jupyter mode so that the visualizations render correctly. Pick instance type ‘ml.m4.xlarge’ or larger, and set the kernel to ‘Data Science’.

Installing Dependencies

Import the libraries that are needed for this notebook.

[ ]:

# Import necessary libraries

import boto3

import json

import pandas as pd

import pprint

import requests

import sagemaker

import shutil

import time

import uuid

import PIL.Image

from IPython.display import Image

from IPython.display import Markdown as md

from sagemaker import get_execution_role

from sagemaker import ModelPackage

Setup Variables, Bucket and Paths

[ ]:

# Setting Role to the default SageMaker Execution Role

role = get_execution_role()

# Instantiate the SageMaker session and client that will be used throughout the notebook

sagemaker_session = sagemaker.Session()

sagemaker_client = sagemaker_session.sagemaker_client

# Fetch the region

region = sagemaker_session.boto_region_name

# Create S3 and A2I clients

s3 = boto3.client("s3", region)

a2i = boto3.client("sagemaker-a2i-runtime", region)

# Retrieve the current timestamp

timestamp = time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime())

# endpoint_name = '<ENDPOINT_NAME>'

endpoint_name = "gluoncv-object-detector"

# content_type='<CONTENT_TYPE>'

content_type = "image/jpeg"

# Instance size type to be used for making real-time predictions

real_time_inference_instance_type = "ml.m4.xlarge"

# Task UI name - this value is unique per account and region. You can also provide your own value here.

# task_ui_name = '<TASK_UI_NAME>'

task_ui_name = "ui-aws-marketplace-gluon-model-" + timestamp

# Flow definition name - this value is unique per account and region. You can also provide your own value here.

flow_definition_name = "fd-aws-marketplace-gluon-model-" + timestamp

# Name of the image file that will be used in object detection

image_file_name = "image.jpg"
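Because the task UI and flow definition names are built from a prefix and a timestamp, it can help to check a candidate name before calling the API. The sketch below is illustrative only. It assumes the names must consist of lowercase letters, digits, and hyphens, start and end with an alphanumeric character, and be at most 63 characters long; confirm these constraints against the SageMaker API reference. The helper name is_valid_resource_name is hypothetical.

```python
import re
import time

# Assumed constraint: lowercase alphanumerics and hyphens, beginning and
# ending with an alphanumeric character, no longer than 63 characters.
NAME_PATTERN = re.compile(r"[a-z0-9](-*[a-z0-9])*")
MAX_NAME_LENGTH = 63


def is_valid_resource_name(name):
    """Check a candidate name against the assumed naming constraints."""
    return len(name) <= MAX_NAME_LENGTH and NAME_PATTERN.fullmatch(name) is not None


timestamp = time.strftime("%Y-%m-%d-%H-%M-%S", time.gmtime())
candidate = "fd-aws-marketplace-gluon-model-" + timestamp

print(is_valid_resource_name(candidate))   # True
print(is_valid_resource_name("My_Flow"))   # False: uppercase and underscore
print(is_valid_resource_name("a" * 64))    # False: too long
```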

[ ]:

# Create the sub-directory in the default S3 bucket

# that will store the results of the human-in-loop A2I review

bucket = sagemaker_session.default_bucket()

key = "a2i-results"

s3.put_object(Bucket=bucket, Key=(key + "/"))

output_path = f"s3://{bucket}/a2i-results"

print(f"Results of A2I will be stored in {output_path}.")
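The output path is an S3 URI, and later cells need its bucket and key separately. One way to split such a URI with the standard library is sketched below. The helper name split_s3_uri and the example URI are hypothetical.

```python
from urllib.parse import urlparse


def split_s3_uri(uri):
    """Return (bucket, key) for a URI of the form s3://bucket/key."""
    parsed = urlparse(uri)
    if parsed.scheme != "s3" or not parsed.netloc:
        raise ValueError(f"Not an S3 URI: {uri!r}")
    return parsed.netloc, parsed.path.lstrip("/")


bucket_name, object_key = split_s3_uri("s3://example-bucket/a2i-results/2024/output.json")
print(bucket_name)  # example-bucket
print(object_key)   # a2i-results/2024/output.json
```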

Step 1 Configure Amazon A2I service

In this section, you will create three resources:

1. Private workforce
2. Human-in-loop console UI
3. Workflow definition

Step 1.1 Creating human review Workteam or Workforce

If you have already created a private work team, replace <WORKTEAM_ARN> in the cell below with the ARN of your work team. If you have never created a private work team, use the instructions below to create one. To learn more about using and managing private work teams, see Use a Private Workforce.

1. In the Amazon SageMaker console, in the left sidebar under the Ground Truth heading, open Labeling workforces.
2. Choose Private, and then choose Create private team.
3. If you are a new user of SageMaker workforces, it is recommended that you select Create a private work team with AWS Cognito.
4. For team name, enter “MyTeam”.
5. To add workers by email, select Invite new workers by email and paste or type a list of up to 50 email addresses, separated by commas, into the email addresses box. If you are following this notebook, specify an email account that you have access to. The system sends an invitation email, which allows users to authenticate and set up their profile for performing human-in-loop review.
6. Enter an organization name. This will be used to customize emails sent to your workers.
7. For contact email, enter an email address that you have access to.
8. Select Create private team.

This will bring you back to the Private tab under Labeling workforces, where you can view and manage your private teams and workers.

IMPORTANT: After you have created your work team, copy the ARN from the Team summary section, then uncomment the line below and replace <WORKTEAM_ARN> with that value:

[ ]:

# workteam_arn = '<WORKTEAM_ARN>'

Step 1.2 Create Human Task UI

Create a human task UI resource by providing a UI template written in Liquid HTML. This template is rendered for the human workers whenever a human loop is required.

For additional UI templates, check out this repository: https://github.com/aws-samples/amazon-a2i-sample-task-uis.

You will be using a slightly modified version of the object detection UI that provides support for the initial-value and labels variables in the template.

[ ]:

# Create task UI

# Read in the template from a local file
with open("./src/worker-task-template.html") as f:
    template = f.read()

human_task_ui_response = sagemaker_client.create_human_task_ui(
    HumanTaskUiName=task_ui_name, UiTemplate={"Content": template}
)

human_task_ui_arn = human_task_ui_response["HumanTaskUiArn"]
print(human_task_ui_arn)

Step 1.3 Create the Flow Definition

In this section, you will create a flow definition. Flow Definitions allow you to specify:

The workforce that your tasks will be sent to.

The instructions that your workforce will receive. This is called a worker task template.

The configuration of your worker tasks, including the number of workers that receive a task and time limits to complete tasks.

Where your output data will be stored.

For more details and instructions, see: https://docs.aws.amazon.com/sagemaker/latest/dg/a2i-create-flow-definition.html.
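The options listed above map onto the HumanLoopConfig argument of create_flow_definition. The sketch below assembles such a dictionary in plain Python so that the pieces are easy to see. The helper name build_human_loop_config and the placeholder ARNs are hypothetical. TaskTimeLimitInSeconds and TaskAvailabilityLifetimeInSeconds are optional fields that this notebook does not set, and the values used here are arbitrary.

```python
def build_human_loop_config(workteam_arn, human_task_ui_arn, task_count=1,
                            time_limit_seconds=None, availability_seconds=None):
    """Assemble a HumanLoopConfig dictionary for create_flow_definition."""
    config = {
        "WorkteamArn": workteam_arn,            # who receives the tasks
        "HumanTaskUiArn": human_task_ui_arn,    # the worker task template
        "TaskCount": task_count,                # workers per task
        "TaskDescription": "Identify and locate the object in an image.",
        "TaskTitle": "Object detection Amazon A2I demo",
    }
    # Optional limits are only included when a value is supplied
    if time_limit_seconds is not None:
        config["TaskTimeLimitInSeconds"] = time_limit_seconds
    if availability_seconds is not None:
        config["TaskAvailabilityLifetimeInSeconds"] = availability_seconds
    return config


example_config = build_human_loop_config(
    "<WORKTEAM_ARN>", "<HUMAN_TASK_UI_ARN>", task_count=2, time_limit_seconds=600
)
print(sorted(example_config))
```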

[ ]:

create_workflow_definition_response = sagemaker_client.create_flow_definition(
    FlowDefinitionName=flow_definition_name,
    RoleArn=role,
    HumanLoopConfig={
        "WorkteamArn": workteam_arn,
        "HumanTaskUiArn": human_task_ui_arn,
        "TaskCount": 1,
        "TaskDescription": "Identify and locate the object in an image.",
        "TaskTitle": "Object detection Amazon A2I demo",
    },
    OutputConfig={"S3OutputPath": output_path},
)

# Save this ARN for future use
flow_definition_arn = create_workflow_definition_response["FlowDefinitionArn"]

[ ]:

%%time

# Describe flow definition - status should be active
for x in range(60):
    describe_flow_definition_response = sagemaker_client.describe_flow_definition(
        FlowDefinitionName=flow_definition_name
    )
    print(describe_flow_definition_response["FlowDefinitionStatus"])
    if describe_flow_definition_response["FlowDefinitionStatus"] == "Active":
        print("Flow Definition is active")
        break
    time.sleep(2)
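The polling pattern in the cell above can be factored into a small reusable helper. The following is a sketch under stated assumptions: the helper name wait_for_status is hypothetical, and a stand-in iterator replaces the real describe_flow_definition call so that the example runs without AWS access.

```python
import time


def wait_for_status(get_status, target, attempts=60, delay=2.0):
    """Poll get_status() until it returns target; return True on success."""
    for _ in range(attempts):
        if get_status() == target:
            return True
        time.sleep(delay)
    return False


# Stand-in for the API call: reports "Initializing" twice, then "Active"
statuses = iter(["Initializing", "Initializing", "Active"])
became_active = wait_for_status(lambda: next(statuses), "Active", delay=0)
print(became_active)  # True
```

Returning False after the last attempt lets the caller decide how to react to a timeout, instead of silently continuing with a flow definition that never became active.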

Step 2 Deploy and invoke AWS Marketplace model

In this section, you will stand up an Amazon SageMaker endpoint. Each endpoint must have a unique name which you can use for performing inference.

Step 2.1 Create an Endpoint

[ ]:

%%time

# Create a deployable model from the model package.
model = ModelPackage(
    role=role,
    model_package_arn=model_package_arn,
    sagemaker_session=sagemaker_session,
    predictor_cls=sagemaker.predictor.Predictor,
)

# Deploy the model
predictor = model.deploy(
    initial_instance_count=1,
    instance_type=real_time_inference_instance_type,
    endpoint_name=endpoint_name,
)

It takes between 5 and 10 minutes to create the endpoint. Once the endpoint has been created, you can perform real-time inference.

Step 2.2 Create input payload

In this step, you will prepare a payload to perform a prediction.

[ ]:

# Download the image file
# Request the image with stream=True so that the response body can be read as a stream.
r = requests.get("https://images.pexels.com/photos/763398/pexels-photo-763398.jpeg", stream=True)
r.raise_for_status()

# Open a local file in write-binary ("wb") mode and save the image to it.
with open(image_file_name, "wb") as f:
    shutil.copyfileobj(r.raw, f)

Resize the image and upload the file to S3 so that the image can be referenced from the worker console UI.

[ ]:

# Load the image
image = PIL.Image.open(image_file_name)

# Resize the image
resized_image = image.resize((600, 400))

# Save the resized image file locally
resized_image.save(image_file_name)

# Save file to S3, reusing the S3 client created earlier
with open(image_file_name, "rb") as f:
    s3.upload_fileobj(f, bucket, image_file_name)

# Display the image (Image was imported from IPython.display above)
Image(filename=image_file_name, width=600, height=400)
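Resizing to a fixed 600 x 400 changes the aspect ratio unless the source image is already 3:2. If distortion matters for your images, one option is to compute a target size that fits inside the same box while keeping the proportions. The helper below is a hypothetical sketch of that arithmetic and is not part of the original notebook. Note that it also scales small images up to fill the box.

```python
def fit_within(width, height, max_width=600, max_height=400):
    """Return the largest (width, height) that fits the box and keeps the aspect ratio."""
    scale = min(max_width / width, max_height / height)
    return max(1, round(width * scale)), max(1, round(height * scale))


print(fit_within(1200, 800))   # (600, 400)
print(fit_within(1000, 1000))  # (400, 400)
print(fit_within(2000, 1000))  # (600, 300)
```

The resulting tuple could be passed to image.resize in place of the fixed (600, 400).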

Step 2.3 Perform real-time inference

Submit the image file to the model and it will detect the objects in the image.

[ ]:

with open(image_file_name, "rb") as f:
    payload = f.read()

response = sagemaker_session.sagemaker_runtime_client.invoke_endpoint(
    EndpointName=endpoint_name, ContentType=content_type, Accept="json", Body=payload
)
result = json.loads(response["Body"].read().decode())

# Load the list of predictions into a DataFrame
df = pd.DataFrame(result)

# Display confidence scores < 0.90
df = df[df.score < 0.90]
print(df.head())

Step 3 Starting Human Loops

In the previous step, you submitted your image to the model for prediction and stored the output in the result variable. You now need to convert the corner coordinates of each bounding box (left, top, right, bottom) into the left, top, width, and height values used by the worker task UI. You can also filter out all predictions whose confidence score does not exceed 90% before submitting the image for human-in-loop review. This ensures that only high-confidence model predictions are shown to the reviewer, and that any additional objects are identified by a human.

[ ]:

# Helper function to update X,Y coordinates and labels for the bounding boxes
def fix_boundingboxes(prediction_results, threshold=0.8):
    bounding_boxes = []
    labels = set()

    for data in prediction_results:
        label = data["id"]
        labels.add(label)

        # Keep only the boxes whose confidence score exceeds the threshold
        if data["score"] > threshold:
            width = data["right"] - data["left"]
            height = data["bottom"] - data["top"]
            top = data["top"]
            left = data["left"]
            bounding_boxes.append(
                {"height": height, "width": width, "top": top, "left": left, "label": label}
            )

    return bounding_boxes, list(labels)


bounding_boxes, labels = fix_boundingboxes(result, threshold=0.9)
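To make the coordinate conversion concrete, here is a single prediction worked through by hand. The prediction values are hypothetical and only mirror the keys that the helper above reads.

```python
# Hypothetical prediction in the corner format (left, top, right, bottom)
prediction = {"id": "person", "score": 0.95, "left": 120, "top": 40, "right": 300, "bottom": 380}

box = {
    "label": prediction["id"],
    "left": prediction["left"],
    "top": prediction["top"],
    "width": prediction["right"] - prediction["left"],    # 300 - 120 = 180
    "height": prediction["bottom"] - prediction["top"],   # 380 - 40 = 340
}

print(box["width"], box["height"])  # 180 340
```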

[ ]:

# Define the content that is passed into the human-in-loop workflow and console
human_loop_name = str(uuid.uuid4())

input_content = {
    # the bounding box values that have been detected by model prediction
    "initialValue": bounding_boxes,
    # the S3 object that is passed to the worker task UI to render
    "taskObject": f"s3://{bucket}/{image_file_name}",
    # the labels that are displayed in the legend
    "labels": labels,
}

# Trigger the human-in-loop workflow
start_loop_response = a2i.start_human_loop(
    HumanLoopName=human_loop_name,
    FlowDefinitionArn=flow_definition_arn,
    HumanLoopInput={"InputContent": json.dumps(input_content)},
)

Now that the human-in-loop review has been triggered, you can log into the worker console to work on the task and make edits and additions to the object detection bounding boxes from the image.

[ ]:

# Fetch the URL for the worker console UI
workteam_name = workteam_arn.split("/")[-1]
my_workteam = sagemaker_session.sagemaker_client.list_workteams(NameContains=workteam_name)
worker_console_url = "https://" + my_workteam["Workteams"][0]["SubDomain"]

md(
    "### Click on the [Worker Console]({}) to begin reviewing the object detection".format(
        worker_console_url
    )
)

The image below shows the objects that your model detected in the sample image, as displayed in the worker console.

[Image: worker console showing the objects detected in the sample image]

You can now make edits to the image to mark other objects. For example, in the image above, the model failed to detect the bicycle in the foreground with a confidence score of 90% or greater. However, as a human reviewer, you can clearly see the bicycle and can draw a bounding box around it. Once you have finished your edits, you can submit the result.

Step 3.1 View Task Results

Once the work is completed, Amazon A2I stores the results in your S3 bucket and sends a CloudWatch event. Your results should be available in the S3 output_path that you specified when all work is completed. Note that the human answer, consisting of the label and the bounding box, is returned and saved in the JSON file.

NOTE: You must edit and submit the image in the worker console so that its status is Completed.

[ ]:

# Wait for the human-in-loop review to be completed, then use the
# response to locate the JSON output on S3
while True:
    resp = a2i.describe_human_loop(HumanLoopName=human_loop_name)
    print("-", sep="", end="", flush=True)

    if resp["HumanLoopStatus"] == "Completed":
        print("!")
        break

    # Stop waiting if the human loop can no longer complete
    if resp["HumanLoopStatus"] in ("Failed", "Stopped"):
        raise RuntimeError(f"Human loop ended with status {resp['HumanLoopStatus']}")

    time.sleep(2)

Once its status is Completed, you can execute the cell below to view the JSON output that is stored in S3. Under annotatedResult, any new bounding boxes are included along with those that the model predicted. To learn more about the output data schema, refer to the documentation about Output Data From Custom Task Types.

[ ]:

# Once the image has been submitted, display the JSON output that was sent to S3

bucket, key = resp["HumanLoopOutput"]["OutputS3Uri"].replace("s3://", "").split("/", 1)

response = s3.get_object(Bucket=bucket, Key=key)

content = response["Body"].read()

json_output = json.loads(content)

print(json.dumps(json_output, indent=1))
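After the JSON output is loaded, the reviewed bounding boxes can be pulled out with a few dictionary lookups. The sketch below assumes the output contains a humanAnswers list whose answerContent holds annotatedResult with a boundingBoxes list. Verify this against your own output, because the exact layout depends on the worker task template. The function name and the sample document are hypothetical.

```python
def extract_bounding_boxes(a2i_output):
    """Collect the bounding boxes submitted by every worker."""
    boxes = []
    for answer in a2i_output.get("humanAnswers", []):
        annotated = answer.get("answerContent", {}).get("annotatedResult", {})
        boxes.extend(annotated.get("boundingBoxes", []))
    return boxes


# Made-up output document that follows the assumed layout
sample_output = {
    "humanLoopName": "example-loop",
    "humanAnswers": [
        {
            "workerId": "worker-1",
            "answerContent": {
                "annotatedResult": {
                    "boundingBoxes": [
                        {"label": "person", "left": 120, "top": 40, "width": 180, "height": 340},
                        {"label": "bicycle", "left": 10, "top": 200, "width": 250, "height": 190},
                    ]
                }
            },
        }
    ],
}

reviewed_labels = [box["label"] for box in extract_bounding_boxes(sample_output)]
print(reviewed_labels)  # ['person', 'bicycle']
```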

Step 4 Next Steps

Step 4.1 Additional Resources

You can explore additional machine learning models in AWS Marketplace - Machine Learning.

Learn more about Amazon Augmented AI


Step 5 Clean up resources

To clean up the resources created in this notebook, execute the cells below.

[ ]:

# Delete Workflow definition

sagemaker_client.delete_flow_definition(FlowDefinitionName=flow_definition_name)

[ ]:

# Delete Human Task UI

sagemaker_client.delete_human_task_ui(HumanTaskUiName=task_ui_name)

[ ]:

# Delete Endpoint

predictor.delete_endpoint()

[ ]:

# Delete Model

predictor.delete_model()
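If one cleanup call fails, for example because a resource was already deleted, the remaining resources still need to be removed. One way to handle this is to run each step independently and collect the failures, as in the following sketch. The helper name run_cleanup is hypothetical, and placeholder callables stand in for the real delete calls so that the example runs without AWS access.

```python
def run_cleanup(steps):
    """Run each (description, callable) pair; return the descriptions that failed."""
    failed = []
    for description, action in steps:
        try:
            action()
            print(f"Done: {description}")
        except Exception as error:  # keep going so later resources are still removed
            print(f"Failed: {description} ({error})")
            failed.append(description)
    return failed


def already_deleted():
    # Placeholder that simulates a delete call on a missing resource
    raise RuntimeError("resource not found")


failed_steps = run_cleanup([
    ("delete flow definition", lambda: None),
    ("delete human task UI", already_deleted),
    ("delete endpoint", lambda: None),
])
print(failed_steps)  # ['delete human task UI']
```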

Finally, if you subscribed to an AWS Marketplace model for an experiment and would like to unsubscribe, you can follow the steps below. Before you cancel the subscription, ensure that you do not have any deployable model created from the model package or using the algorithm. Note: You can find this information by looking at the container name associated with the model.

Steps to unsubscribe from the product on AWS Marketplace:

Navigate to the Machine Learning tab on the Your Software subscriptions page. Locate the listing that you want to cancel, and choose Cancel Subscription.
