Amazon SageMaker Studio Walkthrough

Using Gradient Boosted Trees to Predict Mobile Customer Departure

This notebook walks you through some of the main features of Amazon SageMaker Studio.

- Manage multiple trials

- Experiment with hyperparameters and charting

- Debug your model

- Set up a persistent endpoint to get predictions from your model

- Monitor the quality of your model

- Set alerts for when model quality deviates

Run this notebook from within Studio. For Studio onboarding and setup instructions, see the README.

Contents

Background - Predicting customer churn with XGBoost

Data - Prep the dataset and upload it to Amazon S3

Train - Train with the Amazon SageMaker XGBoost algorithm

Background

This notebook has been adapted from an AWS blog post.

Losing customers is costly for any business. Identifying unhappy customers early on gives you a chance to offer them incentives to stay. This notebook describes using machine learning (ML) for automated identification of unhappy customers, also known as customer churn prediction. It uses Amazon SageMaker features for managing experiments, training the model, and monitoring the deployed model.

Let’s install and import the Python libraries we’ll need for this exercise.

[2]:

import sys

!{sys.executable} -m pip install -qU awscli boto3 "sagemaker>=1.71.0,<2.0.0"

!{sys.executable} -m pip install sagemaker-experiments

ERROR: aiobotocore 1.2.1 has requirement botocore<1.19.53,>=1.19.52, but you'll have botocore 1.20.81 which is incompatible.

Collecting sagemaker-experiments

Downloading sagemaker_experiments-0.1.31-py3-none-any.whl (42 kB)

|████████████████████████████████| 42 kB 1.3 MB/s eta 0:00:011

Requirement already satisfied: boto3>=1.16.27 in /opt/conda/lib/python3.7/site-packages (from sagemaker-experiments) (1.17.81)

Requirement already satisfied: botocore<1.21.0,>=1.20.81 in /opt/conda/lib/python3.7/site-packages (from boto3>=1.16.27->sagemaker-experiments) (1.20.81)

Requirement already satisfied: s3transfer<0.5.0,>=0.4.0 in /opt/conda/lib/python3.7/site-packages (from boto3>=1.16.27->sagemaker-experiments) (0.4.2)

Requirement already satisfied: jmespath<1.0.0,>=0.7.1 in /opt/conda/lib/python3.7/site-packages (from boto3>=1.16.27->sagemaker-experiments) (0.10.0)

Requirement already satisfied: urllib3<1.27,>=1.25.4 in /opt/conda/lib/python3.7/site-packages (from botocore<1.21.0,>=1.20.81->boto3>=1.16.27->sagemaker-experiments) (1.25.8)

Requirement already satisfied: python-dateutil<3.0.0,>=2.1 in /opt/conda/lib/python3.7/site-packages (from botocore<1.21.0,>=1.20.81->boto3>=1.16.27->sagemaker-experiments) (2.8.1)

Requirement already satisfied: six>=1.5 in /opt/conda/lib/python3.7/site-packages (from python-dateutil<3.0.0,>=2.1->botocore<1.21.0,>=1.20.81->boto3>=1.16.27->sagemaker-experiments) (1.14.0)

Installing collected packages: sagemaker-experiments

Successfully installed sagemaker-experiments-0.1.31

[3]:

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import io

import os

import sys

import time

import json

from IPython.display import display

from time import strftime, gmtime

import boto3

import re

import sagemaker

from sagemaker import get_execution_role

from sagemaker.predictor import csv_serializer

from sagemaker.debugger import rule_configs, Rule, DebuggerHookConfig

from sagemaker.model_monitor import DataCaptureConfig, DatasetFormat, DefaultModelMonitor

from sagemaker.s3 import S3Uploader, S3Downloader

from smexperiments.experiment import Experiment

from smexperiments.trial import Trial

from smexperiments.trial_component import TrialComponent

from smexperiments.tracker import Tracker

[4]:

sess = boto3.Session()

sm = sess.client("sagemaker")

role = sagemaker.get_execution_role()

Data

Mobile operators’ records show which customers ended up churning and which continued using the service. We can use this historical information to train an ML model that can predict customer churn. After training the model, we can pass the profile information of an arbitrary customer (the same profile information that we used to train the model) to the model, and have the model predict whether this customer will churn.

The dataset that we use is publicly available and was mentioned in the book Discovering Knowledge in Data by Daniel T. Larose. It’s attributed by the author to the University of California Irvine Repository of Machine Learning Datasets. The downloaded and preprocessed dataset is in the data folder that accompanies this notebook. It’s been split into training and validation datasets. To see how the dataset was preprocessed, see this XGBoost customer churn notebook that starts with the original dataset.

We’ll train on a CSV file without the header. But for now, the following cell uses pandas to load some of the data from a version of the training data that has a header.

Explore the data to see the dataset’s features and what data will be used to train the model.
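As a rough sketch of how the two file versions relate, the snippet below drops the header row from a small in-memory CSV and checks that the label sits in the first column, which is the layout the training log reports (`label_column=0`). The sample rows are a few columns copied from the preview table, and the helper name `strip_header` is a hypothetical name used only for illustration. It uses the standard library rather than pandas so it can run anywhere.

```python
import csv
import io

# Illustrative sample only: a few columns copied from the preview table.
SAMPLE_WITH_HEADER = """Churn,Account Length,VMail Message,Day Mins
0,106,0,274.4
0,28,0,187.8
1,148,0,279.3
"""


def strip_header(csv_text, label="Churn"):
    """Return (headerless CSV text, feature names), checking the label is column 0."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    header, body = rows[0], rows[1:]
    if header[0] != label:
        raise ValueError("expected label column {!r} first, got {!r}".format(label, header[0]))
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(body)
    return out.getvalue(), header[1:]


headerless, features = strip_header(SAMPLE_WITH_HEADER)

# Fraction of sample rows labeled as churned (label is the first field).
lines = headerless.splitlines()
churn_rate = sum(int(line.split(",")[0]) for line in lines) / len(lines)

print(features)
print(lines[0])
print(churn_rate)
```

The same check is worth running before any upload: a header row or a misplaced label column would silently be read as data by a trainer that expects the label first.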

[5]:

# Set the path where we can find the data files that go with this notebook

%cd /root/amazon-sagemaker-examples/aws_sagemaker_studio/getting_started

local_data_path = "./data/training-dataset-with-header.csv"

data = pd.read_csv(local_data_path)

pd.set_option("display.max_columns", 500) # Make sure we can see all of the columns

pd.set_option("display.max_rows", 10) # Keep the output on one page

data

[Errno 2] No such file or directory: '/root/amazon-sagemaker-examples/aws_sagemaker_studio/getting_started'

/opt/ml/processing/input

[5]:

| | Churn | Account Length | VMail Message | Day Mins | Day Calls | Eve Mins | Eve Calls | Night Mins | Night Calls | Intl Mins | Intl Calls | CustServ Calls | State_AK | State_AL | State_AR | State_AZ | State_CA | State_CO | State_CT | State_DC | State_DE | State_FL | State_GA | State_HI | State_IA | State_ID | State_IL | State_IN | State_KS | State_KY | State_LA | State_MA | State_MD | State_ME | State_MI | State_MN | State_MO | State_MS | State_MT | State_NC | State_ND | State_NE | State_NH | State_NJ | State_NM | State_NV | State_NY | State_OH | State_OK | State_OR | State_PA | State_RI | State_SC | State_SD | State_TN | State_TX | State_UT | State_VA | State_VT | State_WA | State_WI | State_WV | State_WY | Area Code_408 | Area Code_415 | Area Code_510 | Int'l Plan_no | Int'l Plan_yes | VMail Plan_no | VMail Plan_yes |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 106 | 0 | 274.4 | 120 | 198.6 | 82 | 160.8 | 62 | 6.0 | 3 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 1 | 0 | 28 | 0 | 187.8 | 94 | 248.6 | 86 | 208.8 | 124 | 10.6 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |

| 2 | 1 | 148 | 0 | 279.3 | 104 | 201.6 | 87 | 280.8 | 99 | 7.9 | 2 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 1 | 0 |

| 3 | 0 | 132 | 0 | 191.9 | 107 | 206.9 | 127 | 272.0 | 88 | 12.6 | 2 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 4 | 0 | 92 | 29 | 155.4 | 110 | 188.5 | 104 | 254.9 | 118 | 8.0 | 4 | 3 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 0 | 1 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 2328 | 0 | 106 | 0 | 194.8 | 133 | 213.4 | 73 | 190.8 | 92 | 11.5 | 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 2329 | 1 | 125 | 0 | 143.2 | 80 | 88.1 | 94 | 233.2 | 135 | 8.8 | 7 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 2330 | 0 | 129 | 0 | 143.7 | 114 | 297.8 | 98 | 212.6 | 86 | 11.4 | 8 | 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 2331 | 0 | 159 | 0 | 198.8 | 107 | 195.5 | 91 | 213.3 | 120 | 16.5 | 7 | 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 0 | 1 | 0 |

| 2332 | 0 | 99 | 33 | 179.1 | 93 | 238.3 | 102 | 165.7 | 96 | 10.6 | 1 | 2 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 1 | 0 | 0 | 1 |

2333 rows × 70 columns

Now we’ll upload the files to S3 for training. First, we’ll create an S3 bucket for the data if one does not already exist.

[6]:

account_id = sess.client("sts", region_name=sess.region_name).get_caller_identity()["Account"]

bucket = "sagemaker-studio-{}-{}".format(sess.region_name, account_id)

prefix = "xgboost-churn"

try:

    if sess.region_name == "us-east-1":

        sess.client("s3").create_bucket(Bucket=bucket)

    else:

        sess.client("s3").create_bucket(

            Bucket=bucket, CreateBucketConfiguration={"LocationConstraint": sess.region_name}

        )

except Exception as e:

    print(

        "Looks like you already have a bucket of this name. That's good. Uploading the data files..."

    )

# Print the S3 URIs of the uploaded files so they can be reviewed or used elsewhere

s3url = S3Uploader.upload("data/train.csv", "s3://{}/{}/{}".format(bucket, prefix, "train"))

print(s3url)

s3url = S3Uploader.upload(

    "data/validation.csv", "s3://{}/{}/{}".format(bucket, prefix, "validation")

)

print(s3url)

Looks like you already have a bucket of this name. That's good. Uploading the data files...

s3://sagemaker-studio-us-west-2-521695447989/xgboost-churn/train/train.csv

s3://sagemaker-studio-us-west-2-521695447989/xgboost-churn/validation/validation.csv
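The region check in the cell above exists because `us-east-1` is the default bucket location and is not passed as a `LocationConstraint`. A minimal sketch of that branching, separated from any AWS call so it can be inspected offline, might look like the following. The helper names `bucket_request` and `s3_uri` and the account ID `123456789012` are placeholders invented for illustration.

```python
def bucket_request(bucket, region):
    """Build keyword arguments for an S3 create_bucket call in the given region."""
    kwargs = {"Bucket": bucket}
    # us-east-1 is the default location, so no LocationConstraint is sent for it.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    return kwargs


def s3_uri(bucket, prefix, channel):
    """Compose the S3 URI for one data channel, such as train or validation."""
    return "s3://{}/{}/{}".format(bucket, prefix, channel)


# Placeholder region and account ID, for illustration only.
bucket = "sagemaker-studio-{}-{}".format("us-west-2", "123456789012")

print(bucket_request(bucket, "us-west-2"))
print(bucket_request(bucket, "us-east-1"))
print(s3_uri(bucket, "xgboost-churn", "train"))
```

With a boto3 client in hand, the returned dictionary could be unpacked into the call, for example `client.create_bucket(**bucket_request(bucket, region))`.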

Train

Let’s move on to training. We’ll train a class of models known as gradient boosted decision trees on the data that we just uploaded, using the XGBoost library.

Because we’re using XGBoost, we’ll first need to specify the locations of the XGBoost algorithm containers.

[7]:

from sagemaker.amazon.amazon_estimator import get_image_uri

docker_image_name = get_image_uri(boto3.Session().region_name, "xgboost", repo_version="0.90-2")

WARNING:sagemaker.amazon.amazon_estimator:'get_image_uri' method will be deprecated in favor of 'ImageURIProvider' class in SageMaker Python SDK v2.

WARNING:sagemaker.amazon.amazon_estimator:There is a more up to date SageMaker XGBoost image. To use the newer image, please set 'repo_version'='1.0-1'. For example:

get_image_uri(region, 'xgboost', '1.0-1').

Then, because we’re training with the CSV file format, we’ll create s3_inputs that our training function can use as a pointer to the files in S3.

[8]:

s3_input_train = sagemaker.s3_input(

    s3_data="s3://{}/{}/train".format(bucket, prefix), content_type="csv"

)

s3_input_validation = sagemaker.s3_input(

    s3_data="s3://{}/{}/validation/".format(bucket, prefix), content_type="csv"

)

WARNING:sagemaker:'s3_input' class will be renamed to 'TrainingInput' in SageMaker Python SDK v2.

WARNING:sagemaker:'s3_input' class will be renamed to 'TrainingInput' in SageMaker Python SDK v2.

Amazon SageMaker Experiments

Amazon SageMaker Experiments allows us to keep track of model training; organize related models together; and log model configuration, parameters, and metrics so we can reproduce and iterate on previously trained models and compare models. We’ll create a single experiment to keep track of the different approaches to training the model that we’ll try.

Each approach or block of training code we run will be an experiment trial. Later, we’ll compare different trials in Studio.

Let’s create the experiment.

[9]:

sess = sagemaker.session.Session()

create_date = strftime("%Y-%m-%d-%H-%M-%S", gmtime())

customer_churn_experiment = Experiment.create(

    experiment_name="customer-churn-prediction-xgboost-{}".format(create_date),

    description="Using xgboost to predict customer churn",

    sagemaker_boto_client=boto3.client("sagemaker"),

)
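The timestamp suffix in the experiment name keeps repeated runs of the notebook from colliding on the same name. A small sketch of that naming pattern is shown below. The helper `timestamped_name` is a hypothetical convenience function, not part of the SageMaker SDK, and the regular expression is only a sanity check on the format produced here.

```python
import re
from time import gmtime, strftime


def timestamped_name(prefix, when=None):
    """Append a UTC timestamp so repeated runs do not reuse the same name."""
    stamp = strftime("%Y-%m-%d-%H-%M-%S", when if when is not None else gmtime())
    return "{}-{}".format(prefix, stamp)


# A fixed time (the Unix epoch) makes the result reproducible for this example.
fixed = timestamped_name("customer-churn-prediction-xgboost", gmtime(0))
print(fixed)  # customer-churn-prediction-xgboost-1970-01-01-00-00-00

# In normal use, omit the second argument to stamp the current UTC time.
current = timestamped_name("algorithm-mode-trial")

# Sanity check: the generated name contains only letters, digits, and hyphens.
assert re.fullmatch(r"[A-Za-z0-9-]+", current)
```

Because the timestamp has one-second resolution, two names created within the same second would still collide; adding a short random suffix is one way around that if it matters.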

Hyperparameters

Now, we can specify our XGBoost hyperparameters, including the following key hyperparameters:

- max_depth: Controls how deep each tree within the algorithm can be built. Deeper trees can lead to better fit, but are more computationally expensive and can lead to overfitting. Typically, you need to explore trade-offs in model performance between using a large number of shallow trees and a smaller number of deeper trees.
- subsample: Controls training data sampling. This hyperparameter can help reduce overfitting, but setting it too low can also starve the model of data.
- num_round: Controls the number of boosting rounds. This is essentially the number of subsequent models that are trained using the residuals of previous iterations. More rounds can produce a better fit on the training data, but can be computationally expensive or lead to overfitting.
- eta: Controls how aggressive each round of boosting is. It is the step size applied to each round's update, so smaller values lead to more conservative boosting.
- gamma: Controls how aggressively trees are grown. Larger values lead to more conservative models.
- min_child_weight: Also controls how aggressively trees are grown. Larger values lead to a more conservative algorithm.

For more details, see XGBoost’s hyperparameters GitHub page.
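To make the interplay between eta and num_round concrete, here is a deliberately simplified toy, not XGBoost itself: each round fits the best constant to the current residuals (their mean) and adds eta times that value to the prediction. Under this assumption the remaining error shrinks by a factor of (1 - eta) per round, so a smaller eta takes more cautious steps and needs more rounds to reach the same fit. The function name `boost_constant` and the target values are made up for illustration.

```python
def boost_constant(targets, eta, num_round):
    """Toy boosting: each round fits the mean residual and adds eta times it."""
    prediction = 0.0
    history = []
    for _ in range(num_round):
        residuals = [t - prediction for t in targets]
        step = sum(residuals) / len(residuals)  # best constant fit to the residuals
        prediction += eta * step  # eta scales down each round's update
        history.append(prediction)
    return history


targets = [1.0, 2.0, 3.0]  # the mean, and so the best constant prediction, is 2.0

fast = boost_constant(targets, eta=0.8, num_round=5)
slow = boost_constant(targets, eta=0.2, num_round=5)

# After n rounds the prediction equals 2.0 * (1 - (1 - eta) ** n).
print(fast[-1])
print(slow[-1])
```

Real gradient boosted trees fit a tree rather than a constant in each round, but the role of eta as a multiplier on every round's contribution is the same idea.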

[10]:

hyperparams = {

    "max_depth": 5,

    "subsample": 0.8,

    "num_round": 600,

    "eta": 0.2,

    "gamma": 4,

    "min_child_weight": 6,

    "silent": 0,

    "objective": "binary:logistic",

}

Trial 1 - XGBoost in algorithm mode

For our first trial, we’ll use the built-in xgboost container to train a model without providing additional code. This way, we can use XGBoost to train and deploy a model as we would with other Amazon SageMaker built-in algorithms.

We’ll create a new trial object for this trial and associate the trial with the experiment that we created earlier. To train the model, we’ll create an estimator and specify a few parameters, such as the type and number of training instances we’d like to use and where to store the trained model artifacts.

We’ll also associate the training job with the experiment trial that we just created (when we call estimator.fit).
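The association is made through a small dictionary passed as `experiment_config` to `estimator.fit`, with the keys `ExperimentName`, `TrialName`, and `TrialComponentDisplayName`. As a sketch, the dictionary can be built by a helper that rejects empty names before a training job is launched. The helper name `make_experiment_config` and the example names are assumptions made for illustration, not part of the SDK.

```python
def make_experiment_config(experiment_name, trial_name, display_name="Training"):
    """Build the dict passed as experiment_config to estimator.fit."""
    for label, value in (("experiment_name", experiment_name), ("trial_name", trial_name)):
        if not value:
            raise ValueError("{} must be a non-empty string".format(label))
    return {
        "ExperimentName": experiment_name,
        "TrialName": trial_name,
        "TrialComponentDisplayName": display_name,
    }


# Hypothetical names; in the notebook these come from the Experiment and Trial objects.
config = make_experiment_config(
    "customer-churn-prediction-xgboost-demo",
    "algorithm-mode-trial-demo",
)
print(config)
```

Catching an empty name locally is cheaper than discovering it after a training job has already been submitted.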

[11]:

trial = Trial.create(

    trial_name="algorithm-mode-trial-{}".format(strftime("%Y-%m-%d-%H-%M-%S", gmtime())),

    experiment_name=customer_churn_experiment.experiment_name,

    sagemaker_boto_client=boto3.client("sagemaker"),

)

xgb = sagemaker.estimator.Estimator(

    image_name=docker_image_name,

    role=role,

    hyperparameters=hyperparams,

    train_instance_count=1,

    train_instance_type="ml.m4.xlarge",

    output_path="s3://{}/{}/output".format(bucket, prefix),

    base_job_name="demo-xgboost-customer-churn",

    sagemaker_session=sess,

)

xgb.fit(

    {"train": s3_input_train, "validation": s3_input_validation},

    experiment_config={

        "ExperimentName": customer_churn_experiment.experiment_name,

        "TrialName": trial.trial_name,

        "TrialComponentDisplayName": "Training",

    },

)

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-07-55-255

2021-05-27 00:07:55 Starting - Starting the training job...

2021-05-27 00:07:58 Starting - Launching requested ML instances......

2021-05-27 00:09:10 Starting - Preparing the instances for training.........

2021-05-27 00:10:30 Downloading - Downloading input data......

2021-05-27 00:11:49 Training - Downloading the training image.........

2021-05-27 00:13:25 Uploading - Uploading generated training model

2021-05-27 00:13:25 Completed - Training job completed

INFO:sagemaker-containers:Imported framework sagemaker_xgboost_container.training

INFO:sagemaker-containers:Failed to parse hyperparameter objective value binary:logistic to Json.

Returning the value itself

INFO:sagemaker-containers:No GPUs detected (normal if no gpus installed)

INFO:sagemaker_xgboost_container.training:Running XGBoost Sagemaker in algorithm mode

INFO:root:Determined delimiter of CSV input is ','

INFO:root:Determined delimiter of CSV input is ','

INFO:root:Determined delimiter of CSV input is ','

[00:13:11] 2333x69 matrix with 160977 entries loaded from /opt/ml/input/data/train?format=csv&label_column=0&delimiter=,

INFO:root:Determined delimiter of CSV input is ','

[00:13:11] 666x69 matrix with 45954 entries loaded from /opt/ml/input/data/validation?format=csv&label_column=0&delimiter=,

INFO:root:Single node training.

INFO:root:Train matrix has 2333 rows

INFO:root:Validation matrix has 666 rows

[0]#011train-error:0.077154#011validation-error:0.099099

[1]#011train-error:0.050579#011validation-error:0.081081

[2]#011train-error:0.048864#011validation-error:0.075075

[3]#011train-error:0.046721#011validation-error:0.072072

[4]#011train-error:0.048007#011validation-error:0.073574

[5]#011train-error:0.046721#011validation-error:0.070571

[6]#011train-error:0.045435#011validation-error:0.073574

[7]#011train-error:0.043721#011validation-error:0.069069

[8]#011train-error:0.045006#011validation-error:0.069069

[9]#011train-error:0.042435#011validation-error:0.066066

[10]#011train-error:0.040291#011validation-error:0.064565

[11]#011train-error:0.039006#011validation-error:0.067568

[12]#011train-error:0.038577#011validation-error:0.064565

[13]#011train-error:0.03772#011validation-error:0.064565

[14]#011train-error:0.03772#011validation-error:0.066066

[15]#011train-error:0.039434#011validation-error:0.067568

[16]#011train-error:0.038577#011validation-error:0.063063

[17]#011train-error:0.03772#011validation-error:0.064565

[18]#011train-error:0.039434#011validation-error:0.063063

[19]#011train-error:0.039863#011validation-error:0.06006

[20]#011train-error:0.039434#011validation-error:0.063063

[21]#011train-error:0.038577#011validation-error:0.063063

[22]#011train-error:0.038148#011validation-error:0.066066

[23]#011train-error:0.036862#011validation-error:0.067568

[24]#011train-error:0.036005#011validation-error:0.067568

[25]#011train-error:0.034291#011validation-error:0.067568

[26]#011train-error:0.033862#011validation-error:0.069069

[27]#011train-error:0.033862#011validation-error:0.069069

[28]#011train-error:0.033862#011validation-error:0.067568

[29]#011train-error:0.033862#011validation-error:0.072072

[30]#011train-error:0.033862#011validation-error:0.070571

[31]#011train-error:0.034291#011validation-error:0.072072

[32]#011train-error:0.034719#011validation-error:0.070571

[33]#011train-error:0.034719#011validation-error:0.069069

[34]#011train-error:0.033005#011validation-error:0.069069

[35]#011train-error:0.033862#011validation-error:0.069069

[36]#011train-error:0.033862#011validation-error:0.069069

[37]#011train-error:0.033005#011validation-error:0.067568

[38]#011train-error:0.033433#011validation-error:0.067568

[39]#011train-error:0.033005#011validation-error:0.067568

[40]#011train-error:0.031719#011validation-error:0.069069

[41]#011train-error:0.031719#011validation-error:0.069069

[42]#011train-error:0.030862#011validation-error:0.069069

[43]#011train-error:0.03129#011validation-error:0.069069

[44]#011train-error:0.030862#011validation-error:0.067568

[45]#011train-error:0.03129#011validation-error:0.067568

[46]#011train-error:0.030862#011validation-error:0.066066

[47]#011train-error:0.030862#011validation-error:0.066066

[48]#011train-error:0.030433#011validation-error:0.066066

[49]#011train-error:0.030004#011validation-error:0.067568

[50]#011train-error:0.030004#011validation-error:0.067568

[51]#011train-error:0.030004#011validation-error:0.066066

[52]#011train-error:0.030004#011validation-error:0.066066

[53]#011train-error:0.030004#011validation-error:0.066066

[54]#011train-error:0.030004#011validation-error:0.066066

[55]#011train-error:0.030433#011validation-error:0.067568

[56]#011train-error:0.030433#011validation-error:0.067568

[57]#011train-error:0.030433#011validation-error:0.067568

[58]#011train-error:0.030433#011validation-error:0.067568

[59]#011train-error:0.030433#011validation-error:0.067568

[60]#011train-error:0.030433#011validation-error:0.067568

[61]#011train-error:0.030433#011validation-error:0.067568

[62]#011train-error:0.030433#011validation-error:0.067568

[63]#011train-error:0.030433#011validation-error:0.067568

[64]#011train-error:0.030433#011validation-error:0.067568

[65]#011train-error:0.030433#011validation-error:0.067568

[66]#011train-error:0.030433#011validation-error:0.067568

[67]#011train-error:0.030433#011validation-error:0.067568

[68]#011train-error:0.029147#011validation-error:0.067568

[69]#011train-error:0.029147#011validation-error:0.066066

[70]#011train-error:0.029147#011validation-error:0.067568

[71]#011train-error:0.028718#011validation-error:0.066066

[72]#011train-error:0.029147#011validation-error:0.066066

[73]#011train-error:0.029147#011validation-error:0.066066

[74]#011train-error:0.029147#011validation-error:0.066066

[75]#011train-error:0.029147#011validation-error:0.066066

[76]#011train-error:0.029147#011validation-error:0.066066

[77]#011train-error:0.029147#011validation-error:0.066066

[78]#011train-error:0.029576#011validation-error:0.066066

[79]#011train-error:0.030004#011validation-error:0.066066

[80]#011train-error:0.029576#011validation-error:0.066066

[81]#011train-error:0.02829#011validation-error:0.066066

[82]#011train-error:0.02829#011validation-error:0.066066

[83]#011train-error:0.02829#011validation-error:0.066066

[84]#011train-error:0.027861#011validation-error:0.066066

[85]#011train-error:0.027861#011validation-error:0.066066

[86]#011train-error:0.027861#011validation-error:0.066066

[87]#011train-error:0.027861#011validation-error:0.066066

[88]#011train-error:0.02829#011validation-error:0.066066

[89]#011train-error:0.02829#011validation-error:0.066066

[90]#011train-error:0.027861#011validation-error:0.066066

[91]#011train-error:0.027861#011validation-error:0.066066

[92]#011train-error:0.02829#011validation-error:0.066066

[93]#011train-error:0.027861#011validation-error:0.066066

[94]#011train-error:0.027861#011validation-error:0.066066

[95]#011train-error:0.027861#011validation-error:0.066066

[96]#011train-error:0.02829#011validation-error:0.066066

[97]#011train-error:0.02829#011validation-error:0.066066

[98]#011train-error:0.02829#011validation-error:0.066066

[99]#011train-error:0.02829#011validation-error:0.066066

[100]#011train-error:0.027432#011validation-error:0.064565

[101]#011train-error:0.027432#011validation-error:0.064565

[102]#011train-error:0.027432#011validation-error:0.064565

[103]#011train-error:0.027432#011validation-error:0.064565

[104]#011train-error:0.027432#011validation-error:0.064565

[105]#011train-error:0.027861#011validation-error:0.063063

[106]#011train-error:0.027861#011validation-error:0.063063

[107]#011train-error:0.027861#011validation-error:0.063063

[108]#011train-error:0.027861#011validation-error:0.063063

[109]#011train-error:0.027861#011validation-error:0.063063

[110]#011train-error:0.02829#011validation-error:0.063063

[111]#011train-error:0.02829#011validation-error:0.061562

[112]#011train-error:0.02829#011validation-error:0.063063

[113]#011train-error:0.02829#011validation-error:0.063063

[114]#011train-error:0.02829#011validation-error:0.063063

[115]#011train-error:0.02829#011validation-error:0.063063

[116]#011train-error:0.02829#011validation-error:0.063063

[117]#011train-error:0.027432#011validation-error:0.063063

[118]#011train-error:0.027432#011validation-error:0.063063

[119]#011train-error:0.027004#011validation-error:0.063063

[120]#011train-error:0.027004#011validation-error:0.063063

[121]#011train-error:0.027004#011validation-error:0.063063

[122]#011train-error:0.027432#011validation-error:0.063063

[123]#011train-error:0.027432#011validation-error:0.063063

[124]#011train-error:0.027432#011validation-error:0.063063

[125]#011train-error:0.027004#011validation-error:0.063063

[126]#011train-error:0.027004#011validation-error:0.063063

[127]#011train-error:0.027004#011validation-error:0.063063

[128]#011train-error:0.027432#011validation-error:0.063063

[129]#011train-error:0.027432#011validation-error:0.063063

[130]#011train-error:0.027432#011validation-error:0.063063

[131]#011train-error:0.027432#011validation-error:0.063063

[132]#011train-error:0.027432#011validation-error:0.063063

[133]#011train-error:0.027432#011validation-error:0.063063

[134]#011train-error:0.027432#011validation-error:0.063063

[135]#011train-error:0.027432#011validation-error:0.063063

[136]#011train-error:0.027432#011validation-error:0.063063

[137]#011train-error:0.027432#011validation-error:0.063063

[138]#011train-error:0.027432#011validation-error:0.063063

[139]#011train-error:0.027432#011validation-error:0.063063

[140]#011train-error:0.027432#011validation-error:0.064565

[141]#011train-error:0.027432#011validation-error:0.064565

[142]#011train-error:0.027432#011validation-error:0.063063

[143]#011train-error:0.027432#011validation-error:0.064565

[144]#011train-error:0.027432#011validation-error:0.064565

[145]#011train-error:0.027432#011validation-error:0.066066

[146]#011train-error:0.027432#011validation-error:0.066066

[147]#011train-error:0.027004#011validation-error:0.067568

[148]#011train-error:0.027004#011validation-error:0.064565

[149]#011train-error:0.027004#011validation-error:0.064565

[150]#011train-error:0.027004#011validation-error:0.067568

[151]#011train-error:0.027004#011validation-error:0.067568

[152]#011train-error:0.027432#011validation-error:0.069069

[153]#011train-error:0.027004#011validation-error:0.067568

[154]#011train-error:0.027432#011validation-error:0.069069

[155]#011train-error:0.027004#011validation-error:0.067568

[156]#011train-error:0.027004#011validation-error:0.067568

[157]#011train-error:0.027004#011validation-error:0.067568

[158]#011train-error:0.027004#011validation-error:0.069069

[159]#011train-error:0.027004#011validation-error:0.069069

[160]#011train-error:0.027004#011validation-error:0.069069

[161]#011train-error:0.027004#011validation-error:0.069069

[162]#011train-error:0.027004#011validation-error:0.069069

[163]#011train-error:0.027004#011validation-error:0.069069

[164]#011train-error:0.027004#011validation-error:0.069069

[165]#011train-error:0.027432#011validation-error:0.067568

[166]#011train-error:0.027432#011validation-error:0.067568

[167]#011train-error:0.027432#011validation-error:0.067568

[168]#011train-error:0.027432#011validation-error:0.067568

[169]#011train-error:0.027432#011validation-error:0.067568

[170]#011train-error:0.027432#011validation-error:0.067568

[171]#011train-error:0.027861#011validation-error:0.067568

[172]#011train-error:0.027861#011validation-error:0.067568

[173]#011train-error:0.027432#011validation-error:0.067568

[174]#011train-error:0.027432#011validation-error:0.067568

[175]#011train-error:0.027432#011validation-error:0.067568

[176]#011train-error:0.027432#011validation-error:0.067568

[177]#011train-error:0.027432#011validation-error:0.067568

[178]#011train-error:0.027432#011validation-error:0.067568

[179]#011train-error:0.026575#011validation-error:0.066066

[180]#011train-error:0.026575#011validation-error:0.066066

[181]#011train-error:0.027004#011validation-error:0.066066

[182]#011train-error:0.026575#011validation-error:0.064565

[183]#011train-error:0.027004#011validation-error:0.064565

[184]#011train-error:0.026147#011validation-error:0.064565

[185]#011train-error:0.026147#011validation-error:0.063063

[186]#011train-error:0.026147#011validation-error:0.063063

[187]#011train-error:0.026147#011validation-error:0.063063

[188]#011train-error:0.026147#011validation-error:0.063063

[189]#011train-error:0.026147#011validation-error:0.063063

[190]#011train-error:0.026147#011validation-error:0.061562

[191]#011train-error:0.026147#011validation-error:0.063063

[192]#011train-error:0.026147#011validation-error:0.061562

[193]#011train-error:0.025289#011validation-error:0.063063

[194]#011train-error:0.025289#011validation-error:0.063063

[195]#011train-error:0.025289#011validation-error:0.063063

[196]#011train-error:0.025289#011validation-error:0.063063

[197]#011train-error:0.025289#011validation-error:0.063063

[198]#011train-error:0.025289#011validation-error:0.063063

[199]#011train-error:0.025289#011validation-error:0.063063

[200]#011train-error:0.025289#011validation-error:0.063063

[201]#011train-error:0.025289#011validation-error:0.063063

[202]#011train-error:0.025289#011validation-error:0.063063

[203]#011train-error:0.025289#011validation-error:0.063063

[204]#011train-error:0.025289#011validation-error:0.063063

[205]#011train-error:0.025289#011validation-error:0.063063

[206]#011train-error:0.024861#011validation-error:0.063063

[207]#011train-error:0.024861#011validation-error:0.061562

[208]#011train-error:0.024861#011validation-error:0.063063

[209]#011train-error:0.024861#011validation-error:0.063063

[210]#011train-error:0.024861#011validation-error:0.061562

[211]#011train-error:0.024861#011validation-error:0.063063

[212]#011train-error:0.025289#011validation-error:0.063063

[213]#011train-error:0.025289#011validation-error:0.063063

[214]#011train-error:0.025289#011validation-error:0.06006

[215]#011train-error:0.025289#011validation-error:0.06006

[216]#011train-error:0.025289#011validation-error:0.06006

[217]#011train-error:0.025289#011validation-error:0.06006

[218]#011train-error:0.025289#011validation-error:0.06006

[219]#011train-error:0.025289#011validation-error:0.06006

[220]#011train-error:0.025289#011validation-error:0.06006

[221]#011train-error:0.025289#011validation-error:0.06006

[222]#011train-error:0.025289#011validation-error:0.06006

[223]#011train-error:0.025289#011validation-error:0.06006

[224]#011train-error:0.025289#011validation-error:0.06006

[225]#011train-error:0.025289#011validation-error:0.06006

[226]#011train-error:0.025289#011validation-error:0.06006

[227]#011train-error:0.025718#011validation-error:0.06006

[228]#011train-error:0.025289#011validation-error:0.06006

[229]#011train-error:0.025289#011validation-error:0.06006

[230]#011train-error:0.025289#011validation-error:0.06006

[231]#011train-error:0.025289#011validation-error:0.061562

[232]#011train-error:0.025289#011validation-error:0.06006

[233]#011train-error:0.025289#011validation-error:0.06006

[234]#011train-error:0.024861#011validation-error:0.06006

[235]#011train-error:0.024861#011validation-error:0.06006

[236]#011train-error:0.025289#011validation-error:0.06006

[237]#011train-error:0.025289#011validation-error:0.06006

[238]#011train-error:0.025289#011validation-error:0.06006

[239]#011train-error:0.025289#011validation-error:0.06006

[240]#011train-error:0.025289#011validation-error:0.061562

[241]#011train-error:0.025289#011validation-error:0.061562

[242]#011train-error:0.025289#011validation-error:0.061562

[243]#011train-error:0.025289#011validation-error:0.061562

[244]#011train-error:0.025289#011validation-error:0.061562

[245]#011train-error:0.025289#011validation-error:0.063063

[246]#011train-error:0.025289#011validation-error:0.06006

[247]#011train-error:0.024861#011validation-error:0.06006

[248]#011train-error:0.024861#011validation-error:0.06006

[249]#011train-error:0.025289#011validation-error:0.06006

[250]#011train-error:0.024861#011validation-error:0.06006

[251]#011train-error:0.025289#011validation-error:0.06006

[252]#011train-error:0.025289#011validation-error:0.06006

[253]#011train-error:0.025289#011validation-error:0.06006

[254]#011train-error:0.024861#011validation-error:0.06006

[255]#011train-error:0.024861#011validation-error:0.06006

[256]#011train-error:0.024432#011validation-error:0.06006

[257]#011train-error:0.024861#011validation-error:0.06006

[258]#011train-error:0.024861#011validation-error:0.06006

[259]#011train-error:0.024861#011validation-error:0.06006

[260]#011train-error:0.024861#011validation-error:0.06006

[261]#011train-error:0.024861#011validation-error:0.06006

[262]#011train-error:0.024432#011validation-error:0.06006

[263]#011train-error:0.024861#011validation-error:0.06006

[264]#011train-error:0.024861#011validation-error:0.06006

[265]#011train-error:0.025289#011validation-error:0.06006

[266]#011train-error:0.025289#011validation-error:0.06006

[267]#011train-error:0.025289#011validation-error:0.06006

[268]#011train-error:0.024861#011validation-error:0.06006

[269]#011train-error:0.024861#011validation-error:0.06006

[270]#011train-error:0.024861#011validation-error:0.06006

[271]#011train-error:0.024861#011validation-error:0.06006

[272]#011train-error:0.024861#011validation-error:0.06006

[273]#011train-error:0.025289#011validation-error:0.06006

[274]#011train-error:0.024861#011validation-error:0.06006

[275]#011train-error:0.024432#011validation-error:0.06006

[276]#011train-error:0.023575#011validation-error:0.06006

[277]#011train-error:0.023575#011validation-error:0.06006

[278]#011train-error:0.023575#011validation-error:0.06006

[279]#011train-error:0.023575#011validation-error:0.063063

[280]#011train-error:0.023575#011validation-error:0.06006

[281]#011train-error:0.023575#011validation-error:0.06006

[282]#011train-error:0.023575#011validation-error:0.06006

[283]#011train-error:0.023575#011validation-error:0.06006

[284]#011train-error:0.023575#011validation-error:0.06006

[285]#011train-error:0.023575#011validation-error:0.06006

[286]#011train-error:0.023575#011validation-error:0.06006

[287]#011train-error:0.023575#011validation-error:0.06006

[288]#011train-error:0.023575#011validation-error:0.06006

[289]#011train-error:0.023575#011validation-error:0.06006

[290]#011train-error:0.023575#011validation-error:0.06006

[291]#011train-error:0.023575#011validation-error:0.06006

[292]#011train-error:0.023575#011validation-error:0.06006

[293]#011train-error:0.023575#011validation-error:0.06006

[294]#011train-error:0.023575#011validation-error:0.06006

[295]#011train-error:0.023575#011validation-error:0.063063

[296]#011train-error:0.023575#011validation-error:0.063063

[297]#011train-error:0.023575#011validation-error:0.061562

[298]#011train-error:0.023575#011validation-error:0.061562

[299]#011train-error:0.023575#011validation-error:0.061562

[300]#011train-error:0.023575#011validation-error:0.061562

[301]#011train-error:0.023575#011validation-error:0.061562

[302]#011train-error:0.023575#011validation-error:0.061562

[303]#011train-error:0.023575#011validation-error:0.061562

[304]#011train-error:0.023146#011validation-error:0.064565

[305]#011train-error:0.023146#011validation-error:0.064565

[306]#011train-error:0.023146#011validation-error:0.064565

[307]#011train-error:0.023575#011validation-error:0.064565

[308]#011train-error:0.023575#011validation-error:0.064565

[309]#011train-error:0.023575#011validation-error:0.064565

[310]#011train-error:0.023575#011validation-error:0.064565

[311]#011train-error:0.023146#011validation-error:0.063063

[312]#011train-error:0.023146#011validation-error:0.063063

[313]#011train-error:0.023146#011validation-error:0.063063

[314]#011train-error:0.023146#011validation-error:0.063063

[315]#011train-error:0.022718#011validation-error:0.063063

[316]#011train-error:0.022718#011validation-error:0.064565

[317]#011train-error:0.022718#011validation-error:0.063063

[318]#011train-error:0.022718#011validation-error:0.063063

[319]#011train-error:0.023146#011validation-error:0.063063

[320]#011train-error:0.023146#011validation-error:0.063063

[321]#011train-error:0.023146#011validation-error:0.063063

[322]#011train-error:0.023146#011validation-error:0.063063

[323]#011train-error:0.023146#011validation-error:0.063063

[324]#011train-error:0.023146#011validation-error:0.063063

[325]#011train-error:0.022718#011validation-error:0.064565

[326]#011train-error:0.022718#011validation-error:0.064565

[327]#011train-error:0.022718#011validation-error:0.064565

[328]#011train-error:0.022718#011validation-error:0.064565

[329]#011train-error:0.022718#011validation-error:0.063063

[330]#011train-error:0.023146#011validation-error:0.063063

[331]#011train-error:0.023146#011validation-error:0.063063

[332]#011train-error:0.023146#011validation-error:0.063063

[333]#011train-error:0.023146#011validation-error:0.063063

[334]#011train-error:0.023146#011validation-error:0.063063

[335]#011train-error:0.023146#011validation-error:0.063063

[336]#011train-error:0.023146#011validation-error:0.063063

[337]#011train-error:0.023146#011validation-error:0.063063

[338]#011train-error:0.023146#011validation-error:0.063063

[339]#011train-error:0.023146#011validation-error:0.063063

[340]#011train-error:0.022718#011validation-error:0.063063

[341]#011train-error:0.022718#011validation-error:0.064565

[342]#011train-error:0.023146#011validation-error:0.063063

[343]#011train-error:0.023146#011validation-error:0.063063

[344]#011train-error:0.022718#011validation-error:0.063063

[345]#011train-error:0.023146#011validation-error:0.063063

[346]#011train-error:0.023146#011validation-error:0.063063

[347]#011train-error:0.023146#011validation-error:0.063063

[348]#011train-error:0.023146#011validation-error:0.063063

[349]#011train-error:0.023146#011validation-error:0.063063

[350]#011train-error:0.023146#011validation-error:0.063063

[351]#011train-error:0.023146#011validation-error:0.063063

[352]#011train-error:0.023146#011validation-error:0.063063

[353]#011train-error:0.023146#011validation-error:0.063063

[354]#011train-error:0.022718#011validation-error:0.063063

[355]#011train-error:0.023146#011validation-error:0.063063

[356]#011train-error:0.023146#011validation-error:0.063063

[357]#011train-error:0.022718#011validation-error:0.063063

[358]#011train-error:0.022289#011validation-error:0.061562

[359]#011train-error:0.022718#011validation-error:0.061562

[360]#011train-error:0.022718#011validation-error:0.061562

[361]#011train-error:0.022718#011validation-error:0.061562

[362]#011train-error:0.022718#011validation-error:0.061562

[363]#011train-error:0.022718#011validation-error:0.061562

[364]#011train-error:0.022289#011validation-error:0.063063

[365]#011train-error:0.023146#011validation-error:0.063063

[366]#011train-error:0.022718#011validation-error:0.063063

[367]#011train-error:0.022289#011validation-error:0.063063

[368]#011train-error:0.022289#011validation-error:0.061562

[369]#011train-error:0.022289#011validation-error:0.061562

[370]#011train-error:0.022289#011validation-error:0.061562

[371]#011train-error:0.022718#011validation-error:0.061562

[372]#011train-error:0.022289#011validation-error:0.061562

[373]#011train-error:0.022289#011validation-error:0.061562

[374]#011train-error:0.022289#011validation-error:0.061562

[375]#011train-error:0.022718#011validation-error:0.061562

[376]#011train-error:0.022718#011validation-error:0.061562

[377]#011train-error:0.022718#011validation-error:0.061562

[378]#011train-error:0.022718#011validation-error:0.061562

[379]#011train-error:0.022718#011validation-error:0.061562

[380]#011train-error:0.022718#011validation-error:0.061562

[381]#011train-error:0.022718#011validation-error:0.061562

[382]#011train-error:0.022718#011validation-error:0.061562

[383]#011train-error:0.022718#011validation-error:0.061562

[384]#011train-error:0.022289#011validation-error:0.063063

[385]#011train-error:0.022289#011validation-error:0.061562

[386]#011train-error:0.023146#011validation-error:0.063063

[387]#011train-error:0.023146#011validation-error:0.063063

[388]#011train-error:0.022289#011validation-error:0.063063

[389]#011train-error:0.022718#011validation-error:0.061562

[390]#011train-error:0.022718#011validation-error:0.061562

[391]#011train-error:0.022718#011validation-error:0.061562

[392]#011train-error:0.022718#011validation-error:0.061562

[393]#011train-error:0.022718#011validation-error:0.061562

[394]#011train-error:0.022718#011validation-error:0.061562

[395]#011train-error:0.022718#011validation-error:0.061562

[396]#011train-error:0.022718#011validation-error:0.061562

[397]#011train-error:0.022718#011validation-error:0.061562

[398]#011train-error:0.022289#011validation-error:0.061562

[399]#011train-error:0.022289#011validation-error:0.061562

[400]#011train-error:0.021432#011validation-error:0.061562

[401]#011train-error:0.021432#011validation-error:0.061562

[402]#011train-error:0.021432#011validation-error:0.061562

[403]#011train-error:0.021432#011validation-error:0.061562

[404]#011train-error:0.021432#011validation-error:0.061562

[405]#011train-error:0.021432#011validation-error:0.061562

[406]#011train-error:0.021432#011validation-error:0.061562

[407]#011train-error:0.021432#011validation-error:0.061562

[408]#011train-error:0.021432#011validation-error:0.061562

[409]#011train-error:0.021432#011validation-error:0.061562

[410]#011train-error:0.021432#011validation-error:0.061562

[411]#011train-error:0.021432#011validation-error:0.061562

[412]#011train-error:0.021432#011validation-error:0.061562

[413]#011train-error:0.021432#011validation-error:0.061562

[414]#011train-error:0.021432#011validation-error:0.061562

[415]#011train-error:0.021432#011validation-error:0.061562

[416]#011train-error:0.021432#011validation-error:0.061562

[417]#011train-error:0.021432#011validation-error:0.061562

[418]#011train-error:0.021432#011validation-error:0.061562

[419]#011train-error:0.021432#011validation-error:0.061562

[420]#011train-error:0.021432#011validation-error:0.061562

[421]#011train-error:0.021432#011validation-error:0.061562

[422]#011train-error:0.021432#011validation-error:0.061562

[423]#011train-error:0.021432#011validation-error:0.061562

[424]#011train-error:0.021432#011validation-error:0.061562

[425]#011train-error:0.021432#011validation-error:0.061562

[426]#011train-error:0.021432#011validation-error:0.061562

[427]#011train-error:0.021432#011validation-error:0.061562

[428]#011train-error:0.021432#011validation-error:0.061562

[429]#011train-error:0.021432#011validation-error:0.061562

[430]#011train-error:0.021432#011validation-error:0.061562

[431]#011train-error:0.021432#011validation-error:0.061562

[432]#011train-error:0.021432#011validation-error:0.061562

[433]#011train-error:0.021432#011validation-error:0.061562

[434]#011train-error:0.021432#011validation-error:0.061562

[435]#011train-error:0.021432#011validation-error:0.061562

[436]#011train-error:0.021432#011validation-error:0.061562

[437]#011train-error:0.021432#011validation-error:0.061562

[438]#011train-error:0.021432#011validation-error:0.061562

[439]#011train-error:0.021432#011validation-error:0.061562

[440]#011train-error:0.021432#011validation-error:0.061562

[441]#011train-error:0.021432#011validation-error:0.061562

[442]#011train-error:0.021432#011validation-error:0.061562

[443]#011train-error:0.021432#011validation-error:0.061562

[444]#011train-error:0.021432#011validation-error:0.061562

[445]#011train-error:0.021432#011validation-error:0.061562

[446]#011train-error:0.021432#011validation-error:0.061562

[447]#011train-error:0.021432#011validation-error:0.061562

[448]#011train-error:0.021432#011validation-error:0.061562

[449]#011train-error:0.021432#011validation-error:0.061562

[450]#011train-error:0.021432#011validation-error:0.061562

[451]#011train-error:0.021432#011validation-error:0.061562

[452]#011train-error:0.021432#011validation-error:0.061562

[453]#011train-error:0.021432#011validation-error:0.061562

[454]#011train-error:0.021432#011validation-error:0.063063

[455]#011train-error:0.021432#011validation-error:0.061562

[456]#011train-error:0.021432#011validation-error:0.061562

[457]#011train-error:0.021432#011validation-error:0.061562

[458]#011train-error:0.021432#011validation-error:0.061562

[459]#011train-error:0.021432#011validation-error:0.061562

[460]#011train-error:0.021432#011validation-error:0.061562

[461]#011train-error:0.021432#011validation-error:0.061562

[462]#011train-error:0.021432#011validation-error:0.061562

[463]#011train-error:0.021432#011validation-error:0.061562

[464]#011train-error:0.021432#011validation-error:0.061562

[465]#011train-error:0.021432#011validation-error:0.061562

[466]#011train-error:0.021432#011validation-error:0.061562

[467]#011train-error:0.021432#011validation-error:0.061562

[468]#011train-error:0.021432#011validation-error:0.061562

[469]#011train-error:0.021432#011validation-error:0.061562

[470]#011train-error:0.021432#011validation-error:0.061562

[471]#011train-error:0.021432#011validation-error:0.061562

[472]#011train-error:0.021432#011validation-error:0.06006

[473]#011train-error:0.021432#011validation-error:0.06006

[474]#011train-error:0.021432#011validation-error:0.06006

[475]#011train-error:0.021432#011validation-error:0.06006

[476]#011train-error:0.021432#011validation-error:0.06006

[477]#011train-error:0.021432#011validation-error:0.06006

[478]#011train-error:0.021432#011validation-error:0.06006

[479]#011train-error:0.021432#011validation-error:0.06006

[480]#011train-error:0.021432#011validation-error:0.06006

[481]#011train-error:0.021432#011validation-error:0.06006

[482]#011train-error:0.02186#011validation-error:0.06006

[483]#011train-error:0.021432#011validation-error:0.06006

[484]#011train-error:0.021003#011validation-error:0.058559

[485]#011train-error:0.021003#011validation-error:0.058559

[486]#011train-error:0.021432#011validation-error:0.058559

[487]#011train-error:0.021432#011validation-error:0.058559

[488]#011train-error:0.021003#011validation-error:0.058559

[489]#011train-error:0.021432#011validation-error:0.058559

[490]#011train-error:0.021003#011validation-error:0.058559

[491]#011train-error:0.021003#011validation-error:0.058559

[492]#011train-error:0.021003#011validation-error:0.058559

[493]#011train-error:0.021003#011validation-error:0.06006

[494]#011train-error:0.021003#011validation-error:0.058559

[495]#011train-error:0.021003#011validation-error:0.058559

[496]#011train-error:0.021003#011validation-error:0.058559

[497]#011train-error:0.021003#011validation-error:0.058559

[498]#011train-error:0.021003#011validation-error:0.058559

[499]#011train-error:0.021003#011validation-error:0.058559

[500]#011train-error:0.021003#011validation-error:0.058559

[501]#011train-error:0.021003#011validation-error:0.058559

[502]#011train-error:0.021003#011validation-error:0.058559

[503]#011train-error:0.021003#011validation-error:0.058559

[504]#011train-error:0.021003#011validation-error:0.058559

[505]#011train-error:0.021003#011validation-error:0.058559

[506]#011train-error:0.020574#011validation-error:0.06006

[507]#011train-error:0.020574#011validation-error:0.06006

[508]#011train-error:0.020574#011validation-error:0.06006

[509]#011train-error:0.020574#011validation-error:0.06006

[510]#011train-error:0.020574#011validation-error:0.06006

[511]#011train-error:0.020574#011validation-error:0.06006

[512]#011train-error:0.020574#011validation-error:0.06006

[513]#011train-error:0.020574#011validation-error:0.06006

[514]#011train-error:0.020574#011validation-error:0.06006

[515]#011train-error:0.020574#011validation-error:0.06006

[516]#011train-error:0.021003#011validation-error:0.06006

[517]#011train-error:0.021003#011validation-error:0.06006

[518]#011train-error:0.021003#011validation-error:0.06006

[519]#011train-error:0.021003#011validation-error:0.06006

[520]#011train-error:0.021003#011validation-error:0.06006

[521]#011train-error:0.020574#011validation-error:0.06006

[522]#011train-error:0.021003#011validation-error:0.06006

[523]#011train-error:0.021003#011validation-error:0.06006

[524]#011train-error:0.021003#011validation-error:0.06006

[525]#011train-error:0.021003#011validation-error:0.06006

[526]#011train-error:0.021003#011validation-error:0.06006

[527]#011train-error:0.021432#011validation-error:0.061562

[528]#011train-error:0.021432#011validation-error:0.061562

[529]#011train-error:0.021432#011validation-error:0.061562

[530]#011train-error:0.021432#011validation-error:0.061562

[531]#011train-error:0.021432#011validation-error:0.061562

[532]#011train-error:0.021432#011validation-error:0.061562

[533]#011train-error:0.021432#011validation-error:0.061562

[534]#011train-error:0.021432#011validation-error:0.061562

[535]#011train-error:0.021432#011validation-error:0.061562

[536]#011train-error:0.021432#011validation-error:0.061562

[537]#011train-error:0.021432#011validation-error:0.061562

[538]#011train-error:0.021432#011validation-error:0.061562

[539]#011train-error:0.021432#011validation-error:0.061562

[540]#011train-error:0.021432#011validation-error:0.061562

[541]#011train-error:0.021003#011validation-error:0.061562

[542]#011train-error:0.021432#011validation-error:0.061562

[543]#011train-error:0.021003#011validation-error:0.061562

[544]#011train-error:0.021003#011validation-error:0.061562

[545]#011train-error:0.021003#011validation-error:0.061562

[546]#011train-error:0.021003#011validation-error:0.061562

[547]#011train-error:0.021003#011validation-error:0.061562

[548]#011train-error:0.021003#011validation-error:0.061562

[549]#011train-error:0.021432#011validation-error:0.061562

[550]#011train-error:0.021432#011validation-error:0.061562

[551]#011train-error:0.021003#011validation-error:0.061562

[552]#011train-error:0.021003#011validation-error:0.061562

[553]#011train-error:0.021003#011validation-error:0.061562

[554]#011train-error:0.021003#011validation-error:0.061562

[555]#011train-error:0.021003#011validation-error:0.061562

[556]#011train-error:0.021003#011validation-error:0.061562

[557]#011train-error:0.021003#011validation-error:0.061562

[558]#011train-error:0.021003#011validation-error:0.061562

[559]#011train-error:0.021003#011validation-error:0.06006

[560]#011train-error:0.021003#011validation-error:0.06006

[561]#011train-error:0.021003#011validation-error:0.06006

[562]#011train-error:0.021003#011validation-error:0.061562

[563]#011train-error:0.021003#011validation-error:0.061562

[564]#011train-error:0.021003#011validation-error:0.061562

[565]#011train-error:0.021003#011validation-error:0.061562

[566]#011train-error:0.021003#011validation-error:0.061562

[567]#011train-error:0.021003#011validation-error:0.061562

[568]#011train-error:0.021003#011validation-error:0.061562

[569]#011train-error:0.020574#011validation-error:0.061562

[570]#011train-error:0.020574#011validation-error:0.061562

[571]#011train-error:0.020574#011validation-error:0.061562

[572]#011train-error:0.020574#011validation-error:0.061562

[573]#011train-error:0.020574#011validation-error:0.061562

[574]#011train-error:0.020574#011validation-error:0.061562

[575]#011train-error:0.020574#011validation-error:0.061562

[576]#011train-error:0.021003#011validation-error:0.061562

[577]#011train-error:0.020574#011validation-error:0.061562

[578]#011train-error:0.021003#011validation-error:0.061562

[579]#011train-error:0.021003#011validation-error:0.061562

[580]#011train-error:0.020574#011validation-error:0.061562

[581]#011train-error:0.020574#011validation-error:0.061562

[582]#011train-error:0.020574#011validation-error:0.061562

[583]#011train-error:0.020574#011validation-error:0.061562

[584]#011train-error:0.020574#011validation-error:0.061562

[585]#011train-error:0.021003#011validation-error:0.061562

[586]#011train-error:0.021003#011validation-error:0.061562

[587]#011train-error:0.021003#011validation-error:0.061562

[588]#011train-error:0.021003#011validation-error:0.061562

[589]#011train-error:0.020574#011validation-error:0.061562

[590]#011train-error:0.020574#011validation-error:0.061562

[591]#011train-error:0.020574#011validation-error:0.061562

[592]#011train-error:0.020574#011validation-error:0.061562

[593]#011train-error:0.020574#011validation-error:0.061562

[594]#011train-error:0.020574#011validation-error:0.061562

[595]#011train-error:0.020574#011validation-error:0.061562

[596]#011train-error:0.020574#011validation-error:0.061562

[597]#011train-error:0.020574#011validation-error:0.061562

[598]#011train-error:0.020574#011validation-error:0.061562

[599]#011train-error:0.021003#011validation-error:0.061562

Training seconds: 175

Billable seconds: 175

Review the results

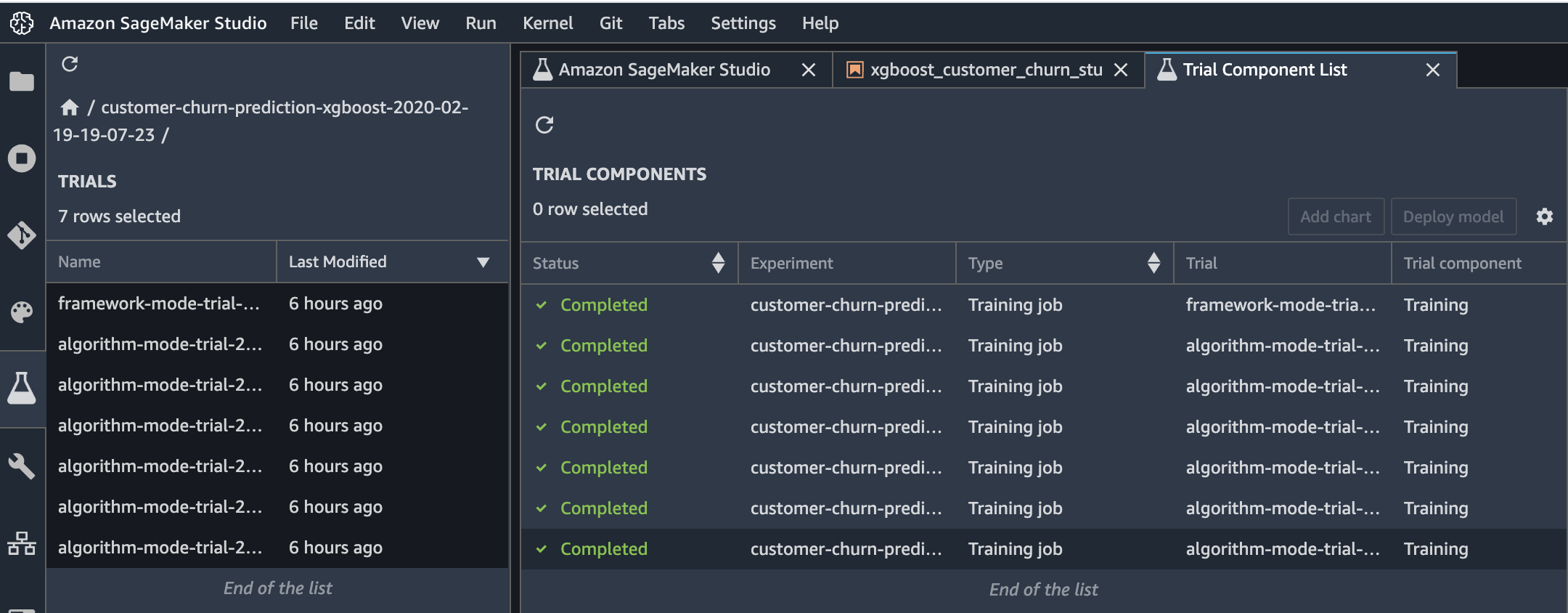

After the training job succeeds, you can view metrics, logs, and graphs for the trial on the Experiments tab in Studio.

To view them, choose the Experiments button.

To see the components of a specific experiment, double-click it in the Experiments list. To see the components of multiple experiments, select them with Ctrl-click, then right-click one of them to open the context menu. To see all of the components together, choose “Open in trial component list”. This enables charting across experiments.

The components are sorted so that the best model is at the top.
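If you prefer to inspect the raw training log instead of the Studio UI, the per-round lines shown earlier can be parsed with a few lines of Python. The following is a minimal sketch, assuming the log text has the same `[round]#011train-error:...#011validation-error:...` shape as the output above (`#011` is how a tab character appears in the captured log). The helper names `parse_log` and `best_round` are made up for this illustration.

```python
import re

# Matches lines such as "[599]#011train-error:0.021003#011validation-error:0.061562".
# The separator is accepted either as the literal text "#011" or as a real tab.
LOG_LINE = re.compile(
    r"\[(\d+)\](?:#011|\t)train-error:([0-9.]+)(?:#011|\t)validation-error:([0-9.]+)"
)


def parse_log(text):
    """Return a list of (round, train_error, validation_error) tuples."""
    rows = []
    for line in text.splitlines():
        match = LOG_LINE.search(line)
        if match:
            rows.append(
                (int(match.group(1)), float(match.group(2)), float(match.group(3)))
            )
    return rows


def best_round(rows):
    """Return the earliest row that has the lowest validation error."""
    return min(rows, key=lambda row: (row[2], row[0]))


# Three lines copied from the training output above.
sample = """
[483]#011train-error:0.021432#011validation-error:0.06006
[484]#011train-error:0.021003#011validation-error:0.058559
[599]#011train-error:0.021003#011validation-error:0.061562
"""

rows = parse_log(sample)
print(best_round(rows))  # (484, 0.021003, 0.058559)
```

In this sample, the lowest validation error is reached well before the final round, which is one reason to compare trials on more than the last reported value.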

Download the model

You can also find and download the model that was trained. To find the model, choose the Experiments icon in the left tray, and keep drilling down through the experiment, the most recent trial, and its most recent component until you see the Describe Trial Components page. To see links to the training and validation datasets, choose the Artifacts tab. The links are listed in the “Input Artifacts” section. The generated model artifact is in the “Output Artifacts” section.
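The model artifact can also be fetched programmatically. The sketch below assumes the artifact follows the `<output_path>/<job name>/output/model.tar.gz` layout and that your credentials can read the bucket. The bucket and prefix are placeholders, and the helper names are hypothetical. Only `download_model` needs boto3 and AWS access. The other helpers are plain string handling.

```python
def split_s3_uri(uri):
    """Split 's3://bucket/some/key' into (bucket, key)."""
    if not uri.startswith("s3://"):
        raise ValueError("not an S3 URI: {}".format(uri))
    bucket, _, key = uri[len("s3://"):].partition("/")
    return bucket, key


def model_artifact_uri(output_path, job_name):
    """Build the artifact URI, assuming <output_path>/<job_name>/output/model.tar.gz."""
    return "{}/{}/output/model.tar.gz".format(output_path.rstrip("/"), job_name)


def download_model(uri, filename="model.tar.gz"):
    """Download the artifact with boto3 (needs AWS credentials and S3 read access)."""
    import boto3  # imported here so the helpers above work without boto3 installed

    bucket, key = split_s3_uri(uri)
    boto3.client("s3").download_file(bucket, key, filename)
    return filename


# Placeholder bucket and prefix, for illustration only.
uri = model_artifact_uri(
    "s3://example-bucket/example-prefix/output",
    "demo-xgboost-customer-churn-2021-05-27-00-13-38-354",
)
print(split_s3_uri(uri))
```

In practice, copy the exact URI from the “Output Artifacts” section rather than rebuilding it, because that value is authoritative for your job.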

Trying other hyperparameter values

To improve a model, you typically try other hyperparameter values to see if they affect the final validation error. Let’s change the min_child_weight value and start other training jobs with those values to see how they affect the validation error. For each min_child_weight value, we’ll create a separate trial so that we can compare the results in Studio.

[12]:

min_child_weights = [1, 2, 4, 8, 10]

for weight in min_child_weights:
    hyperparams["min_child_weight"] = weight
    trial = Trial.create(
        trial_name="algorithm-mode-trial-{}-weight-{}".format(
            strftime("%Y-%m-%d-%H-%M-%S", gmtime()), weight
        ),
        experiment_name=customer_churn_experiment.experiment_name,
        sagemaker_boto_client=boto3.client("sagemaker"),
    )

    t_xgb = sagemaker.estimator.Estimator(
        image_name=docker_image_name,
        role=role,
        hyperparameters=hyperparams,
        train_instance_count=1,
        train_instance_type="ml.m4.xlarge",
        output_path="s3://{}/{}/output".format(bucket, prefix),
        base_job_name="demo-xgboost-customer-churn",
        sagemaker_session=sess,
    )

    t_xgb.fit(
        {"train": s3_input_train, "validation": s3_input_validation},
        wait=False,
        experiment_config={
            "ExperimentName": customer_churn_experiment.experiment_name,
            "TrialName": trial.trial_name,
            "TrialComponentDisplayName": "Training",
        },
    )

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-13-38-354

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-13-38-613

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-13-39-527

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-13-40-588

WARNING:sagemaker.estimator:Parameter image_name will be renamed to image_uri in SageMaker Python SDK v2.

INFO:sagemaker:Creating training-job with name: demo-xgboost-customer-churn-2021-05-27-00-13-41-332

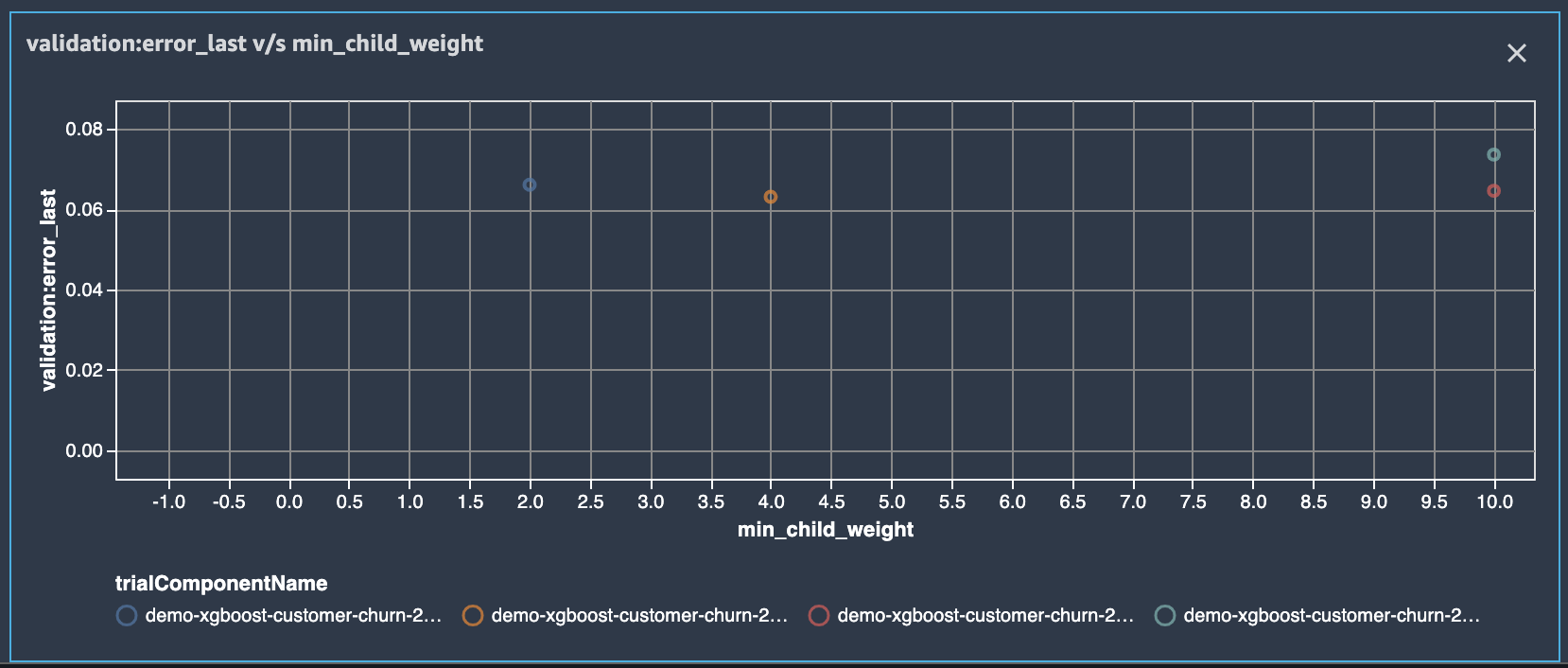

Create charts

To create charts, multi-select the components. Because this is a sample training run and the data is quite sparse, there’s not much to chart in this time series. However, you can create a scatter plot for the parameter sweep. The following image is an example.

How to create a scatter plot

Multi-select the components, then choose “Add chart”. In the Chart properties panel, for Data type, choose “Summary Statistics”. For Chart type, choose scatter plot. Then choose the min_child_weight hyperparameter as the X-axis (because this is the hyperparameter that you’re iterating on in this notebook). For Y-axis metrics, choose either validation:error_last or validation:error_avg, and then color them by trialComponentName.

You can also adjust the chart as you go by choosing other components and zooming in and out. Each item on the graph will display contextual info.
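To make the two Y-axis options concrete, the sketch below computes a "last" and an "average" value from a per-round validation error series for each min_child_weight value. It assumes that validation:error_last is the final reported value and validation:error_avg is the mean over the series. The error values are made up for illustration and are not results from this notebook. The `summarize` helper is hypothetical.

```python
from statistics import mean


def summarize(errors_by_weight):
    """Return one summary row per min_child_weight value."""
    rows = []
    for weight in sorted(errors_by_weight):
        errors = errors_by_weight[weight]
        rows.append(
            {
                "min_child_weight": weight,
                "validation:error_last": errors[-1],
                "validation:error_avg": mean(errors),
            }
        )
    return rows


# Made-up validation errors per boosting round, for illustration only.
errors_by_weight = {
    1: [0.09, 0.07, 0.065],
    4: [0.09, 0.07, 0.06],
    10: [0.10, 0.08, 0.07],
}

rows = summarize(errors_by_weight)
best = min(rows, key=lambda row: row["validation:error_last"])
print(best["min_child_weight"])  # 4
```

Each row corresponds to one point in the scatter plot: the hyperparameter value on the X-axis and one of the two summary values on the Y-axis.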

Amazon SageMaker Debugger

With Amazon SageMaker Debugger, you can debug models during training. During training, Debugger periodically saves tensors, which capture the state of the model at that point in time. Debugger saves the tensors to Amazon S3 for analysis and visualization. This allows you to diagnose training issues with Studio.

Specify Debugger rules

To enable automated detection of common issues during training, you can attach a list of rules to evaluate the training job against.

Some rule configurations that apply to XGBoost include AllZero, ClassImbalance, Confusion, LossNotDecreasing, Overfit, Overtraining, SimilarAcrossRuns, TensorVariance, UnchangedTensor, and TreeDepth.

Let’s use the LossNotDecreasing rule (which is triggered if the loss doesn’t decrease monotonically at any point during training), the Overtraining rule, and the Overfit rule.

[13]:

debug_rules = [
    Rule.sagemaker(rule_configs.loss_not_decreasing()),
    Rule.sagemaker(rule_configs.overtraining()),
    Rule.sagemaker(rule_configs.overfit()),
]
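To build intuition for what a rule such as LossNotDecreasing looks for, here is a deliberately simplified check written in plain Python. It is not the Debugger implementation. The function name and the `num_steps` and `min_decrease` parameters are hypothetical choices for this sketch, and the loss values are made up.

```python
def non_decreasing_steps(losses, num_steps=1, min_decrease=0.0):
    """Return the indices where the loss failed to drop.

    Index i is flagged when losses[i] is not lower than losses[i - num_steps]
    by more than min_decrease. Only every num_steps-th index is checked.
    """
    flagged = []
    for i in range(num_steps, len(losses), num_steps):
        if losses[i - num_steps] - losses[i] <= min_decrease:
            flagged.append(i)
    return flagged


# Made-up loss values: flat at index 3, rising at index 4.
losses = [0.30, 0.20, 0.15, 0.15, 0.16, 0.10]

print(non_decreasing_steps(losses))               # [3, 4]
print(non_decreasing_steps(losses, num_steps=2))  # [4]
```

Checking less often (a larger `num_steps`) makes the check less sensitive to short plateaus, which is the kind of trade-off a rule configuration has to make.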

Trial 2 - XGBoost in framework mode

For the next trial, we’ll train a similar model, but we’ll use XGBoost in framework mode. If you’ve worked with the open source XGBoost framework, this way of using XGBoost should be familiar to you. Using XGBoost as a framework provides more flexibility than using it as a built-in algorithm because it enables more advanced scenarios that allow you to incorporate preprocessing and post-processing scripts into your training script. Specifically, we’ll be able to specify a list of rules that we want Debugger to evaluate our training process against.

Fit estimator

To use XGBoost as a framework, you need to specify an entry-point script that can incorporate additional processing into your training jobs.

We’ve made a couple of simple changes to enable the Debugger library, smdebug. We created a Hook, which we pass as a callback function when training the Booster. We passed a SaveConfig object that tells the hook to save the evaluation metrics, feature importances, and SHAP values at regular intervals. Debugger is highly configurable, so you can choose exactly what to save. We describe the changes in more detail after we train this example. For even more detail, see the Developer Guide for XGBoost.

[14]:

!pygmentize xgboost_customer_churn.py

import argparse
import json
import os
import pickle
import random
import tempfile
import urllib.request

import xgboost
from smdebug import SaveConfig
from smdebug.xgboost import Hook


def parse_args():
    parser = argparse.ArgumentParser()

    parser.add_argument("--max_depth", type=int, default=5)
    parser.add_argument("--eta", type=float, default=0.2)
    parser.add_argument("--gamma", type=int, default=4)
    parser.add_argument("--min_child_weight", type=int, default=6)
    parser.add_argument("--subsample", type=float, default=0.8)
    parser.add_argument("--silent", type=int, default=0)
    parser.add_argument("--objective", type=str, default="binary:logistic")
    parser.add_argument("--num_round", type=int, default=50)
    parser.add_argument("--smdebug_path", type=str, default=None)
    parser.add_argument("--smdebug_frequency", type=int, default=1)
    parser.add_argument("--smdebug_collections", type=str, default="metrics")
    parser.add_argument(
        "--output_uri",
        type=str,
        default="/opt/ml/output/tensors",
        help="S3 URI of the bucket where tensor data will be stored.",
    )

    parser.add_argument("--train", type=str, default=os.environ.get("SM_CHANNEL_TRAIN"))
    parser.add_argument("--validation", type=str, default=os.environ.get("SM_CHANNEL_VALIDATION"))
    parser.add_argument("--model-dir", type=str, default=os.environ["SM_MODEL_DIR"])

    args = parser.parse_args()

    return args

def create_smdebug_hook(
    out_dir,
    train_data=None,
    validation_data=None,
    frequency=1,
    collections=None,
):
    save_config = SaveConfig(save_interval=frequency)
    hook = Hook(
        out_dir=out_dir,
        train_data=train_data,
        validation_data=validation_data,
        save_config=save_config,
        include_collections=collections,
    )

    return hook

def main():
    args = parse_args()

    train, validation = args.train, args.validation
    parse_csv = "?format=csv&label_column=0"
    dtrain = xgboost.DMatrix(train + parse_csv)
    dval = xgboost.DMatrix(validation + parse_csv)

    watchlist = [(dtrain, "train"), (dval, "validation")]

    params = {
        "max_depth": args.max_depth,
        "eta": args.eta,
        "gamma": args.gamma,
        "min_child_weight": args.min_child_weight,
        "subsample": args.subsample,
        "silent": args.silent,
        "objective": args.objective,
    }

    # The output_uri is the URI for the S3 bucket where the metrics will be
    # saved.
    output_uri = args.smdebug_path if args.smdebug_path is not None else args.output_uri

    collections = (
        args.smdebug_collections.split(",") if args.smdebug_collections is not None else None
    )

    hook = create_smdebug_hook(
        out_dir=output_uri,
        frequency=args.smdebug_frequency,
        collections=collections,
        train_data=dtrain,
        validation_data=dval,
    )

    bst = xgboost.train(
        params=params,
        dtrain=dtrain,
        evals=watchlist,
        num_boost_round=args.num_round,
        callbacks=[hook],
    )

    if not os.path.exists(args.model_dir):
        os.makedirs(args.model_dir)

    # Save the trained Booster as a pickle file in the model directory.
    model_location = os.path.join(args.model_dir, "xgboost-model")
    with open(model_location, "wb") as model_file:
        pickle.dump(bst, model_file)


if __name__ == "__main__":
    main()

def model_fn(model_dir):
    """Load a model. For XGBoost Framework, a default function to load a model is not provided.

    Users should provide customized model_fn() in script.

    Args:
        model_dir: a directory where model is saved.

    Returns:
        An XGBoost model.
        XGBoost model format type.
    """
    model_files = (
        file for file in os.listdir(model_dir) if os.path.isfile(os.path.join(model_dir, file))
    )
    model_file = next(model_files)
    try:
        # Try the pickle format first; close the file handle when done.
        with open(os.path.join(model_dir, model_file), "rb") as f:
            booster = pickle.load(f)
        format = "pkl_format"
    except Exception as exp_pkl:
        try:
            # Fall back to the native XGBoost model format.
            booster = xgboost.Booster()
            booster.load_model(os.path.join(model_dir, model_file))
            format = "xgb_format"
        except Exception as exp_xgb:
            raise ModelLoadInferenceError(
                "Unable to load model: {} {}".format(str(exp_pkl), str(exp_xgb))
            )
    booster.set_param("nthread", 1)
    return booster, format
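The try-one-format-then-fall-back logic in model_fn can be seen in isolation with a minimal sketch. It uses only the standard library: pickle plays the role of the first format and JSON is merely a stand-in for a second, native model format. The helper name `load_with_fallback`, the JSON branch, and the sample "models" are assumptions for illustration and are not part of the training script.

```python
import json
import os
import pickle
import tempfile


def load_with_fallback(path):
    """Try pickle first, then JSON; report both errors if neither works.

    Hypothetical helper: JSON only stands in for a second model format.
    """
    try:
        with open(path, "rb") as f:
            return pickle.load(f), "pkl_format"
    except Exception as exp_pkl:
        try:
            with open(path, "r", encoding="utf-8") as f:
                return json.load(f), "json_format"
        except Exception as exp_json:
            raise RuntimeError("Unable to load model: {} {}".format(exp_pkl, exp_json))


with tempfile.TemporaryDirectory() as tmp:
    # One file in each format, so both branches are exercised.
    pkl_path = os.path.join(tmp, "model.pkl")
    with open(pkl_path, "wb") as f:
        pickle.dump({"weights": [1, 2, 3]}, f)

    json_path = os.path.join(tmp, "model.json")
    with open(json_path, "w", encoding="utf-8") as f:
        json.dump({"weights": [4, 5, 6]}, f)

    pkl_result = load_with_fallback(pkl_path)
    json_result = load_with_fallback(json_path)

print(pkl_result)
print(json_result)
```

Returning the format label alongside the object, as model_fn does, lets later code branch on how the model was stored without inspecting the object itself.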

Let’s create our framework estimator and call fit to start the training job. As before, we’ll create a separate trial for this run so that we can use Studio to compare it with other trials. Because we are running in framework mode, we also need to pass additional parameters, like the entry point script and the framework version, to the estimator.

As training progresses, you can see logs as Debugger evaluates the rule against the training job.

[ ]:

entry_point_script = "xgboost_customer_churn.py"

trial = Trial.create(
    trial_name="framework-mode-trial-{}".format(strftime("%Y-%m-%d-%H-%M-%S", gmtime())),
    experiment_name=customer_churn_experiment.experiment_name,
    sagemaker_boto_client=boto3.client("sagemaker"),
)

framework_xgb = sagemaker.xgboost.XGBoost(
    image_name=docker_image_name,
    entry_point=entry_point_script,
    role=role,
    framework_version="0.90-2",
    py_version="py3",
    hyperparameters=hyperparams,
    train_instance_count=1,
    train_instance_type="ml.m4.xlarge",
    output_path="s3://{}/{}/output".format(bucket, prefix),
    base_job_name="demo-xgboost-customer-churn",
    sagemaker_session=sess,
    rules=debug_rules,
)

framework_xgb.fit(
    {"train": s3_input_train, "validation": s3_input_validation},
    experiment_config={
        "ExperimentName": customer_churn_experiment.experiment_name,
        "TrialName": trial.trial_name,
        "TrialComponentDisplayName": "Training",
    },
)
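In framework mode, the entry point script receives hyperparameters as command-line arguments and reads data channel locations from environment variables such as SM_CHANNEL_TRAIN, which is the pattern parse_args in the script relies on. The following is a minimal sketch of that pattern. The argument names, default values, and the /opt/ml paths in the usage lines are assumptions chosen for illustration, and the environment is passed in as a plain dictionary so the example runs anywhere.

```python
import argparse


def parse_args(argv, environ):
    """Parse hyperparameters from argv; channel paths default to environment values."""
    parser = argparse.ArgumentParser()
    # Hyperparameters (illustrative names and defaults).
    parser.add_argument("--max_depth", type=int, default=5)
    parser.add_argument("--eta", type=float, default=0.2)
    # Data channels: fall back to the environment when not given explicitly.
    parser.add_argument("--train", type=str, default=environ.get("SM_CHANNEL_TRAIN"))
    parser.add_argument("--validation", type=str, default=environ.get("SM_CHANNEL_VALIDATION"))
    return parser.parse_args(argv)


# Stand-in for os.environ inside a training container (paths are assumptions).
example_env = {
    "SM_CHANNEL_TRAIN": "/opt/ml/input/data/train",
    "SM_CHANNEL_VALIDATION": "/opt/ml/input/data/validation",
}

args = parse_args(["--max_depth", "3", "--eta", "0.1"], example_env)
print(args.max_depth, args.eta, args.train, args.validation)
```

Keeping the environment lookup in the argument defaults means the same script can also be run locally by passing --train and --validation explicitly.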

After the training job has been running for a while, you can view debug information in the Debugger panel. To reach this panel, click through the experiment, then the trial, and then the trial component.

Host the model

Now that we’ve trained the model, let’s deploy it to a hosted endpoint. To monitor the model after it’s hosted and serving requests, we will also add configurations to capture data that is being sent to the endpoint.

[ ]:

data_capture_prefix = "{}/datacapture".format(prefix)

endpoint_name = "demo-xgboost-customer-churn-" + strftime("%Y-%m-%d-%H-%M-%S", gmtime())

print("EndpointName = {}".format(endpoint_name))

[ ]:

xgb_predictor = xgb.deploy(
    initial_instance_count=1,
    instance_type="ml.m4.xlarge",
    endpoint_name=endpoint_name,
    data_capture_config=DataCaptureConfig(
        enable_capture=True,
        sampling_percentage=100,
        destination_s3_uri="s3://{}/{}".format(bucket, data_capture_prefix),
    ),
)

Invoke the deployed model

Now that we have a hosted endpoint running, we can make real-time predictions from our model by making an HTTP POST request. But first, we’ll need to set up serializers and deserializers for passing our test_data NumPy arrays to the model behind the endpoint.

[ ]:

xgb_predictor.content_type = "text/csv"

xgb_predictor.serializer = csv_serializer

xgb_predictor.deserializer = None
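To make the serializer's role concrete, the sketch below shows roughly what turning one row of feature values into a text/csv payload involves. It is a standard-library illustration only, not the SDK's csv_serializer implementation; the helper name `row_to_csv_payload` and the sample values are assumptions.

```python
import csv
import io


def row_to_csv_payload(row):
    """Serialize one row of feature values into a single CSV line without a trailing newline."""
    buffer = io.StringIO()
    csv.writer(buffer, lineterminator="\n").writerow(row)
    return buffer.getvalue().rstrip("\n")


# Illustrative feature values, not taken from the churn dataset.
payload = row_to_csv_payload([186, 0.1, 137.8, 97, 0, 1])
print(payload)
```

Using the csv module rather than a plain join keeps quoting correct if a value ever contains a comma.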

Now, we’ll loop over our test dataset and collect predictions by invoking the XGBoost endpoint:

[ ]:

print(
    "Sending test traffic to the endpoint {}. \nPlease wait for a minute...".format(endpoint_name)
)

with open("data/test_sample.csv", "r") as f:
    for row in f:
        payload = row.rstrip("\n")
        response = xgb_predictor.predict(data=payload)
        time.sleep(0.5)

Verify data capture in Amazon S3

Because we made some real-time predictions by sending data to our endpoint, we should have also captured that data for monitoring purposes.

Let’s list the data capture files stored in Amazon S3. Expect to see different files from different time periods organized by the hour in which the invocation occurred. The format of the S3 path is:

s3://{destination-bucket-prefix}/{endpoint-name}/{variant-name}/yyyy/mm/dd/hh/filename.jsonl

[ ]:

from time import sleep

current_endpoint_capture_prefix = "{}/{}".format(data_capture_prefix, endpoint_name)
for _ in range(12):  # wait up to a minute to see captures in S3
    capture_files = S3Downloader.list("s3://{}/{}".format(bucket, current_endpoint_capture_prefix))
    if capture_files:
        break
    sleep(5)

print("Found Data Capture Files:")
print(capture_files)
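Because the capture path encodes the endpoint, the variant, and the hour of the invocation, each listed URI can be split back into those parts. The sketch below parses the layout described above; the helper name `parse_capture_uri` and the bucket, prefix, endpoint, and file name in the usage lines are made-up values for illustration.

```python
from datetime import datetime


def parse_capture_uri(uri, endpoint_name):
    """Split a data capture URI into variant name, capture hour, and file name.

    Assumes the layout .../{endpoint-name}/{variant-name}/yyyy/mm/dd/hh/filename.jsonl
    """
    parts = uri.split("/")
    start = parts.index(endpoint_name)
    variant, year, month, day, hour, filename = parts[start + 1 : start + 7]
    captured_hour = datetime(int(year), int(month), int(day), int(hour))
    return {"variant": variant, "hour": captured_hour, "filename": filename}


# Hypothetical URI following the documented layout.
example_uri = (
    "s3://example-bucket/sagemaker/churn/datacapture/"
    "demo-endpoint/AllTraffic/2020/01/15/09/capture-0001.jsonl"
)
info = parse_capture_uri(example_uri, "demo-endpoint")
print(info["variant"], info["hour"].isoformat(), info["filename"])
```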

All the captured data is stored in a SageMaker-specific JSON Lines file. Next, let’s take a quick peek at the contents of a single line, pretty-printed as JSON, so that we can observe the format more easily.

[ ]:

capture_file = S3Downloader.read_file(capture_files[-1])

print("=====Single Data Capture====")

print(json.dumps(json.loads(capture_file.split("\n")[0]), indent=2)[:2000])

As you can see, each inference request is captured in one line of the jsonl file. The line contains both the input and the output merged together. In our example, we provided the ContentType as text/csv, which is reflected in the observedContentType value. The encoding value shows the encoding that was used for the input and output payloads in the capture format.

To recap, we have observed how you can enable capturing the input and/or output payloads to an endpoint with the data_capture_config parameter. We have also observed what the captured format looks like in S3. Let’s continue to explore how SageMaker helps with monitoring the data collected in S3.
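As a rough illustration of this structure, the sketch below parses one hand-written capture line and pulls out the input and output payloads. The record is a simplified assumption modeled on the fields named above (observedContentType, encoding); real capture records carry additional metadata, and all values here are invented.

```python
import json

# Hand-written sample record (an assumption, not real endpoint output).
sample_line = json.dumps(
    {
        "captureData": {
            "endpointInput": {
                "observedContentType": "text/csv",
                "mode": "INPUT",
                "data": "186,0.1,137.8,97",
                "encoding": "CSV",
            },
            "endpointOutput": {
                "observedContentType": "text/csv; charset=utf-8",
                "mode": "OUTPUT",
                "data": "0.0142",
                "encoding": "CSV",
            },
        }
    }
)


def summarize_capture(line):
    """Return content type, encoding, input features, and prediction from one capture line."""
    capture = json.loads(line)["captureData"]
    request, response = capture["endpointInput"], capture["endpointOutput"]
    return {
        "content_type": request["observedContentType"],
        "encoding": request["encoding"],
        "features": [float(value) for value in request["data"].split(",")],
        "prediction": float(response["data"]),
    }


summary = summarize_capture(sample_line)
print(summary)
```

The sketch only handles CSV-encoded payloads; a payload captured with a different encoding value would need its own decoding step.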

Amazon SageMaker Model Monitor

In addition to collecting data, Amazon SageMaker lets you monitor and evaluate the data observed by the endpoints with Amazon SageMaker Model Monitor. For this, we need to:

1. Create a baseline to compare against real-time traffic.

2. When the baseline is ready, set up a schedule to continuously evaluate and compare against the baseline.

3. Send synthetic traffic to trigger alarms.

Important: It takes one hour or more to complete this section because the shortest monitoring polling interval is one hour. To see how it looks after running for a few hours, once some of the synthetic traffic has triggered errors, see the following graphic.

Baselining and continuous monitoring

1. Suggest baseline constraints with the training dataset

The training dataset that you used to train the model is usually a good baseline dataset. Note that the training dataset data schema and the inference dataset schema should match exactly (for example, the number and type of the features).
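One lightweight way to sanity-check this requirement before baselining is to compare the number of columns and a coarse per-column type between a baseline sample and an inference sample. The sketch below does that with the standard library. The type rule (number versus string), the helper names, and the sample rows are simplifying assumptions; Model Monitor performs its own, richer checks.

```python
import csv
import io


def infer_type(value):
    """Classify a CSV field as 'number' or 'string' (a deliberately coarse rule)."""
    try:
        float(value)
        return "number"
    except ValueError:
        return "string"


def column_types(csv_text):
    """Infer coarse column types from the first row of headerless CSV text."""
    first_row = next(csv.reader(io.StringIO(csv_text)))
    return [infer_type(value) for value in first_row]


def schemas_match(baseline_csv, inference_csv):
    """True when both samples have the same number of columns with the same coarse types."""
    return column_types(baseline_csv) == column_types(inference_csv)


# Invented sample rows for illustration.
baseline_sample = "186,0.1,137.8,no\n"
matching_sample = "101,0.0,99.5,yes\n"
shifted_sample = "101,yes,0.0,99.5\n"

print(schemas_match(baseline_sample, matching_sample))
print(schemas_match(baseline_sample, shifted_sample))
```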

From our training dataset, let’s ask Amazon SageMaker to suggest a set of baseline constraints and generate descriptive statistics to explore the data. For this example, let’s upload the training dataset that we used to train the model. We’ll use the dataset file with column headers so that we have descriptive feature names.

[ ]:

baseline_prefix = prefix + "/baselining"

baseline_data_prefix = baseline_prefix + "/data"

baseline_results_prefix = baseline_prefix + "/results"

baseline_data_uri = "s3://{}/{}".format(bucket, baseline_data_prefix)

baseline_results_uri = "s3://{}/{}".format(bucket, baseline_results_prefix)

print("Baseline data uri: {}".format(baseline_data_uri))

print("Baseline results uri: {}".format(baseline_results_uri))

baseline_data_path = S3Uploader.upload("data/training-dataset-with-header.csv", baseline_data_uri)

Create a baselining job with the training dataset

Now that we have the training data ready in Amazon S3, let’s start a job to suggest constraints. To generate the constraints, the suggest_baseline helper starts a ProcessingJob using a processing container provided by Amazon SageMaker.

[ ]:

my_default_monitor = DefaultModelMonitor(
    role=role,
    instance_count=1,
    instance_type="ml.m5.xlarge",
    volume_size_in_gb=20,
    max_runtime_in_seconds=3600,
)

baseline_job = my_default_monitor.suggest_baseline(
    baseline_dataset=baseline_data_path,
    dataset_format=DatasetFormat.csv(header=True),
    output_s3_uri=baseline_results_uri,
    wait=True,
)

Once the job succeeds, we can explore the baseline_results_uri location in S3 to see what files were stored there.

[ ]:

print("Found Files:")

S3Downloader.list("s3://{}/{}".format(bucket, baseline_results_prefix))

We have a constraints.json file that has information about suggested constraints. We also have a statistics.json file, which contains statistical information about the data in the baseline.

[ ]:

baseline_job = my_default_monitor.latest_baselining_job

schema_df = pd.io.json.json_normalize(baseline_job.baseline_statistics().body_dict["features"])

schema_df.head(10)

[ ]:

constraints_df = pd.io.json.json_normalize(
    baseline_job.suggested_constraints().body_dict["features"]
)

constraints_df.head(10)
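json_normalize flattens each nested feature entry into a single row whose column names join the nested keys with dots. The sketch below reproduces that idea in plain Python for one hand-written feature entry. The `flatten` helper is hypothetical, and the field names and numbers in the sample are assumptions for illustration, not output from the baselining job.

```python
def flatten(record, parent_key="", sep="."):
    """Flatten nested dictionaries into one level, joining keys with `sep`."""
    flat = {}
    for key, value in record.items():
        full_key = parent_key + sep + key if parent_key else key
        if isinstance(value, dict):
            # Recurse into nested dictionaries, carrying the key prefix along.
            flat.update(flatten(value, full_key, sep))
        else:
            flat[full_key] = value
    return flat


# Invented feature entry with the kind of nesting found in a statistics file.
sample_feature = {
    "name": "Account Length",
    "inferred_type": "Integral",
    "numerical_statistics": {
        "common": {"num_present": 2333, "num_missing": 0},
        "mean": 101.3,
    },
}

flat_row = flatten(sample_feature)
for column, value in flat_row.items():
    print(column, "=", value)
```

Each flattened key corresponds to one column of the resulting DataFrame, which is why the tables above show dotted column names.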

2. Analyzing subsequent captures for data quality issues

Now that we have processed the baseline dataset to get baseline statistics and constraints, let’s use monitoring schedules to monitor and analyze the data that is being sent to the endpoint.

Create a schedule

Create a monitoring schedule for the previously created endpoint. The schedule specifies the cadence at which we run a new processing job to compare recent data captures to the baseline.
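The cadence is expressed as a cron-style schedule expression. The notebook uses the SDK's CronExpressionGenerator for this; the helpers below are hypothetical stand-ins that only illustrate the shape of such expressions, assuming the six-field `cron(...)` form used for monitoring schedules.

```python
def hourly_expression():
    """Run at minute 0 of every hour."""
    return "cron(0 * ? * * *)"


def daily_expression(hour=0):
    """Run once a day at minute 0 of the given hour (0-23)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    return "cron(0 {} ? * * *)".format(hour)


print(hourly_expression())
print(daily_expression(6))
```

An hourly expression matches the shortest polling interval mentioned earlier in this section.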

[ ]:

# First, copy over some test scripts to the S3 bucket so that they can be used for pre and post processing
code_prefix = "{}/code".format(prefix)
pre_processor_script = S3Uploader.upload(
    "preprocessor.py", "s3://{}/{}".format(bucket, code_prefix)
)
s3_code_postprocessor_uri = S3Uploader.upload(
    "postprocessor.py", "s3://{}/{}".format(bucket, code_prefix)
)

We are now ready to create a model monitoring schedule for the endpoint created earlier, using the baseline resources (constraints and statistics) generated above.

[ ]:

from sagemaker.model_monitor import CronExpressionGenerator

from time import gmtime, strftime

reports_prefix = "{}/reports".format(prefix)